Robust Action Governor for Uncertain Piecewise Affine Systems with Non-convex Constraints and Safe Reinforcement Learning

Paper and Code

Jul 17, 2022

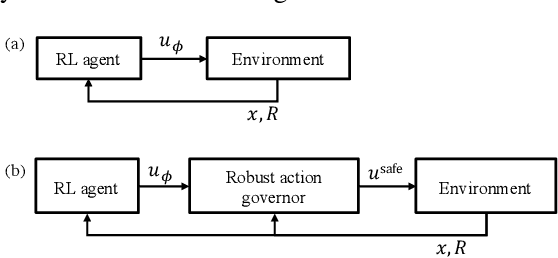

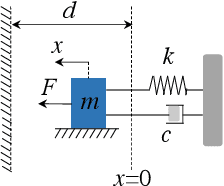

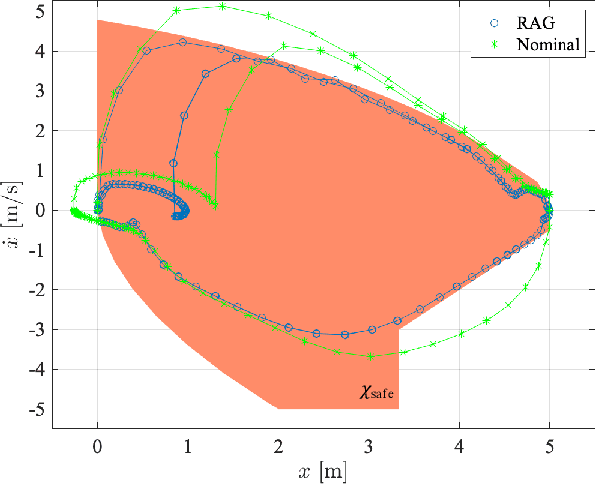

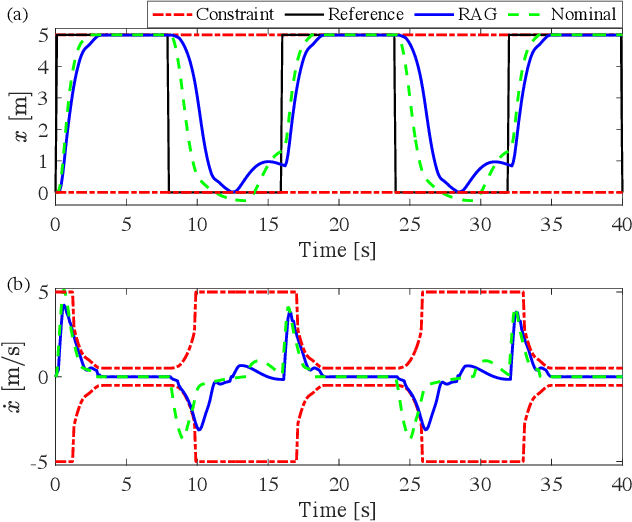

The action governor is an add-on scheme to a nominal control loop that monitors and adjusts the control actions to enforce safety specifications expressed as pointwise-in-time state and control constraints. In this paper, we introduce the Robust Action Governor (RAG) for systems the dynamics of which can be represented using discrete-time Piecewise Affine (PWA) models with both parametric and additive uncertainties and subject to non-convex constraints. We develop the theoretical properties and computational approaches for the RAG. After that, we introduce the use of the RAG for realizing safe Reinforcement Learning (RL), i.e., ensuring all-time constraint satisfaction during online RL exploration-and-exploitation process. This development enables safe real-time evolution of the control policy and adaptation to changes in the operating environment and system parameters (due to aging, damage, etc.). We illustrate the effectiveness of the RAG in constraint enforcement and safe RL using the RAG by considering their applications to a soft-landing problem of a mass-spring-damper system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge