Restore from Restored: Single Image Denoising with Pseudo Clean Image


Mar 09, 2020

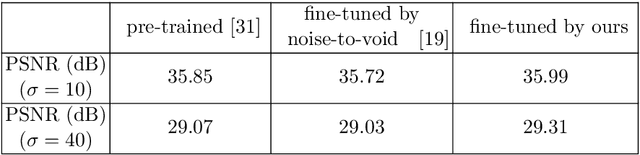

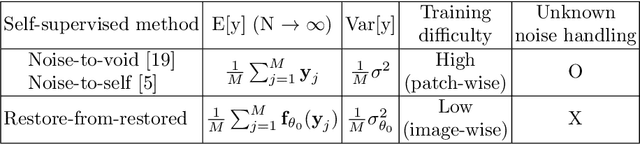

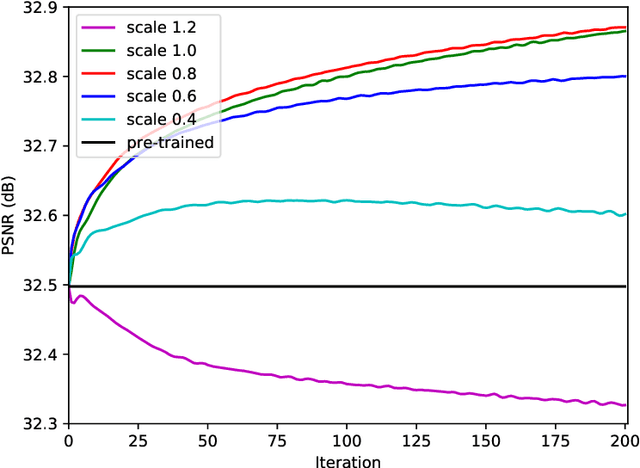

Under certain statistical assumptions about the noise (e.g., zero-mean noise), recent self-supervised denoising approaches learn network parameters without ground-truth clean images, and they can restore an image by exploiting information available from the given input (i.e., internal statistics) at test time. However, self-supervised methods have not yet been properly combined with conventional supervised denoising methods, which train denoising networks on a large number of external training images. Thus, we propose a new denoising approach that can greatly outperform state-of-the-art supervised denoising methods by adapting (fine-tuning) their network parameters to the given specific input through self-supervision, without changing the original network architectures. We demonstrate that the proposed method can be easily employed with state-of-the-art denoising networks without additional parameters, and that it achieves state-of-the-art performance on numerous denoising benchmark datasets.
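The test-time adaptation described above can be illustrated with a minimal toy sketch. It is not the authors' implementation. It assumes, based on the title, that the output of the pretrained denoiser serves as a pseudo clean target, that synthetic zero-mean Gaussian noise of a known level `sigma` is added to that target to form pseudo noisy inputs, and that the same parameters are then fine-tuned on these pairs. The 3-tap linear filter standing in for a denoising network, the 1-D signal, and all names (`apply_filter`, `adapt_to_input`, `pretrained`) are hypothetical.

```python
import random


def apply_filter(signal, taps):
    """Toy 'denoiser': a 3-tap linear filter with edge replication."""
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(taps[0] * left + taps[1] * signal[i] + taps[2] * right)
    return out


def adapt_to_input(noisy, pretrained_taps, sigma, steps=200, lr=0.05, seed=0):
    """Fine-tune the filter taps on one noisy input (assumed scheme, see lead-in)."""
    rng = random.Random(seed)
    taps = list(pretrained_taps)
    n = len(noisy)
    # Pseudo clean target: what the pretrained denoiser restores from the input.
    pseudo_clean = apply_filter(noisy, pretrained_taps)
    for _ in range(steps):
        # Pseudo noisy input: the target corrupted with fresh zero-mean noise.
        pseudo_noisy = [v + rng.gauss(0.0, sigma) for v in pseudo_clean]
        restored = apply_filter(pseudo_noisy, taps)
        # Analytic gradient of the mean squared error with respect to each tap.
        grad = [0.0, 0.0, 0.0]
        for i in range(n):
            err = restored[i] - pseudo_clean[i]
            neighbors = (
                pseudo_noisy[max(i - 1, 0)],
                pseudo_noisy[i],
                pseudo_noisy[min(i + 1, n - 1)],
            )
            for k in range(3):
                grad[k] += 2.0 * err * neighbors[k] / n
        # Plain gradient descent; the architecture (3 taps) never changes.
        taps = [t - lr * g for t, g in zip(taps, grad)]
    return taps


# Synthetic test input: a step signal plus zero-mean Gaussian noise.
data_rng = random.Random(1)
sigma = 0.1
clean = [1.0 if 20 <= i < 40 else 0.0 for i in range(64)]
noisy = [v + data_rng.gauss(0.0, sigma) for v in clean]

pretrained = [0.25, 0.5, 0.25]  # stand-in for externally trained parameters
adapted = adapt_to_input(noisy, pretrained, sigma)
restored = apply_filter(noisy, adapted)
```

The sketch only mirrors the structure of the idea: the parameter count and architecture stay fixed, and the only training signal comes from the given input. A real denoising network, its optimizer, and the noise model would replace the toy filter and the hand-written gradient.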
