Resource allocation optimization using artificial intelligence methods in various computing paradigms: A Review

Mar 23, 2022

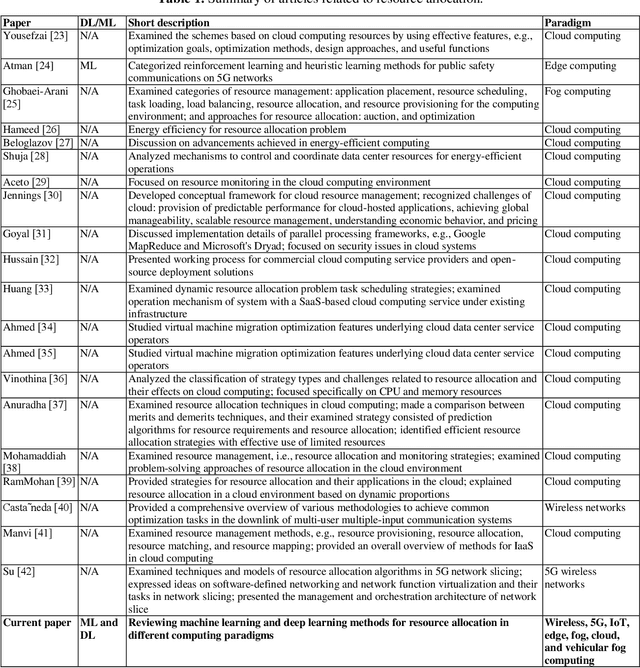

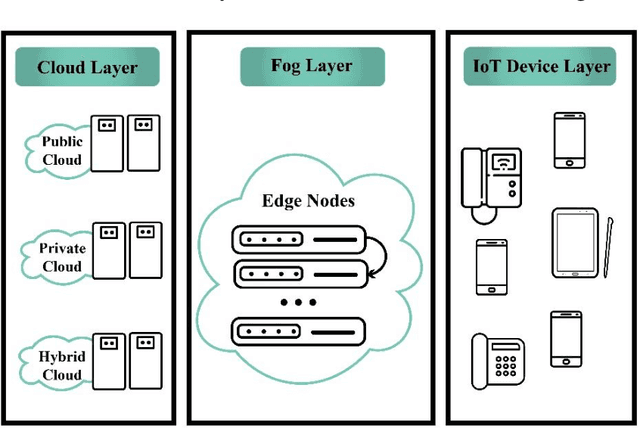

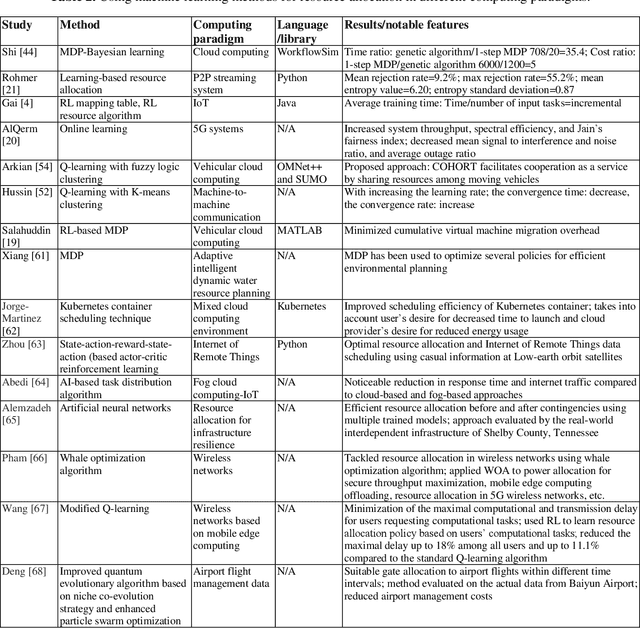

With the advent of smart devices, the demand for various computational paradigms such as the Internet of Things, fog computing, and cloud computing has increased. However, effective resource allocation remains challenging in these paradigms. This paper presents a comprehensive literature review on the application of artificial intelligence (AI) methods, such as deep learning (DL) and machine learning (ML), to resource allocation optimization in computational paradigms. To the best of our knowledge, no existing review covers AI-based resource allocation approaches across different computational paradigms. The reviewed ML-based approaches are categorized into supervised learning and reinforcement learning (RL). Moreover, DL-based approaches and their combination with RL are surveyed. The review ends with a discussion of open research directions and a conclusion.
