Reservoir computing approaches for representation and classification of multivariate time series


Nov 06, 2018

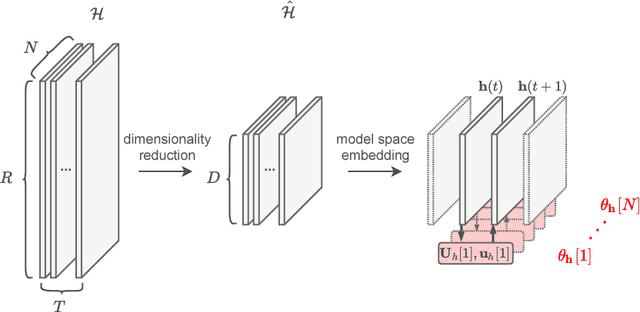

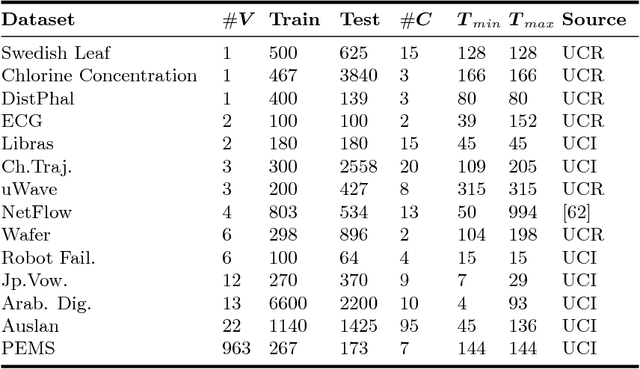

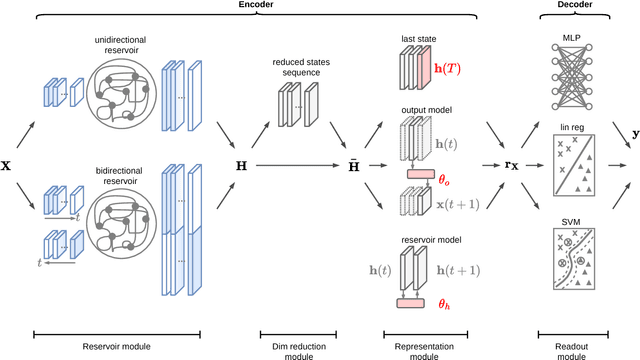

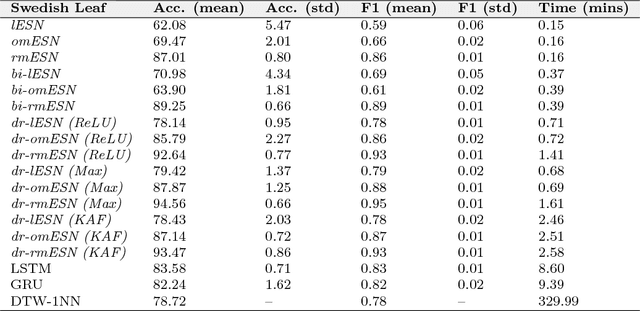

Classification of multivariate time series (MTS) has been tackled with a large variety of methodologies and applied to a wide range of scenarios. Among the existing approaches, reservoir computing (RC) techniques, which implement a fixed, high-dimensional recurrent network to process sequential data, are computationally efficient tools for generating a vectorial, fixed-size representation of the MTS that can be further processed by standard classifiers. Despite their unrivaled training speed, MTS classifiers based on a standard RC architecture fail to achieve the same accuracy as other classifiers, such as those exploiting fully trainable recurrent networks. In this paper, we introduce the reservoir model space, an RC approach to learn vectorial representations of MTS in an unsupervised fashion. Each MTS is encoded within the parameters of a linear model trained to predict a low-dimensional embedding of the reservoir dynamics. Our model space yields a powerful representation of the MTS and, thanks to an intermediate dimensionality reduction procedure, attains computational performance comparable to other RC methods. As a second contribution, we propose a modular RC framework for MTS classification, with an associated open source Python library. By combining the different modules, it is possible to seamlessly implement advanced RC architectures, including our proposed unsupervised representation, bidirectional reservoirs, and non-linear readouts, such as deep neural networks with both fixed and flexible activation functions. Results obtained on benchmark and real-world MTS datasets show that RC classifiers are dramatically faster to train than fully trainable alternatives and, when implemented using our proposed representation, also achieve superior classification accuracy.
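The reservoir model space idea described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' library and does not reproduce its API: every function name and hyperparameter below is hypothetical. It also assumes details the abstract does not state, namely a tanh reservoir with uniformly sampled weights, PCA as the dimensionality reduction step, and a ridge-regularized linear model that predicts the next reduced reservoir state from the current one.

```python
import numpy as np


def make_reservoir(n_inputs, n_units=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Draw fixed (untrained) input and recurrent weights. All defaults are assumptions."""
    rng = np.random.default_rng(seed)
    W_in = rng.uniform(-input_scale, input_scale, size=(n_units, n_inputs))
    W = rng.uniform(-1.0, 1.0, size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude equals the requested spectral radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W


def reservoir_states(X, W_in, W):
    """Run one MTS of shape (T, V) through the reservoir; returns states of shape (T, n_units)."""
    h = np.zeros(W.shape[0])
    states = np.empty((X.shape[0], W.shape[0]))
    for t, x in enumerate(X):
        h = np.tanh(W_in @ x + W @ h)
        states[t] = h
    return states


def fit_pca(all_states, n_components):
    """Plain PCA via SVD; returns the mean and a (n_units, n_components) projection matrix."""
    mean = all_states.mean(axis=0)
    _, _, Vt = np.linalg.svd(all_states - mean, full_matrices=False)
    return mean, Vt[:n_components].T


def ridge_fit(A, B, alpha):
    """Solve B ~ A @ W + b with an L2 penalty on W (the bias row is not penalized)."""
    A1 = np.hstack([A, np.ones((A.shape[0], 1))])
    reg = alpha * np.eye(A1.shape[1])
    reg[-1, -1] = 0.0
    return np.linalg.solve(A1.T @ A1 + reg, A1.T @ B)


def model_space_representation(dataset, n_units=100, n_components=5, alpha=1.0, seed=0):
    """Encode each MTS as the flattened parameters of its own linear next-state predictor."""
    W_in, W = make_reservoir(dataset[0].shape[1], n_units, seed=seed)
    states = [reservoir_states(X, W_in, W) for X in dataset]
    # The PCA is fitted once on the states of all series, then shared across them.
    mean, P = fit_pca(np.vstack(states), n_components)
    reps = []
    for H in states:
        Z = (H - mean) @ P                        # low-dimensional reservoir dynamics
        theta = ridge_fit(Z[:-1], Z[1:], alpha)   # predict z(t+1) from z(t)
        reps.append(theta.ravel())
    return np.vstack(reps)
```

Under these assumptions, each series maps to a vector of length `n_components * (n_components + 1)` regardless of its duration, so series of different lengths can be passed to any standard classifier. Because no labels are used, the representation is unsupervised, and the reservoir weights are never trained.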
