Relative Counterfactual Contrastive Learning for Mitigating Pretrained Stance Bias in Stance Detection


May 16, 2024

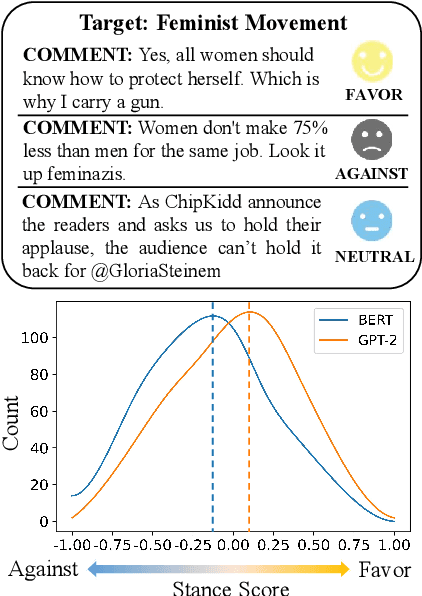

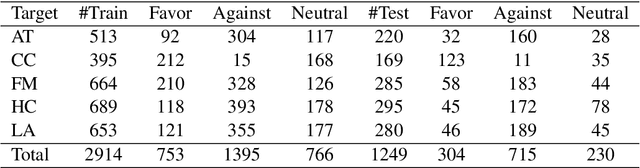

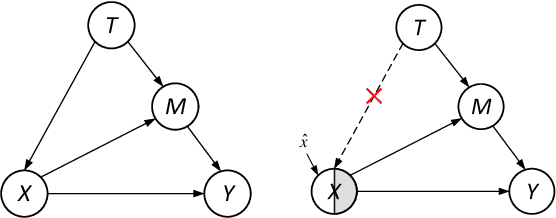

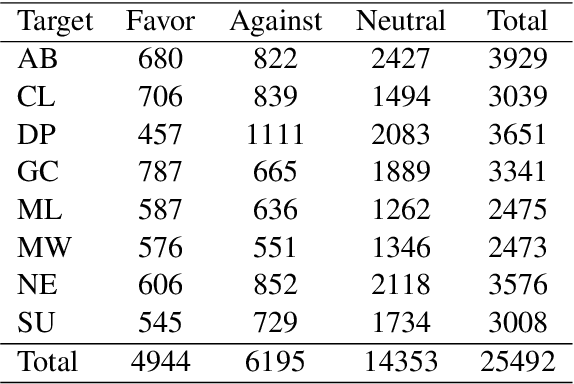

Stance detection classifies the stance relation (Favor, Against, or Neither) between a comment and a target. Pretrained language models (PLMs) are widely used to mine this relation, since their pretrained knowledge improves stance detection performance. However, PLMs also embed "bad" pretrained knowledge about stance into the extracted stance relation semantics, resulting in pretrained stance bias. Measuring pretrained stance bias is not trivial because it is hard to quantify. In this paper, we propose Relative Counterfactual Contrastive Learning (RCCL), in which pretrained stance bias is mitigated as relative stance bias instead of absolute stance bias, to overcome the difficulty of measuring bias. First, we present a new structural causal model that characterizes the complicated relationships among context, PLMs, and stance relations in order to locate pretrained stance bias. Then, based on masked language model prediction, we present a target-aware relative stance sample generation method for obtaining relative bias. Finally, we use contrastive learning based on counterfactual theory to mitigate pretrained stance bias while preserving the context stance relation. Experiments show that the proposed method outperforms stance detection and debiasing baselines.
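The abstract does not give the RCCL objective itself, so the following is only a minimal sketch of a generic InfoNCE-style contrastive loss, included to illustrate what "contrastive learning" over sample representations usually computes. The function names (`cosine`, `info_nce`), the temperature value, and the toy two-dimensional vectors are all assumptions for illustration. How the paper pairs original, relative stance, and counterfactual samples as positives and negatives is not specified in the abstract and is not modeled here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE loss (hypothetical helper, not the paper's RCCL loss).

    The loss is small when the anchor is closer to the positive than to
    every negative, and large otherwise.
    """
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy representations (assumed values, chosen only to show the behavior).
anchor = [1.0, 0.0]
aligned = [1.0, 0.0]
orthogonal = [0.0, 1.0]

loss_when_positive_is_close = info_nce(anchor, aligned, [orthogonal])
loss_when_positive_is_far = info_nce(anchor, orthogonal, [aligned])
```

In this toy setup the first loss is near zero and the second is large, which is the pressure a contrastive objective applies: pull the anchor toward the sample treated as positive and push it away from the samples treated as negatives.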
