Refining Query Representations for Dense Retrieval at Test Time

Paper and Code

May 25, 2022

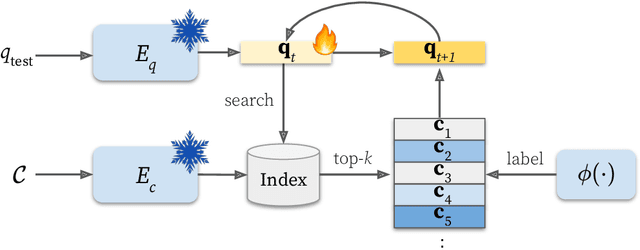

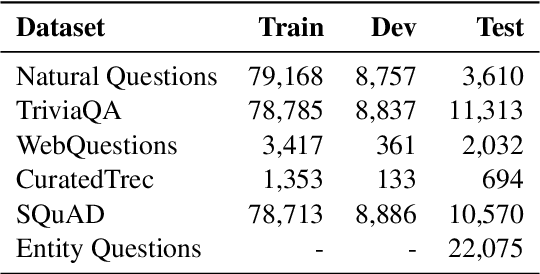

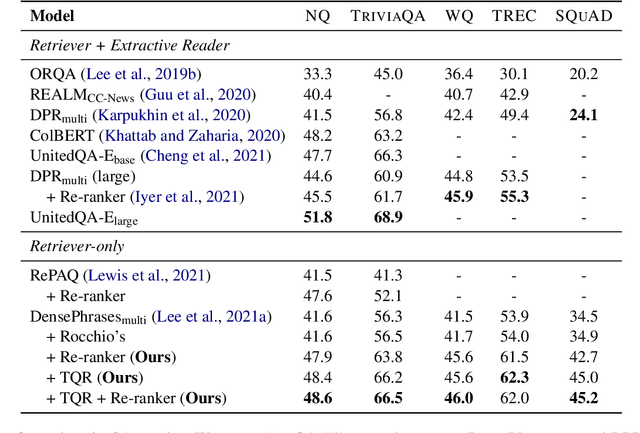

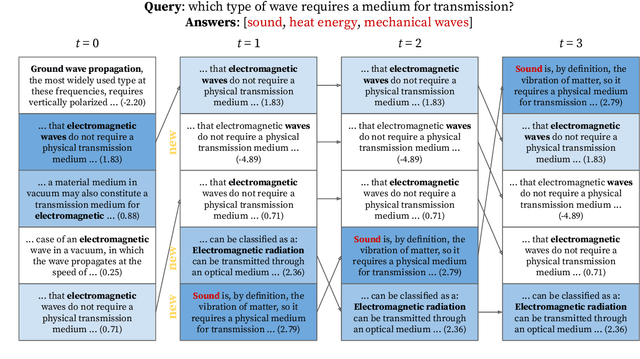

Dense retrieval uses a contrastive learning framework to learn dense representations of queries and contexts. The trained encoders are applied directly to each test query, but they often fail to represent out-of-domain queries accurately. In this paper, we introduce a framework that refines instance-level query representations at test time, using only the signals from the intermediate retrieval results. We optimize the query representation based on the retrieval results, in a manner similar to pseudo relevance feedback (PRF) in information retrieval. Specifically, we adopt a cross-encoder labeler to provide pseudo labels over the retrieval results and iteratively refine the query representation with gradient descent, treating each test query as a single data point to train on. Our theoretical analysis reveals that our framework can be viewed as a generalization of the classical Rocchio algorithm for PRF, which leads us to propose several variants of our method. We show that our test-time query refinement strategy improves the performance of phrase retrieval (+8.1% Acc@1) and passage retrieval (+3.7% Acc@20) for open-domain QA, with large improvements on out-of-domain queries.
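The refinement loop described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the cross-entropy loss between pseudo labels and a softmax over inner-product scores is an assumption, the function name `refine_query` and its parameters are hypothetical, the pseudo labels are hard-coded stand-ins for the output of a cross-encoder labeler, and the candidate set is kept fixed instead of being re-retrieved at each iteration.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D score vector.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def refine_query(query, candidates, pseudo_labels, lr=0.1, steps=10):
    """Refine one query vector by gradient descent (hypothetical helper).

    Assumed loss: cross-entropy between the normalized pseudo labels and
    softmax(candidates @ q). Its gradient with respect to q is
    candidates.T @ (probs - target).
    """
    q = query.astype(float).copy()
    target = pseudo_labels / pseudo_labels.sum()
    for _ in range(steps):
        probs = softmax(candidates @ q)
        grad = candidates.T @ (probs - target)
        # The update adds label-weighted candidates and subtracts
        # model-weighted candidates, which resembles a Rocchio-style update.
        q -= lr * grad
    return q

def cross_entropy(q, candidates, target):
    # Loss value used only to check that refinement makes progress.
    return -np.sum(target * np.log(softmax(candidates @ q)))

# Toy data: three retrieved candidates in a 2-D embedding space.
candidates = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
query = np.array([1.0, 0.2])
# Stand-in for labeler output: only the second candidate is marked relevant.
pseudo_labels = np.array([0.0, 1.0, 0.0])

refined = refine_query(query, candidates, pseudo_labels)
```

In this toy setting the refined query moves toward the candidate marked relevant, so that candidate's score rises and the assumed loss falls. The explicit gradient makes the connection to PRF visible: each step pulls the query toward pseudo-relevant candidates and pushes it away from candidates the current query already scores highly.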
