Recovering medical images from CT film photos

Paper and Code

Mar 10, 2022

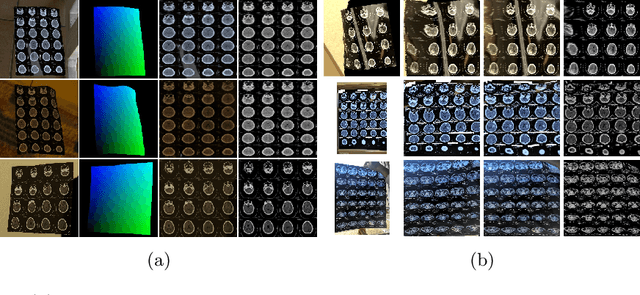

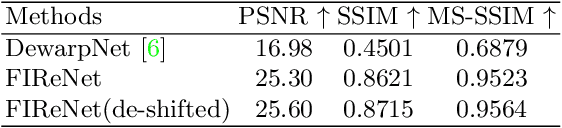

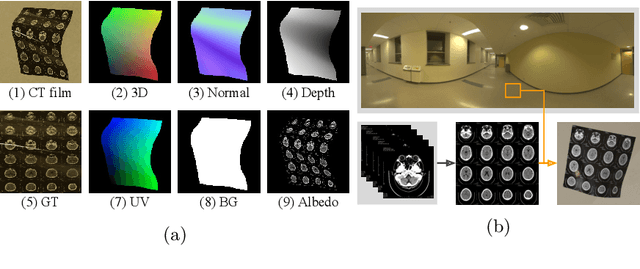

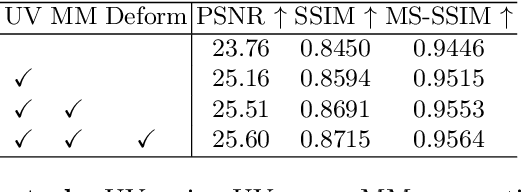

While medical images such as computed tomography (CT) scans are stored in DICOM format in hospital PACS, it is still routine in many countries to print a film as a transferable medium for self-storage and secondary consultation. With the ubiquity of mobile phone cameras, it is also common to take pictures of CT films, and these pictures unfortunately suffer from geometric deformation and illumination variation. In this work, we study the problem of recovering a CT film from such a photo, which is, to the best of our knowledge, the first attempt in the literature. We start by building a large-scale head CT film database, CTFilm20K, consisting of approximately 20,000 pictures, using the widely used computer graphics software Blender. We also record all accompanying information related to the geometric deformation (such as 3D coordinate, depth, normal, and UV maps) and the illumination variation (such as the albedo map). We then propose a deep framework called Film Image Recovery Network (FIReNet) to tackle geometric deformation and illumination variation, using the multiple maps extracted from the CT films to collaboratively guide the recovery process. Finally, we convert the dewarped images to DICOM files with our cascade model for further analysis, such as radiomics feature extraction. Extensive experiments show that our approach outperforms previous approaches. We plan to open source the simulated images and deep models to promote research on CT film image analysis.
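To make the two degradations named in the abstract concrete, here is a minimal NumPy sketch of how per-pixel maps can, in principle, undo them. It is not FIReNet and is not taken from the paper. It assumes a dense backward map (for every pixel of the flat film, the position in the photo to read from) and a multiplicative model in which the photo is albedo times shading. The names `dewarp`, `remove_shading`, `backward_map`, and `shading` are hypothetical.

```python
import numpy as np


def dewarp(photo, backward_map):
    """Resample `photo` through a dense backward map (bilinear interpolation).

    photo:        (H, W) float array, the photographed film.
    backward_map: (H_out, W_out, 2) float array; backward_map[i, j] holds the
                  (row, col) position in `photo` that flat output pixel (i, j)
                  is read from. Assumed to be given (hypothetical input).
    """
    h, w = photo.shape
    # Keep sample positions inside the photo.
    r = np.clip(backward_map[..., 0], 0, h - 1)
    c = np.clip(backward_map[..., 1], 0, w - 1)
    # Integer corners surrounding each sample position.
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    # Fractional offsets used as bilinear weights.
    fr = r - r0
    fc = c - c0
    top = photo[r0, c0] * (1 - fc) + photo[r0, c1] * fc
    bottom = photo[r1, c0] * (1 - fc) + photo[r1, c1] * fc
    return top * (1 - fr) + bottom * fr


def remove_shading(photo, shading, eps=1e-6):
    """Recover albedo under the assumed model photo = albedo * shading."""
    return photo / np.maximum(shading, eps)
```

With an identity map `dewarp` returns the photo unchanged, and with a map whose column index is mirrored it returns the horizontally flipped photo. In a learned pipeline the map and the shading would be predicted by a network rather than supplied.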
