Real-to-Sim: Deep Learning with Auto-Tuning to Predict Residual Errors using Sparse Data


Sep 07, 2022

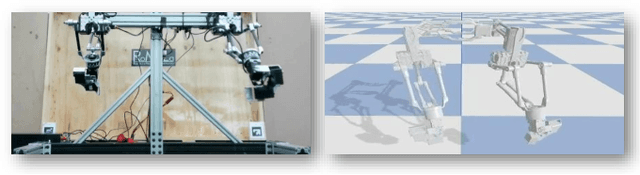

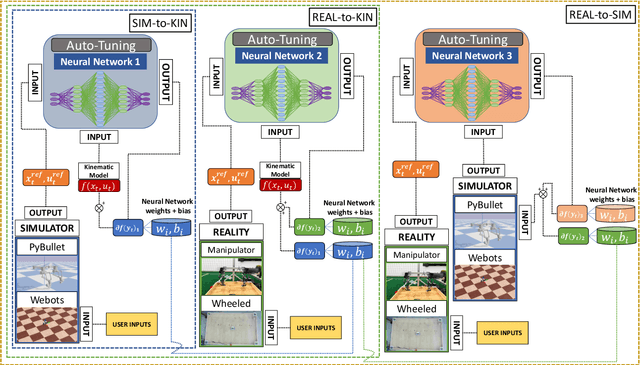

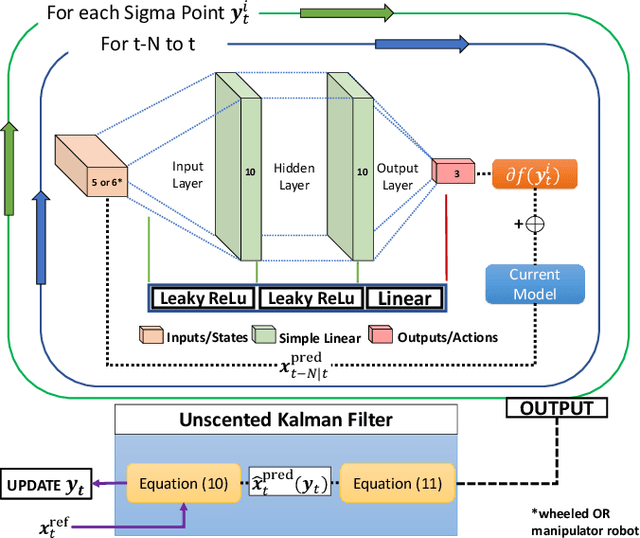

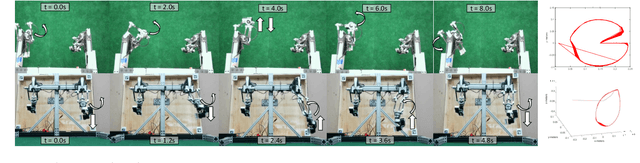

Kinematic or simulator models that closely match the real robot can facilitate model-based control (e.g., model predictive control or linear-quadratic regulators) and model-based trajectory planning (e.g., trajectory optimization), and can decrease the learning time required by reinforcement learning methods. The objective of this work is therefore to learn the residual errors between a kinematic and/or simulator model and the real robot. This is achieved using auto-tuning and neural networks: the parameters of a neural network are updated by an auto-tuning method that applies equations from an Unscented Kalman Filter (UKF) formulation. With this method, we model the residual errors using only small amounts of data, which is a necessity because we improve the simulator/kinematic model by learning directly from hardware operation. We demonstrate our method on robotic hardware (e.g., a manipulator arm) and show that, with the learned residual errors, we can further close the reality gap between kinematic models, simulations, and the real robot.
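To make the idea of updating network parameters with UKF equations concrete, the following is a minimal sketch and not the authors' implementation. It treats the weights of a tiny one-input, one-output network as the filter state, models them as a random walk, and applies a standard UKF measurement update for each sample. Everything specific here is an assumption made for illustration: the network size, the synthetic `0.5 * sin(2x)` stand-in for a residual error, the noise covariances `Q` and `R`, the sigma-point parameters `alpha`, `beta`, `kappa`, and all function names.

```python
import numpy as np

def predict_residual(theta, x, n_hidden):
    """Tiny MLP (1 input, tanh hidden layer, 1 output) with flat parameters theta."""
    w1 = theta[:n_hidden]
    b1 = theta[n_hidden:2 * n_hidden]
    w2 = theta[2 * n_hidden:3 * n_hidden]
    b2 = theta[3 * n_hidden]
    return np.array([w2 @ np.tanh(w1 * x + b1) + b2])

def ukf_step(theta, P, x, y, n_hidden, Q, R, alpha=1.0, beta=2.0, kappa=0.0):
    """One UKF parameter update from a single (input, measured residual) pair."""
    n = theta.size
    lam = alpha**2 * (n + kappa) - n

    # Time update: parameters follow a random walk, so only the covariance grows.
    P = P + Q

    # Sigma points: the mean plus/minus the columns of a matrix square root.
    S = np.linalg.cholesky((n + lam) * P)
    sigmas = np.vstack([theta, theta + S.T, theta - S.T])  # shape (2n+1, n)

    # Mean and covariance weights of the scaled unscented transform.
    wm = np.full(2 * n + 1, 1.0 / (2.0 * (n + lam)))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + (1.0 - alpha**2 + beta)

    # Propagate every sigma point through the network (the measurement model).
    Y = np.array([predict_residual(s, x, n_hidden) for s in sigmas])
    y_hat = wm @ Y
    dY = Y - y_hat
    dT = sigmas - theta

    # Innovation covariance, cross-covariance, and Kalman gain.
    Pyy = dY.T @ (wc[:, None] * dY) + R
    Pxy = dT.T @ (wc[:, None] * dY)
    K = np.linalg.solve(Pyy, Pxy.T).T

    # Measurement update of the parameters and their covariance.
    theta = theta + K @ (y - y_hat)
    P = P - K @ Pyy @ K.T
    P = 0.5 * (P + P.T)  # re-symmetrize against round-off
    return theta, P

# Hypothetical sparse data set: 20 samples of a synthetic residual error.
rng = np.random.default_rng(0)
n_hidden = 8
n_params = 3 * n_hidden + 1
xs = rng.uniform(-1.0, 1.0, size=20)
ys = 0.5 * np.sin(2.0 * xs)

theta = rng.normal(scale=0.1, size=n_params)  # small random initial weights
P = 0.1 * np.eye(n_params)                    # assumed initial uncertainty
Q = 1e-6 * np.eye(n_params)                   # assumed process noise
R = np.array([[1e-2]])                        # assumed measurement noise

def mse(params):
    preds = np.array([predict_residual(params, x, n_hidden)[0] for x in xs])
    return float(np.mean((preds - ys) ** 2))

mse_before = mse(theta)
for _ in range(30):  # several passes over the same sparse data
    for x, y in zip(xs, ys):
        theta, P = ukf_step(theta, P, x, np.array([y]), n_hidden, Q, R)
mse_after = mse(theta)

print(f"MSE before: {mse_before:.5f}, after: {mse_after:.5f}")
```

In this sketch the filter needs no gradients: the sigma points act as a derivative-free linearization of the network, and the covariance `P` scales each update by how uncertain the weights still are. That property is one plausible reason such an update can be attractive when only a few hardware samples are available.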
