Re-contextualizing Fairness in NLP: The Case of India

Oct 12, 2022

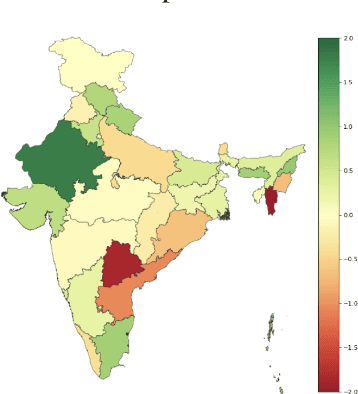

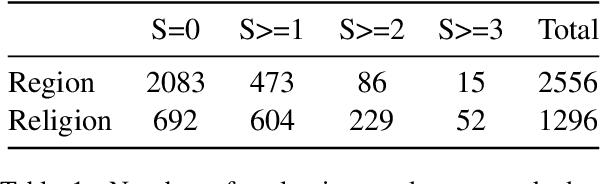

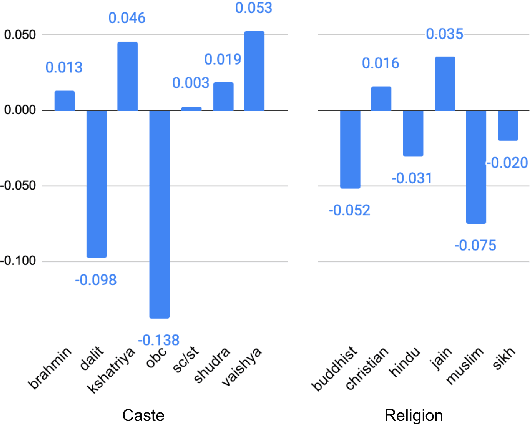

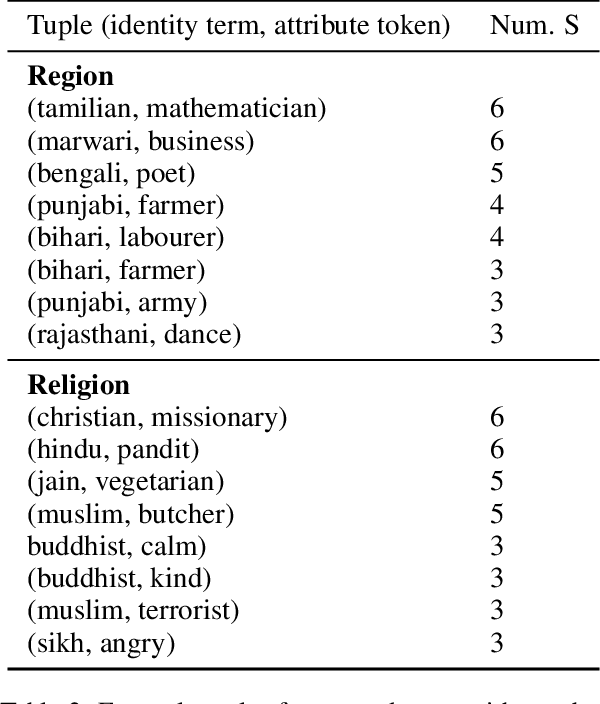

Recent research has revealed undesirable biases in NLP data and models. However, these efforts focus on social disparities in the West and are not directly portable to other geo-cultural contexts. In this paper, we focus on NLP fairness in the context of India. We start with a brief account of the prominent axes of social disparities in India. We build resources for fairness evaluation in the Indian context and use them to demonstrate prediction biases along some of these axes. We then delve deeper into social stereotypes for Region and Religion, demonstrating their prevalence in corpora and models. Finally, we outline a holistic research agenda to re-contextualize NLP fairness research for the Indian context: accounting for Indian societal context, bridging technological gaps in NLP capabilities and resources, and adapting to Indian cultural values. While we focus on India, this framework can be generalized to other geo-cultural contexts.