RCC-GAN: Regularized Compound Conditional GAN for Large-Scale Tabular Data Synthesis

May 24, 2022

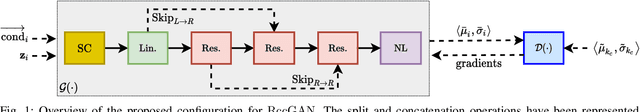

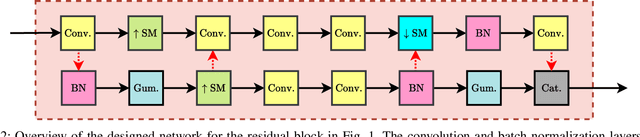

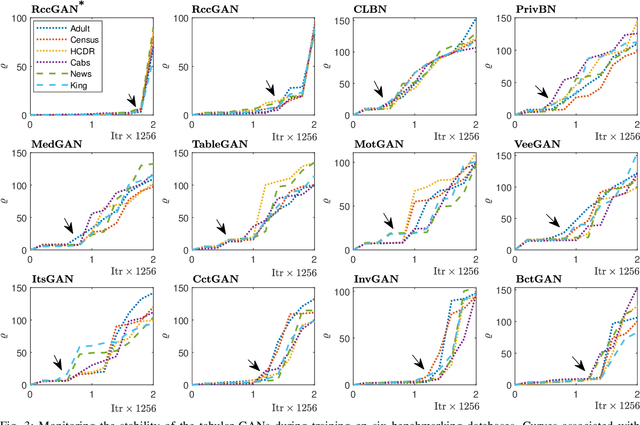

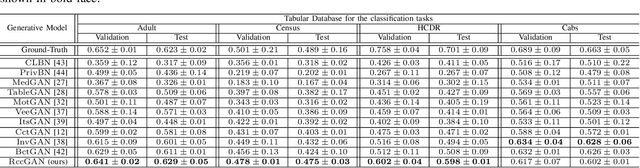

This paper introduces a novel generative adversarial network (GAN) for synthesizing large-scale tabular databases that contain continuous, discrete, and binary features. Technically, our GAN belongs to the category of class-conditioned generative models with a predefined conditional vector. However, we propose a new formulation for deriving such a vector that incorporates binary and discrete features simultaneously. We refer to this novel definition as the compound conditional vector and employ it for training the generator network. The core architecture of this network is a three-layered deep residual neural network with skip connections. To improve the stability of such a complex architecture, we present a regularization scheme that limits unprecedented variations in its weight vectors during training. This regularization approach is compatible with the nature of adversarial training and is not computationally prohibitive at runtime. Furthermore, we constantly monitor the variation of the weight vectors to identify potential instabilities or irregularities and thereby measure the strength of our proposed regularizer. To this end, we also develop a new metric, based on singular value decomposition, for tracking sudden perturbations in the weight vectors. Finally, we evaluate the performance of our proposed synthesis approach on six benchmark tabular databases, namely Adult, Census, HCDR, Cabs, News, and King. The results show that, in the majority of cases, our proposed RccGAN outperforms other conventional and modern generative models in terms of accuracy, stability, and reliability.
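The abstract does not define the SVD-based monitoring metric, so the following is only a minimal sketch of the general idea: compare the singular values of a weight matrix across two training snapshots and report their relative change. The function name `spectral_drift`, the normalization, and the synthetic matrices are assumptions made for illustration, not the paper's metric.

```python
import numpy as np

def spectral_drift(w_prev, w_curr, eps=1e-12):
    """Relative change in singular values between two weight snapshots.

    Hypothetical helper for illustration; not the metric defined in the paper.
    """
    # Singular values only; the singular vectors are not needed here.
    s_prev = np.linalg.svd(w_prev, compute_uv=False)
    s_curr = np.linalg.svd(w_curr, compute_uv=False)
    return float(np.linalg.norm(s_curr - s_prev) / (np.linalg.norm(s_prev) + eps))

# Synthetic stand-ins for one generator layer at consecutive training steps.
rng = np.random.default_rng(0)
w0 = rng.normal(size=(64, 32))
w_small = w0 + 1e-3 * rng.normal(size=w0.shape)  # a well-behaved update
w_large = w0 + 1.0 * rng.normal(size=w0.shape)   # a sudden, large perturbation

drift_small = spectral_drift(w0, w_small)
drift_large = spectral_drift(w0, w_large)
```

Under these assumptions, a training loop could log such a value per layer at each step and flag steps where it spikes, which is one plausible way to gauge how well a regularizer is containing weight variation.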
