RandomSEMO: Normality Learning Of Moving Objects For Video Anomaly Detection


Feb 13, 2022

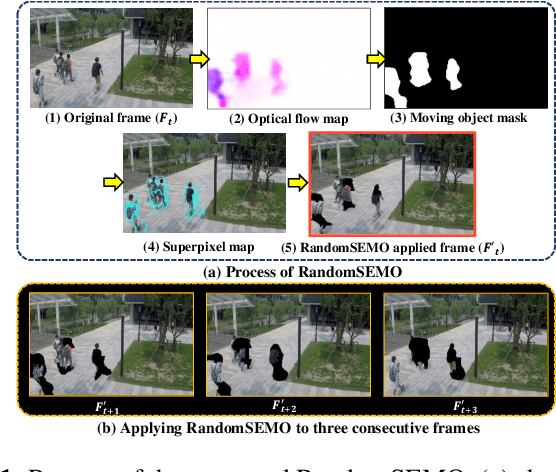

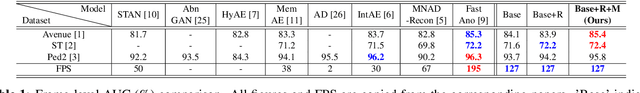

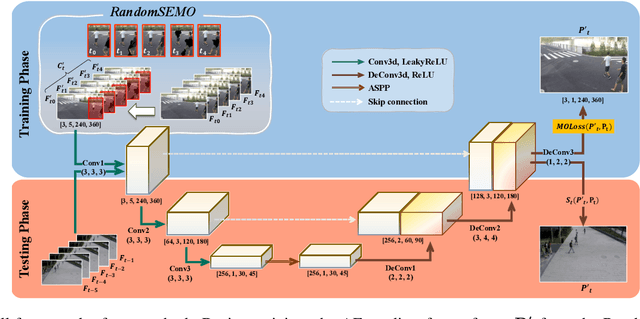

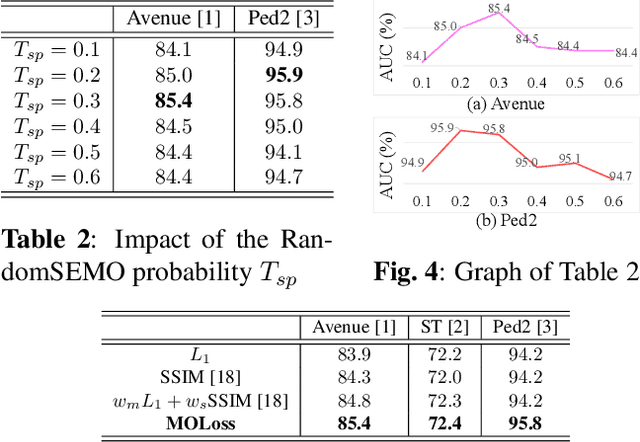

Recent anomaly detection algorithms have shown strong performance by adopting frame-predicting autoencoders. However, these methods face two challenges. First, they are likely to be trained to be excessively powerful, generating even abnormal frames well, which leads to failure in detecting anomalies. Second, they are distracted by the large number of objects captured in both foreground and background. To solve these problems, we propose a novel superpixel-based video data transformation technique named Random Superpixel Erasing on Moving Objects (RandomSEMO), together with a Moving Object Loss (MOLoss), both built on top of a simple lightweight autoencoder. RandomSEMO is applied to the moving object regions by randomly erasing their superpixels. It forces the network to pay attention to the foreground objects and learn the normal features more effectively, rather than simply predicting the future frame. Moreover, MOLoss urges the model to focus on learning normal objects captured within RandomSEMO by amplifying the loss on the pixels near the moving objects. The experimental results show that our model outperforms state-of-the-art methods on three benchmarks.
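
To make the two ideas in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. It rests on several assumptions that the abstract does not specify: moving-object regions are approximated by thresholded frame differencing, square grid cells stand in for real superpixels (a superpixel algorithm such as SLIC would produce the label map in practice), erased regions are filled with zeros, and the loss is a mean squared error whose per-pixel weight is amplified inside a dilated motion mask. All function names and parameters (`motion_mask`, `grid_segments`, `random_erase_on_moving`, `moving_object_weighted_mse`, `erase_prob`, `amplify`, `radius`) are hypothetical and chosen for illustration only.

```python
import numpy as np


def motion_mask(prev_frame, frame, thresh=0.1):
    """Assumed stand-in for moving-object detection: thresholded frame difference."""
    diff = np.abs(frame.astype(np.float64) - prev_frame.astype(np.float64))
    return diff > thresh


def grid_segments(height, width, cell=8):
    """Assumed stand-in for superpixels: an integer label map of square grid cells."""
    rows = np.arange(height) // cell
    cols = np.arange(width) // cell
    n_cols = (width + cell - 1) // cell
    return rows[:, None] * n_cols + cols[None, :]


def random_erase_on_moving(frame, segments, mask, erase_prob=0.5, rng=None):
    """Zero out a random subset of the segments that overlap the motion mask."""
    rng = np.random.default_rng() if rng is None else rng
    out = frame.copy()
    moving_labels = np.unique(segments[mask])  # segments touching a moving pixel
    chosen = moving_labels[rng.random(moving_labels.size) < erase_prob]
    erased = np.isin(segments, chosen)
    out[erased] = 0.0  # assumption: erased pixels are filled with zeros
    return out, erased


def dilate(mask, radius=2):
    """Binary dilation with a square window, built from shifted ORs."""
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    h, w = mask.shape
    out = np.zeros_like(mask)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def moving_object_weighted_mse(pred, target, mask, amplify=2.0, radius=2):
    """MSE whose per-pixel weight is `amplify` on and near moving pixels, 1 elsewhere."""
    weights = np.where(dilate(mask, radius), amplify, 1.0)
    return float(np.mean(weights * (pred - target) ** 2))


# Toy usage on two grayscale frames: a bright square appears in the second frame.
prev_frame = np.zeros((32, 32))
frame = np.zeros((32, 32))
frame[8:16, 8:16] = 1.0

mask = motion_mask(prev_frame, frame)
segments = grid_segments(32, 32, cell=8)
erased_frame, erased = random_erase_on_moving(
    frame, segments, mask, erase_prob=0.5, rng=np.random.default_rng(0)
)
loss = moving_object_weighted_mse(np.zeros_like(frame), frame, mask)
```

In a training loop of the kind the abstract describes, the erased frames would be the autoencoder input while the loss is computed against the unmodified future frame, so the network has to reconstruct the missing parts of moving objects rather than copy them. How the erased regions are filled and how strongly the loss is amplified are design choices this sketch fixes arbitrarily.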
