PSFormer: Point Transformer for 3D Salient Object Detection

Oct 28, 2022

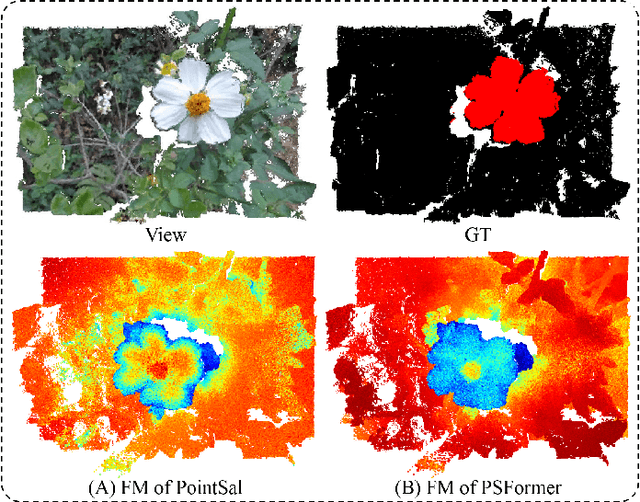

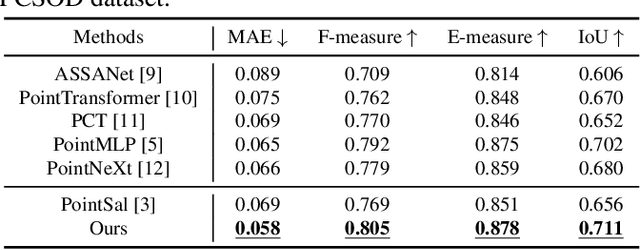

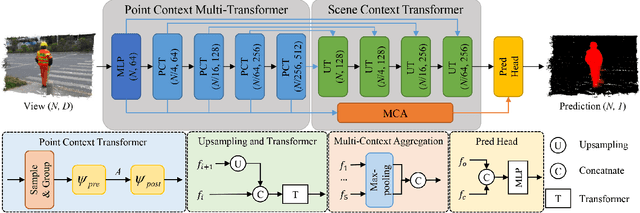

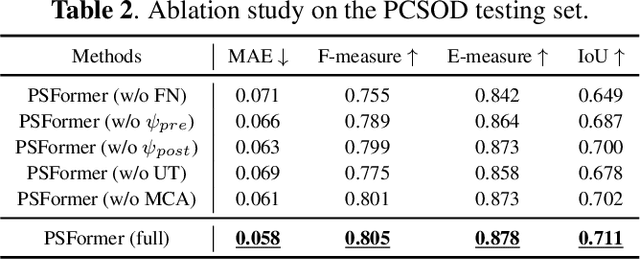

We propose PSFormer, an effective point transformer model for 3D salient object detection. PSFormer is an encoder-decoder network that uses transformers to model contextual information at multiple scales, both point-wise and scene-wise. In the encoder, a Point Context Transformer (PCT) module captures regional contextual features at the point level; PCT contains two different transformers that mine the relationships among points. In the decoder, a Scene Context Transformer (SCT) module learns context representations at the scene level; SCT contains both Upsampling-and-Transformer blocks and Multi-context Aggregation units, which integrate the global semantic features and the multi-level features from the encoder into the global scene context. Experiments show clear improvements of PSFormer over its competitors and validate that PSFormer is more robust in challenging cases such as small objects, multiple objects, and objects with complex structures.
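To make the idea of transformers modeling relationships among points concrete, here is a minimal sketch of generic scaled dot-product self-attention over per-point features. It is not the PCT or SCT module from the paper: the function names, the toy input, and the use of identity projections (queries, keys, and values are the raw features) are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features):
    """Scaled dot-product self-attention over per-point feature vectors.

    Assumption: queries, keys, and values are the features themselves
    (identity projections); a trained model would learn these projections.
    """
    d = len(features[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in features:
        # Similarity of this point's query to every point's key.
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in features]
        weights = softmax(scores)
        # Each output is a weighted average of all points' value vectors.
        out.append(
            [sum(w * v[j] for w, v in zip(weights, features)) for j in range(d)]
        )
    return out


# Hypothetical toy input: 4 points with 3-dimensional features.
points = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
contextual = self_attention(points)
```

Each output vector is a weighted average of all input features, so every point's representation absorbs context from the others, with similar points weighted more heavily. An encoder of the kind described above would presumably apply learned variants of this operation at several scales.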
