Probabilistic Model Learning and Long-term Prediction for Contact-rich Manipulation Tasks

Sep 11, 2019

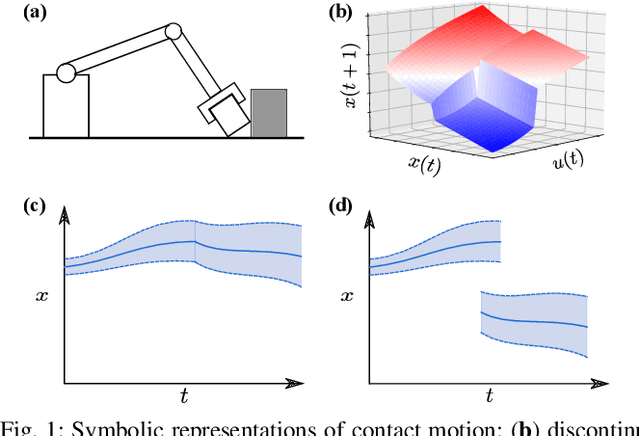

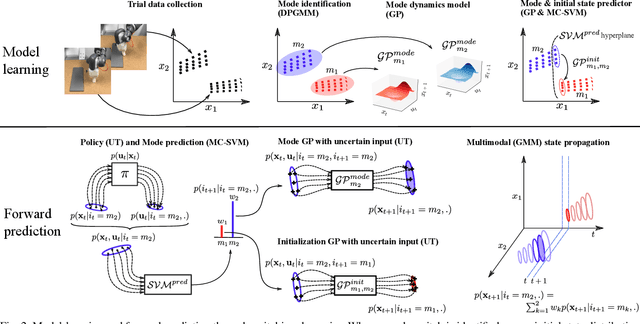

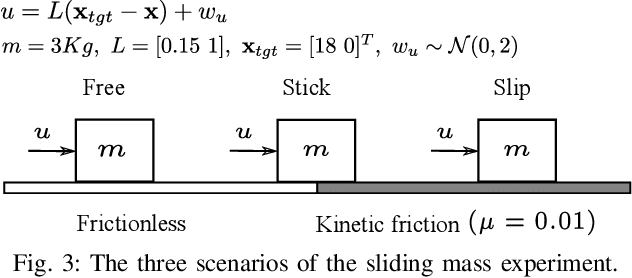

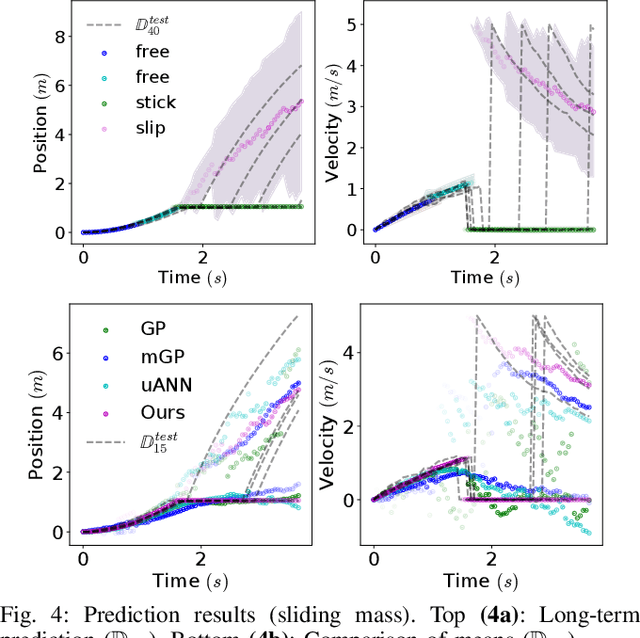

Learning dynamics models is an essential component of model-based reinforcement learning. The learned model can be used for multi-step-ahead predictions of the state variable, a process referred to as long-term prediction. Because the predictions are recursive, each step must be accurate enough to prevent significant error buildup. Accurate model learning in contact-rich manipulation is challenging due to the presence of varying dynamics regimes and discontinuities. Another challenge is the discontinuity in state evolution caused by impacting conditions. Building on the approach of representing contact dynamics by a system of switching models, we present a solution that also supports discontinuous state evolution. We evaluate our method on a contact-rich motion task involving a 7-DOF industrial robot, using a trajectory-centric policy, and show that it can effectively propagate state distributions through discontinuities.
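To make the idea of long-term prediction through a discontinuity concrete, the following is a minimal sketch and not the paper's method. It assumes a toy one-dimensional system with a hand-written free-motion model and a hand-written impact reset standing in for learned models, and it propagates a state distribution with sampled particles. All names and constants (`free_motion`, `impact_reset`, `RESTITUTION`, `DT`, and so on) are hypothetical choices for illustration.

```python
import numpy as np

DT = 0.01           # time step in seconds (assumed)
GRAVITY = 9.81      # m/s^2
RESTITUTION = 0.5   # hypothetical fraction of speed kept after impact


def free_motion(state):
    """Free-space mode: a stand-in for a learned dynamics model."""
    pos, vel = state[:, 0], state[:, 1]
    return np.stack([pos + vel * DT, vel - GRAVITY * DT], axis=1)


def impact_reset(state):
    """Discontinuous state jump applied at contact (velocity reverses)."""
    pos, vel = state[:, 0], state[:, 1]
    return np.stack([np.zeros_like(pos), -RESTITUTION * vel], axis=1)


def step(state):
    """One-step prediction: run the free mode, then reset particles that hit."""
    nxt = free_motion(state)
    hit = (nxt[:, 0] <= 0.0) & (nxt[:, 1] < 0.0)
    nxt[hit] = impact_reset(nxt[hit])
    return nxt


def long_term_prediction(init_mean, init_std, horizon, n_particles=500, seed=0):
    """Recursively feed predictions back in and track the state distribution."""
    rng = np.random.default_rng(seed)
    particles = init_mean + init_std * rng.standard_normal((n_particles, 2))
    means, stds = [], []
    for _ in range(horizon):
        particles = step(particles)  # each output becomes the next input
        means.append(particles.mean(axis=0))
        stds.append(particles.std(axis=0))
    return np.array(means), np.array(stds)


# State is (position, velocity); start about 0.5 m above the surface.
means, stds = long_term_prediction(
    init_mean=np.array([0.5, 0.0]),
    init_std=np.array([0.02, 0.05]),
    horizon=100,
)
```

Because particles reach the surface at slightly different time steps, the predicted distribution is temporarily split between pre-impact and post-impact states. This is one reason a single smooth model tends to struggle around contact, and particle sampling is used here only because it is simple to write down.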
