Pre-demosaic Graph-based Light Field Image Compression

Feb 15, 2021

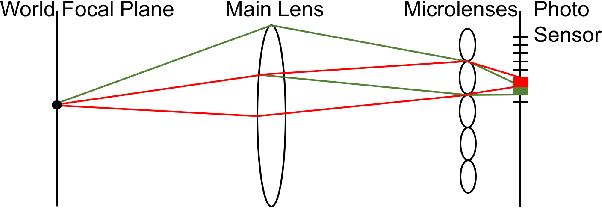

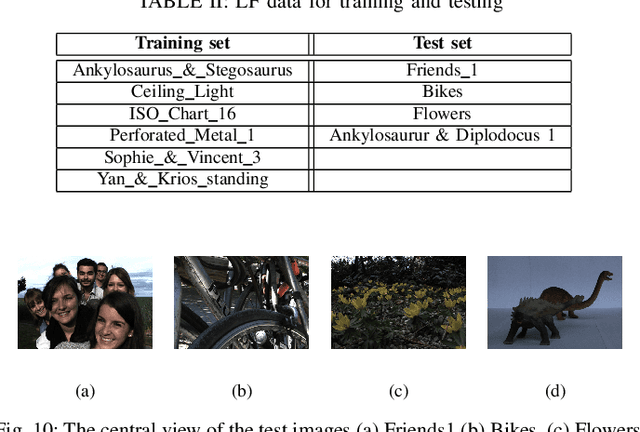

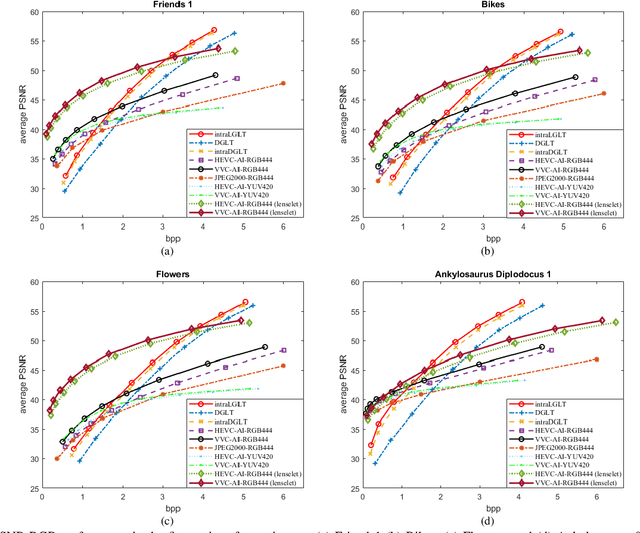

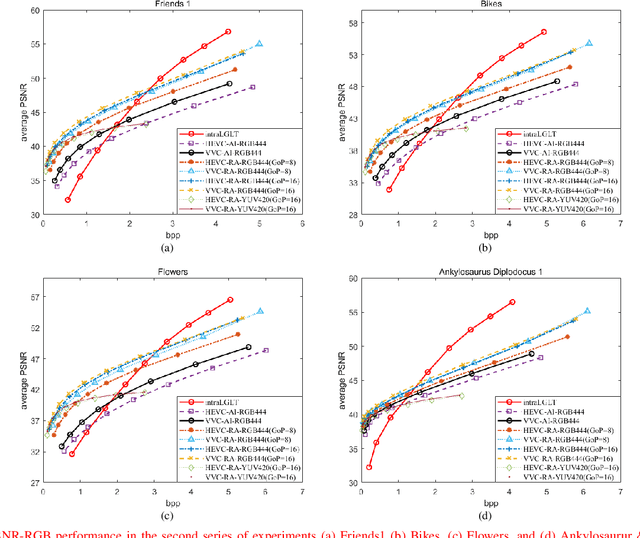

A plenoptic light field (LF) camera places an array of microlenses in front of an image sensor in order to separately capture the different directional rays arriving at an image pixel. With a conventional Bayer pattern, the data captured at each pixel is a single color component (R, G or B). The sensed data then undergoes demosaicking (interpolation of the RGB components per pixel) and conversion to an array of sub-aperture images (SAIs). In this paper, we propose a new LF image coding scheme based on the graph lifting transform (GLT), in which the acquired sensor data are coded in their original captured form without pre-processing. Specifically, we directly map the raw sensed color data to the SAIs, resulting in sparsely distributed color pixels on 2D grids, and perform demosaicking at the receiver after decoding. To exploit spatial correlation among the sparse pixels, we propose a novel intra-prediction scheme, in which the prediction kernel is determined according to the local gradient estimated from already coded neighboring pixel blocks. We then connect the pixels by forming a graph, modeling the prediction residuals statistically as a Gaussian Markov Random Field (GMRF). The optimal edge weights are computed via a graph learning method using a set of training SAIs. The residual data are encoded via a low-complexity GLT. Experiments show that at high PSNRs, which are important for archiving and instant storage scenarios, our method significantly outperforms a conventional light field image coding scheme that applies demosaicking followed by High Efficiency Video Coding (HEVC).
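The step of mapping raw sensed color data directly to the SAIs, without demosaicking, can be illustrated with a minimal sketch. It is not the paper's implementation and rests on simplifying assumptions that the abstract does not state: an idealized sensor whose microlenses form a square grid perfectly aligned with the pixel grid, each covering `lens_size` x `lens_size` pixels, and an RGGB Bayer layout. The function names `bayer_channel_map` and `raw_to_sparse_sais` are hypothetical.

```python
import numpy as np


def bayer_channel_map(height, width):
    """Channel id per sensor pixel (0=R, 1=G, 2=B), assuming an RGGB layout."""
    pattern = np.array([[0, 1],
                        [1, 2]])
    rows = np.arange(height) % 2
    cols = np.arange(width) % 2
    return pattern[rows[:, None], cols[None, :]]


def raw_to_sparse_sais(raw, lens_size):
    """Rearrange raw Bayer samples into sub-aperture images (no demosaicking).

    Assumes an idealized, axis-aligned square microlens grid (hypothetical).

    raw       : 2D array of shape (H * lens_size, W * lens_size)
    lens_size : number of sensor pixels under each microlens, per axis

    Returns (sais, channels), both of shape (lens_size, lens_size, H, W):
      sais[u, v, i, j]     is the raw sample at sensor pixel
                           (i * lens_size + u, j * lens_size + v)
      channels[u, v, i, j] is the color (0=R, 1=G, 2=B) of that sample
    """
    n = lens_size
    H, W = raw.shape[0] // n, raw.shape[1] // n
    channels = bayer_channel_map(raw.shape[0], raw.shape[1])

    def split(a):
        # (H*n, W*n) -> (H, n, W, n) -> (n, n, H, W)
        return a[:H * n, :W * n].reshape(H, n, W, n).transpose(1, 3, 0, 2)

    return split(raw), split(channels)
```

Under these assumptions, `channels[u, v] == 0` is the mask of red samples in SAI `(u, v)`. Each SAI position carries exactly one color component, so every color plane of an SAI is sparse, and a codec operating on this representation has to predict and transform irregularly placed pixels rather than a dense RGB grid.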
