Plug-and-Play Controllable Generation for Discrete Masked Models

Paper and Code

Oct 03, 2024

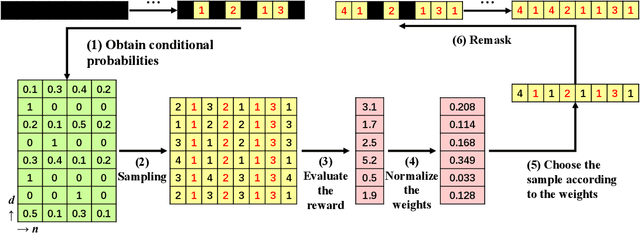

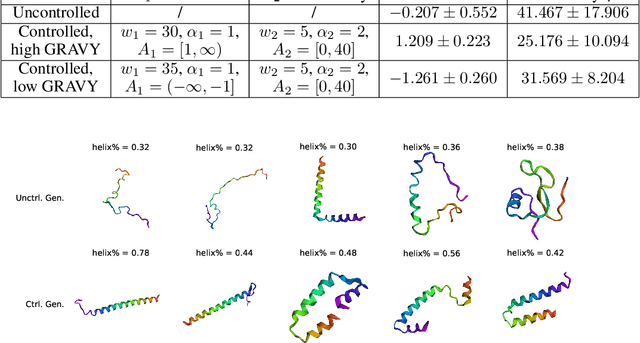

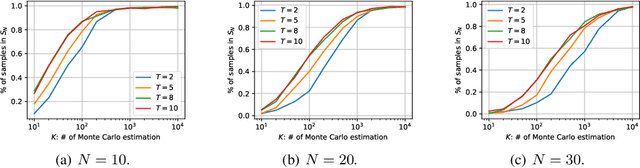

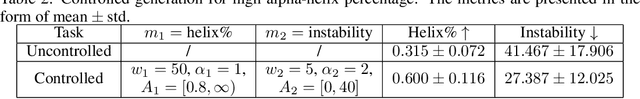

This article makes discrete masked models for the generative modeling of discrete data controllable. The goal is to generate samples of a discrete random variable that adhere to a posterior distribution, satisfy specific constraints, or optimize a reward function. This methodological development enables broad applications across downstream tasks such as class-specific image generation and protein design. Existing approaches to controllable generation with masked models typically rely on task-specific fine-tuning or additional modifications, which can be inefficient and resource-intensive. To overcome these limitations, we propose a novel plug-and-play framework based on importance sampling that bypasses the need to train a conditional score. Our framework is agnostic to the choice of control criteria, requires no gradient information, and is well-suited for tasks such as posterior sampling, Bayesian inverse problems, and constrained generation. We demonstrate the effectiveness of our approach through extensive experiments, showcasing its versatility across multiple domains, including protein design.
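To illustrate the general idea of gradient-free control via importance sampling, here is a minimal sketch. It is not the paper's algorithm: it shows plain self-normalized importance resampling, where an unconditional model serves as the proposal and a black-box reward reweights its samples so that the selected sample approximately follows a target proportional to p(x)·exp(reward(x)). The toy "model" (independent categorical distributions per position) and the names `sample_unconditional`, `importance_resample`, and `count_zeros` are all hypothetical stand-ins chosen for illustration.

```python
import numpy as np

def sample_unconditional(probs, rng):
    """Draw one token sequence from a toy unconditional model.

    probs has shape (seq_len, vocab_size); each row is a categorical
    distribution. This stands in for a pretrained masked model (assumption).
    """
    return np.array([rng.choice(len(p), p=p) for p in probs])

def importance_resample(probs, reward, num_candidates, rng):
    """Self-normalized importance resampling toward p(x) * exp(reward(x)).

    The proposal is the unconditional model itself, so the importance
    weight of each candidate is exp(reward(x)). Only reward evaluations
    are needed; no gradients and no retraining.
    """
    candidates = [sample_unconditional(probs, rng) for _ in range(num_candidates)]
    log_w = np.array([reward(x) for x in candidates], dtype=float)
    w = np.exp(log_w - log_w.max())  # subtract the max for numerical stability
    w /= w.sum()                     # normalize weights to sum to 1
    idx = rng.choice(num_candidates, p=w)
    return candidates[idx], w

def count_zeros(x):
    """Toy reward (assumption): favor sequences containing many tokens equal to 0."""
    return 3.0 * float(np.sum(x == 0))

rng = np.random.default_rng(0)
probs = np.full((5, 4), 0.25)  # uniform over 4 tokens at each of 5 positions
sample, weights = importance_resample(probs, count_zeros, num_candidates=32, rng=rng)
print(sample, weights.max())
```

The reward is treated as a black box, which is what makes this style of control plug-and-play: swapping the control criterion only means swapping the `reward` function. The sketch assumes finite rewards; a hard constraint encoded as a reward of negative infinity would need extra handling for the case where every candidate violates it.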
