PL-Net: Progressive Learning Network for Medical Image Segmentation


Oct 27, 2021

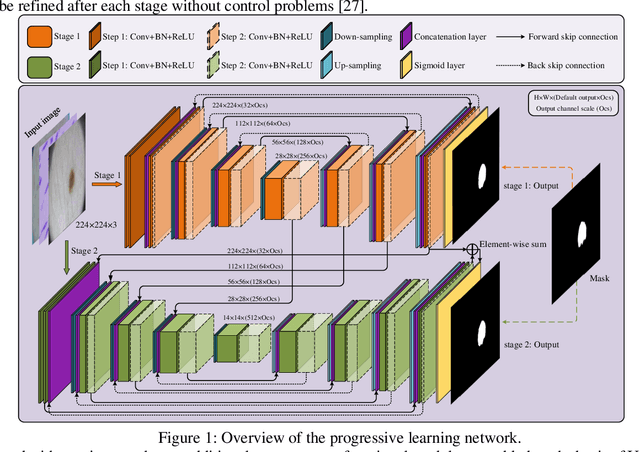

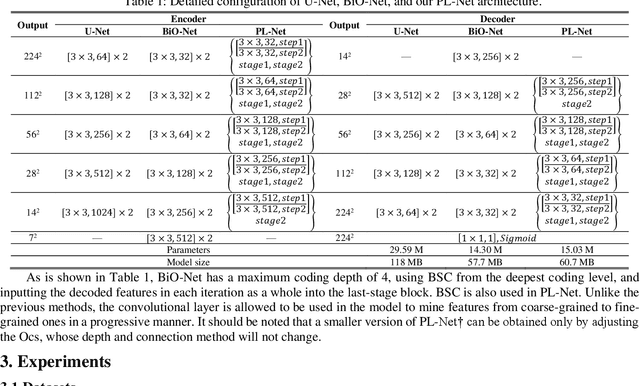

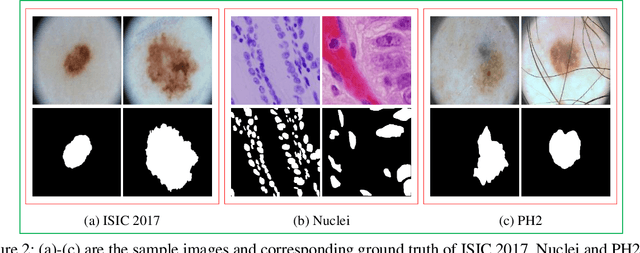

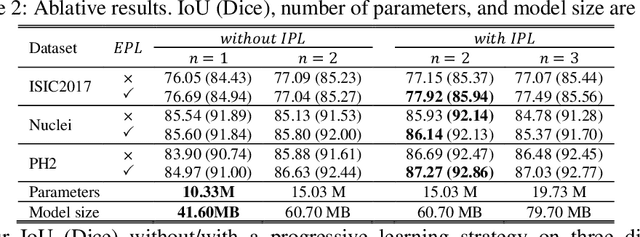

In recent years, segmentation methods based on deep convolutional neural networks (CNNs) have achieved state-of-the-art results on many medical image analysis tasks. However, most of these approaches improve performance by optimizing the structure of U-Net or adding new functional modules to it, which ignores the complementarity and fusion of coarse-grained and fine-grained semantic information. To address this problem, we propose a medical image segmentation framework called the progressive learning network (PL-Net), which comprises internal progressive learning (IPL) and external progressive learning (EPL). PL-Net has the following advantages: (1) IPL divides feature extraction into two "steps", which mixes receptive fields of different sizes and captures semantic information from coarse to fine granularity without introducing additional parameters; (2) EPL divides the training process into two "stages" to optimize parameters, fusing the coarse-grained information from the earlier stage with the fine-grained information from the later stage. We evaluate our method on different medical image analysis tasks, and the results show that PL-Net achieves better segmentation performance than the state-of-the-art U-Net and its variants.
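The abstract does not say how IPL obtains receptive fields of different sizes without extra parameters. As a rough illustration only, and not the authors' method, the sketch below assumes one generic mechanism: applying the same kernel in two successive steps. The helper names (`conv1d_same`, `receptive_field`, `support_width`) and the 1D toy signal are hypothetical and chosen for brevity.

```python
# Illustrative sketch only: weight reuse is an ASSUMED mechanism,
# not something the abstract confirms about IPL.

def conv1d_same(signal, kernel):
    """Zero-padded 1D correlation that keeps the input length."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(kernel[j] * padded[i + j] for j in range(len(kernel)))
        for i in range(len(signal))
    ]

def receptive_field(kernel_size, num_steps):
    """Receptive field of num_steps stacked stride-1 convolutions."""
    return num_steps * (kernel_size - 1) + 1

def support_width(response):
    """Width of the span between the first and last non-zero entries."""
    nonzero = [i for i, v in enumerate(response) if v != 0.0]
    return nonzero[-1] - nonzero[0] + 1 if nonzero else 0

kernel = [1.0, 1.0, 1.0]                       # one set of shared weights
impulse = [0.0] * 4 + [1.0] + [0.0] * 4        # single active input position

step1 = conv1d_same(impulse, kernel)           # first step: 3-wide response
step2 = conv1d_same(step1, kernel)             # second step, same weights: 5-wide
mixed = [a + b for a, b in zip(step1, step2)]  # combine both receptive-field sizes

print(support_width(step1), support_width(step2))
print(receptive_field(3, 1), receptive_field(3, 2))
```

Because both steps reuse `kernel`, the parameter count stays at three weights while the second step responds to a wider input window; the summed `mixed` signal carries contributions from both window sizes.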
