Pisces: Efficient Federated Learning via Guided Asynchronous Training


Jun 18, 2022

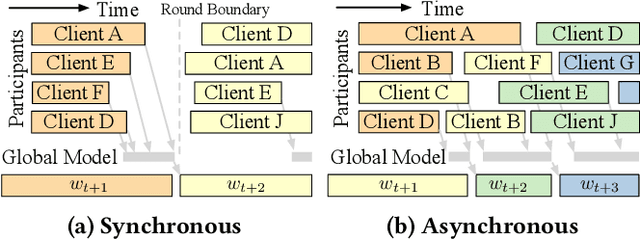

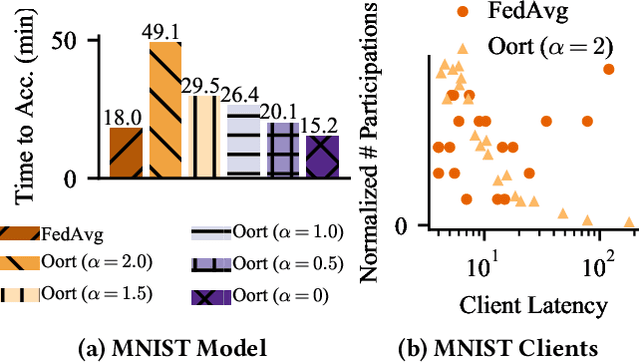

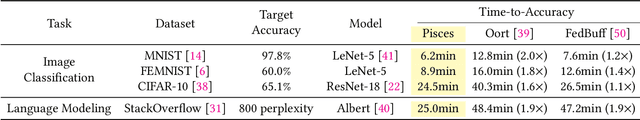

Federated learning (FL) is typically performed in a synchronous parallel manner, where the involvement of a slow client delays a training iteration. Current FL systems employ a participant selection strategy to select fast clients with quality data in each iteration. However, this is not always possible in practice, and the selection strategy often has to navigate an unpleasant trade-off between the speed and the data quality of clients. In this paper, we present Pisces, an asynchronous FL system with intelligent participant selection and model aggregation for accelerated training. To avoid incurring excessive resource cost and stale training computation, Pisces uses a novel scoring mechanism to identify suitable clients to participate in a training iteration. It also adapts the pace of model aggregation to dynamically bound the progress gap between the selected clients and the server, with a provable convergence guarantee in a smooth non-convex setting. We have implemented Pisces in an open-source FL platform called Plato, and evaluated its performance in large-scale experiments with popular vision and language models. Pisces outperforms the state-of-the-art synchronous and asynchronous schemes, accelerating the time-to-accuracy by up to 2.0x and 1.9x, respectively.
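The idea of bounding the progress gap between running clients and the server can be illustrated with a small toy sketch. This is not the Pisces algorithm: the abstract does not specify its scoring or pacing rules, so the names `Update`, `can_aggregate`, and `aggregate`, the version-counter notion of staleness, and the `1 / (1 + staleness)` weighting are all assumptions chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Update:
    value: float       # stand-in for a model delta (a real system would use tensors)
    base_version: int  # server model version the client started training from


def staleness(server_version: int, base_version: int) -> int:
    """Number of server aggregations that happened since the client started."""
    return server_version - base_version


def can_aggregate(server_version: int, running_base_versions: list[int], bound: int) -> bool:
    """Hypothetical pacing rule: aggregating bumps the server version by one,
    so allow it only if no still-running client would then lag by more than
    `bound` versions."""
    next_version = server_version + 1
    return all(next_version - v <= bound for v in running_base_versions)


def aggregate(updates: list[Update], server_version: int) -> float:
    """Staleness-discounted average (an assumed weighting, not the paper's)."""
    weights = [1.0 / (1 + staleness(server_version, u.base_version)) for u in updates]
    total = sum(weights)
    return sum(w * u.value for w, u in zip(weights, updates)) / total


if __name__ == "__main__":
    # Two clients are still training from versions 4 and 3; the server is at version 5.
    print(can_aggregate(5, [4, 3], bound=3))
    # A fresh update and a one-version-stale update are combined.
    print(aggregate([Update(1.0, 5), Update(3.0, 4)], server_version=5))
```

In this sketch the server delays aggregation whenever advancing the model version would push a running client past the staleness bound, which is one simple way to keep stale computation in check.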
