Pervasive Machine Learning for Smart Radio Environments Enabled by Reconfigurable Intelligent Surfaces


May 08, 2022

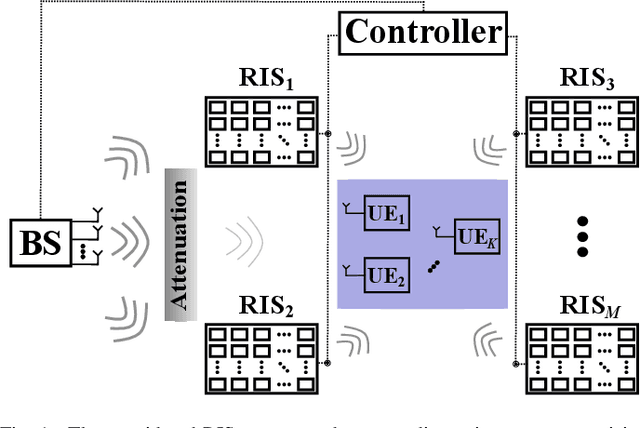

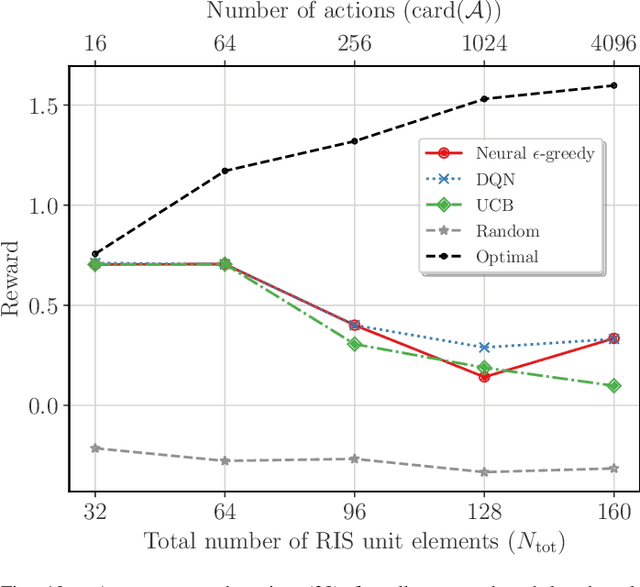

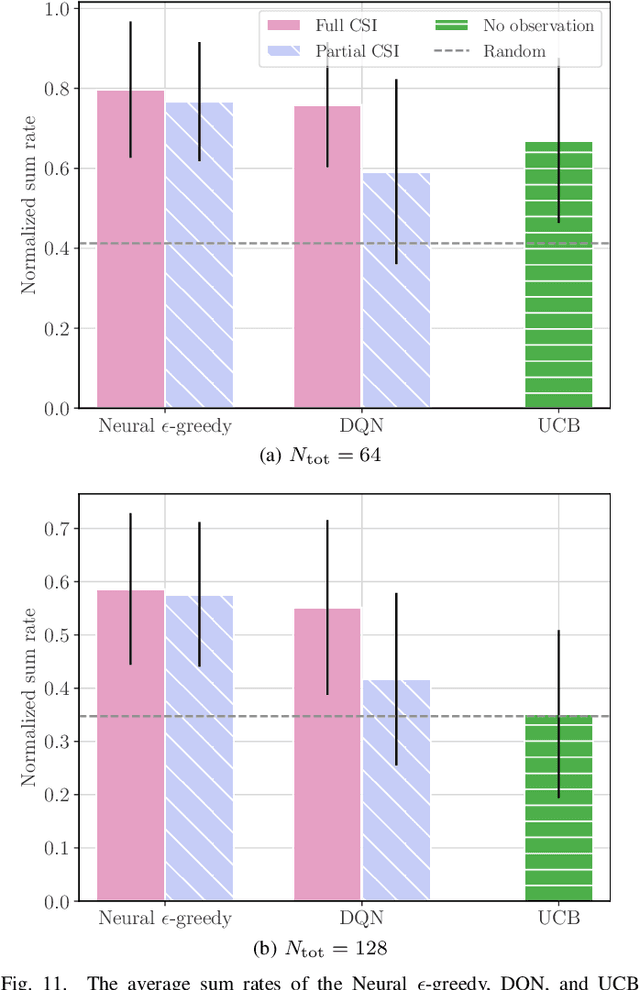

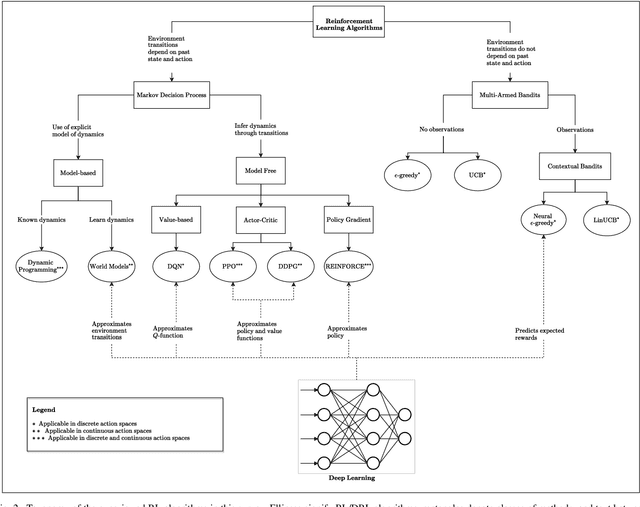

The emerging technology of Reconfigurable Intelligent Surfaces (RISs) is envisioned as an enabler of smart wireless environments. RISs offer a highly scalable, low-cost, hardware-efficient, and almost energy-neutral solution for dynamically controlling the propagation of electromagnetic signals over the wireless medium, ultimately providing increased environmental intelligence for diverse operational objectives. A major challenge with the envisioned dense deployment of RISs in such reconfigurable radio environments is efficiently configuring multiple metasurfaces that have limited, or even no, computing hardware. In this paper, we consider multi-user, multi-RIS-empowered wireless systems and present a thorough survey of online machine learning approaches for orchestrating their various tunable components. Focusing on sum-rate maximization as a representative design objective, we present a comprehensive problem formulation based on Deep Reinforcement Learning (DRL). We detail the correspondences between the parameters of the wireless system and the DRL terminology, devise generic algorithmic steps for training and deploying the artificial neural network, and discuss their implementation details. We also present further practical considerations for multi-RIS-empowered wireless communications in the sixth-generation (6G) era, along with some key open research challenges. Departing from the DRL-based status quo, we exploit the fact that the future states of the wireless environment are independent of how the system design parameters are configured, and present efficient multi-armed bandit approaches. Numerical results show that their sum-rate performance exceeds that of random configurations and comes sufficiently close to that of the conventional Deep Q-Network (DQN) algorithm, at lower implementation complexity.
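The bandit idea rests on one observation: choosing an RIS configuration now does not change the next channel state, so each round reduces to picking an "arm" and observing a sum-rate reward, with no state transitions to model as in DRL. The sketch below illustrates that reduction under a toy setup that is *not* the paper's system model. It assumes a single-antenna base station, K users on orthogonal resources (so there is no inter-user interference), one RIS with a small hypothetical codebook of 1-bit phase configurations, and Rician-like channels: fixed line-of-sight parts plus small random fading per round. It uses epsilon-greedy as one simple bandit strategy, which is our illustrative choice and not necessarily the algorithm used in the paper. All names (`codebook`, `sum_rate`, `epsilon_greedy`, `random_baseline`) are hypothetical.

```python
import cmath
import math
import random

# Toy, hypothetical setup (assumption, not the paper's model): one single-antenna
# base station, K single-antenna users on orthogonal resources, and one RIS with
# N elements whose phases are picked from a small codebook of candidate configs.
K, N, NUM_CONFIGS, ROUNDS = 3, 8, 16, 3000
SNR = 10.0  # transmit power over noise power, linear scale (assumed)

rng = random.Random(0)

def cgauss(scale):
    """Circularly symmetric complex Gaussian sample with variance scale**2."""
    return complex(rng.gauss(0, scale), rng.gauss(0, scale)) / math.sqrt(2)

# Fixed line-of-sight components; every round adds small random fading on top.
direct_los = [cgauss(0.3) for _ in range(K)]
cascade_los = [[cgauss(1.0) for _ in range(N)] for _ in range(K)]

# Hypothetical codebook of 1-bit RIS configurations (phase 0 or pi per element).
codebook = [[rng.choice((0.0, math.pi)) for _ in range(N)] for _ in range(NUM_CONFIGS)]

def sum_rate(phases):
    """Sum-rate (bit/s/Hz) for a fresh channel realization under one RIS config.

    The fading is drawn independently of the chosen phases, which is exactly
    the property that lets us treat configuration selection as a bandit.
    """
    total = 0.0
    for k in range(K):
        h = direct_los[k] + cgauss(0.1)  # direct BS-user link
        for n in range(N):
            g = cascade_los[k][n] + cgauss(0.1)  # cascaded BS-RIS-user link
            h += g * cmath.exp(1j * phases[n])
        total += math.log2(1 + SNR * abs(h) ** 2)
    return total

def epsilon_greedy(epsilon=0.1):
    """Epsilon-greedy bandit over codebook entries; returns (avg reward, best arm)."""
    counts = [0] * NUM_CONFIGS
    means = [0.0] * NUM_CONFIGS
    total = 0.0
    for t in range(ROUNDS):
        if t < NUM_CONFIGS:
            arm = t  # play every arm once to initialize the estimates
        elif rng.random() < epsilon:
            arm = rng.randrange(NUM_CONFIGS)  # explore
        else:
            arm = max(range(NUM_CONFIGS), key=lambda a: means[a])  # exploit
        reward = sum_rate(codebook[arm])
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # incremental mean
        total += reward
    return total / ROUNDS, max(range(NUM_CONFIGS), key=lambda a: means[a])

def random_baseline():
    """Average sum-rate when a codebook entry is picked uniformly at random."""
    return sum(sum_rate(rng.choice(codebook)) for _ in range(ROUNDS)) / ROUNDS

bandit_avg, best_arm = epsilon_greedy()
random_avg = random_baseline()
print(f"epsilon-greedy: {bandit_avg:.2f} bit/s/Hz, "
      f"random: {random_avg:.2f} bit/s/Hz, best arm: {best_arm}")
```

In this toy setting the bandit concentrates on high-reward configurations and therefore beats random selection. Because the environment has no state dynamics, it needs only per-arm running means instead of a trained Q-network. Replacing epsilon-greedy with UCB or Thompson sampling would fit the same loop.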
