PC-GANs: Progressive Compensation Generative Adversarial Networks for Pan-sharpening


Jul 29, 2022

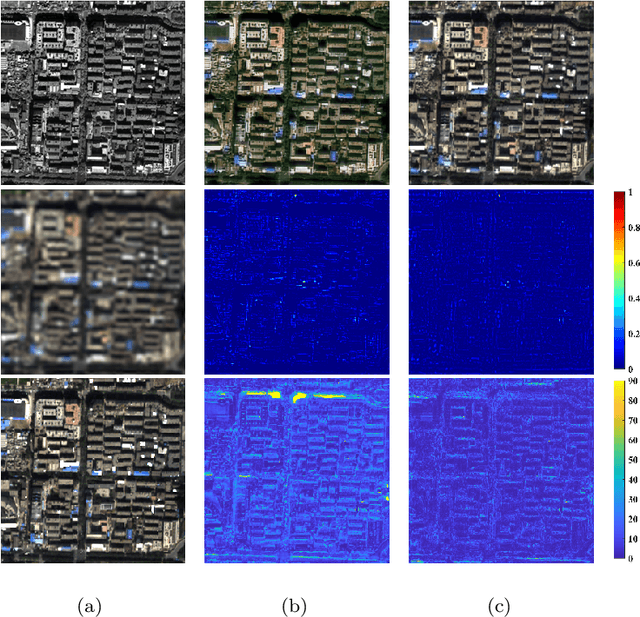

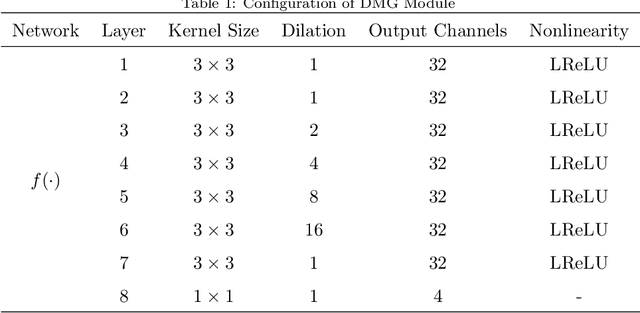

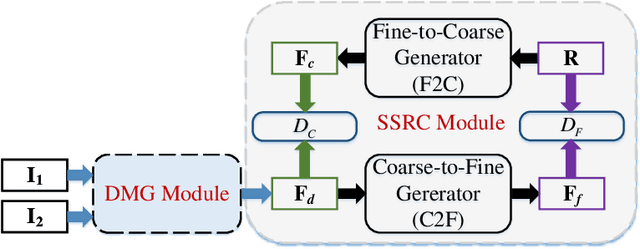

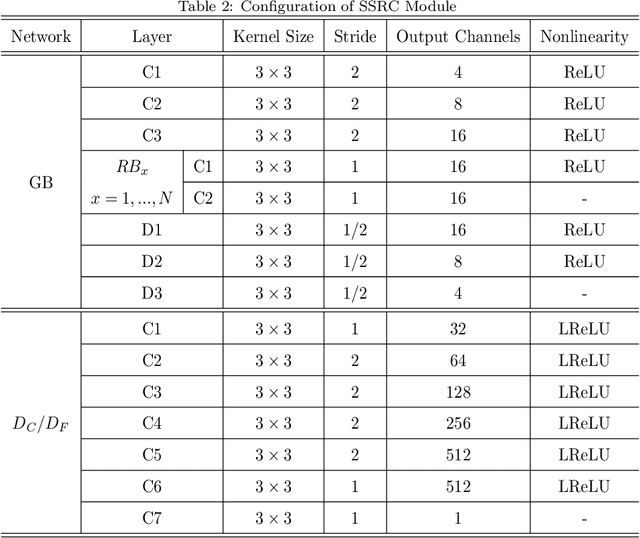

The fusion of multispectral (MS) and panchromatic (PAN) images is commonly referred to as pan-sharpening. Most available deep learning-based pan-sharpening methods sharpen the MS image in a single step, so their quality depends strongly on the reconstruction ability of the network. However, remote sensing images vary widely; as a result, these one-step methods are vulnerable to error accumulation and struggle to preserve both spatial details and spectral information. In this paper, we propose a novel two-step model for pan-sharpening that sharpens the MS image through progressive compensation of the spatial and spectral information. First, a deep multiscale guided generative adversarial network preliminarily enhances the spatial resolution of the MS image. Starting from this pre-sharpened MS image in the coarse domain, our approach then progressively refines the spatial and spectral residuals with a pair of generative adversarial networks (GANs) that have reverse architectures. The whole model therefore consists of three GANs, and, based on this architecture, a joint compensation loss function is designed so that all three GANs can be trained simultaneously. Moreover, the proposed spatial-spectral residual compensation structure can be applied to other pan-sharpening methods to further enhance their fusion results. Extensive experiments on different datasets demonstrate the effectiveness and efficiency of the proposed method.
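The two-step data flow described in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: each of the three GAN generators is replaced by a simple hand-written stand-in, and every function name, the 3x3 blur, the order of the two residual stages, and the L1-based `joint_loss` with its weights are assumptions made only for illustration. The abstract specifies neither the network architectures nor the exact loss terms.

```python
import numpy as np

def upsample_nearest(img, scale):
    """Upsample an (H, W, C) image by an integer factor via pixel repetition."""
    return img.repeat(scale, axis=0).repeat(scale, axis=1)

def box_blur(img):
    """3x3 mean filter with edge padding for an (H, W) array."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0

def pre_sharpen(ms, pan, scale):
    """Step 1 stand-in (hypothetical): coarse spatial enhancement of the MS image."""
    up = upsample_nearest(ms, scale).astype(float)
    detail = pan - box_blur(pan)       # high-frequency PAN detail
    return up + detail[..., None]      # inject the same detail into every band

def spatial_residual(coarse, pan):
    """Step 2a stand-in (hypothetical): pull the band-mean intensity toward PAN."""
    intensity = coarse.mean(axis=2)
    return (pan - intensity)[..., None] * np.ones(coarse.shape[2])

def spectral_residual(refined, ms, scale):
    """Step 2b stand-in (hypothetical): restore the low-resolution band values."""
    h, w, c = ms.shape
    down = refined.reshape(h, scale, w, scale, c).mean(axis=(1, 3))
    return upsample_nearest(ms - down, scale)

def fuse(ms, pan, scale=4):
    """Run the coarse step, then add the two residuals one after the other."""
    coarse = pre_sharpen(ms, pan, scale)
    refined = coarse + spatial_residual(coarse, pan)
    fused = refined + spectral_residual(refined, ms, scale)
    return coarse, refined, fused

def joint_loss(coarse, refined, fused, target, weights=(1.0, 1.0, 1.0)):
    """Assumed joint objective: weighted sum of per-stage L1 errors.

    Adversarial terms are omitted because the abstract does not define them.
    """
    stages = (coarse, refined, fused)
    return float(sum(w * np.abs(s - target).mean() for w, s in zip(weights, stages)))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.random((4, 4, 3))      # low-resolution multispectral image
    pan = rng.random((16, 16))      # high-resolution panchromatic image
    coarse, refined, fused = fuse(ms, pan, scale=4)
    print(fused.shape)
```

Summing the per-stage errors into one scalar is what would let all stages receive gradients in a single backward pass, which is the idea behind training the three networks simultaneously; the actual weighting and loss terms would have to be taken from the paper itself.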
