Online Multiple Pedestrian Tracking using Deep Temporal Appearance Matching Association

Jul 01, 2019

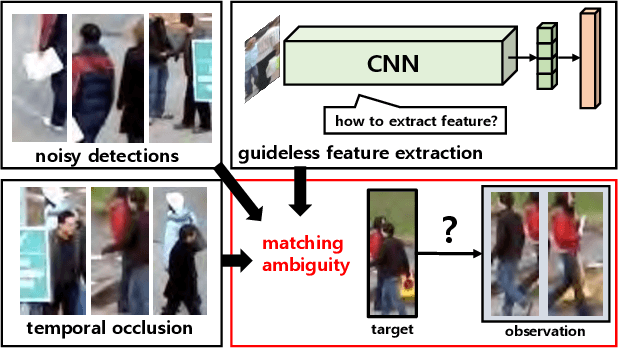

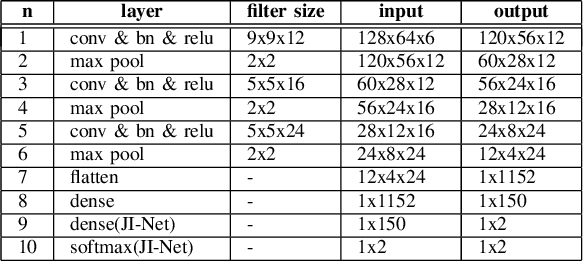

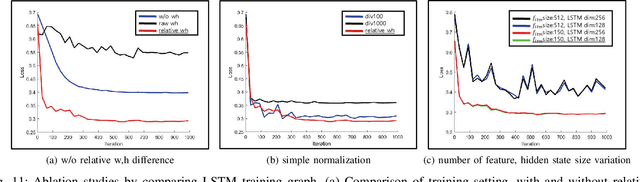

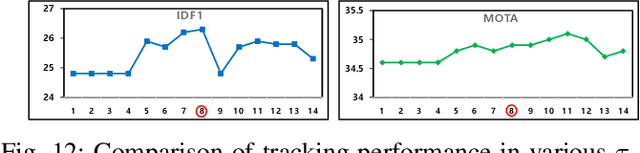

In online multiple pedestrian tracking, it is of great importance to construct a reliable cost matrix for assigning observations to tracks. Each element of the cost matrix is computed with a similarity measure. Many previous works have proposed their own similarity calculation methods, consisting of a geometric model (e.g., bounding box coordinates) and an appearance model. In particular, the appearance model carries higher-dimensional information than the geometric model. Thanks to the recent success of deep learning based methods, handling high-dimensional appearance information has become possible. Among many deep networks, a Siamese network with triplet loss is popularly adopted as an appearance feature extractor. Since the Siamese network extracts the features of each input independently, it is possible to adaptively model tracks (e.g., by linear update). However, it is not suitable for the multi-object setting, which requires comparison with other inputs. In this paper, we propose a novel track appearance modeling method based on a joint inference network to address this issue. The proposed method enables the comparison of two inputs to be used for adaptive appearance modeling. It contributes to disambiguating target-observation matching and consolidating identity consistency. Extensive experimental results support the effectiveness of our method.
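To make the setting concrete, the following is a minimal sketch of the baseline pipeline the abstract describes: a cost matrix built from a geometric similarity and an appearance similarity, an assignment of observations to tracks, and a linear update of the track appearance. It is not the paper's joint inference network. The IoU and cosine measures, the weight `w_app`, the update rate `alpha`, the dictionary layout, and all function names are assumptions chosen for illustration, and a brute-force search stands in for the Hungarian algorithm that a real tracker would more likely use.

```python
from itertools import permutations
from math import sqrt


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def cosine(u, v):
    """Cosine similarity of two independently extracted feature vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    nu = sqrt(sum(x * x for x in u))
    nv = sqrt(sum(y * y for y in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def cost_matrix(tracks, detections, w_app=0.5):
    """cost[i][j] = 1 - weighted similarity of track i and detection j.

    w_app is a hypothetical weight balancing appearance against geometry.
    """
    return [
        [
            1.0 - (w_app * cosine(t["feat"], d["feat"])
                   + (1.0 - w_app) * iou(t["box"], d["box"]))
            for d in detections
        ]
        for t in tracks
    ]


def assign(cost):
    """Brute-force minimum-cost assignment (stand-in for the Hungarian
    algorithm). Assumes there are at least as many detections as tracks
    and is only practical for a handful of objects."""
    n_tracks, n_dets = len(cost), len(cost[0])
    best = min(
        permutations(range(n_dets), n_tracks),
        key=lambda p: sum(cost[i][j] for i, j in enumerate(p)),
    )
    return list(enumerate(best))


def linear_update(track_feat, det_feat, alpha=0.9):
    """Linear (moving-average) update of a track's appearance feature."""
    return [alpha * t + (1.0 - alpha) * d for t, d in zip(track_feat, det_feat)]


# Toy data: two tracks and two detections listed in swapped order.
tracks = [
    {"box": (0, 0, 10, 20), "feat": [1.0, 0.0]},
    {"box": (30, 0, 40, 20), "feat": [0.0, 1.0]},
]
detections = [
    {"box": (31, 0, 41, 20), "feat": [0.1, 0.9]},
    {"box": (1, 0, 11, 20), "feat": [0.9, 0.1]},
]

matches = assign(cost_matrix(tracks, detections))
for ti, di in matches:
    tracks[ti]["feat"] = linear_update(tracks[ti]["feat"], detections[di]["feat"])
print(matches)  # [(0, 1), (1, 0)]
```

The linear update works here only because each feature vector is computed from a single input, which is the property of Siamese extractors the abstract points to. A network that scores a pair of inputs jointly produces no such per-input vector to average, which is the gap the proposed track appearance modeling is meant to address.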
