On the use of Deep Autoencoders for Efficient Embedded Reinforcement Learning

Paper and Code

Mar 25, 2019

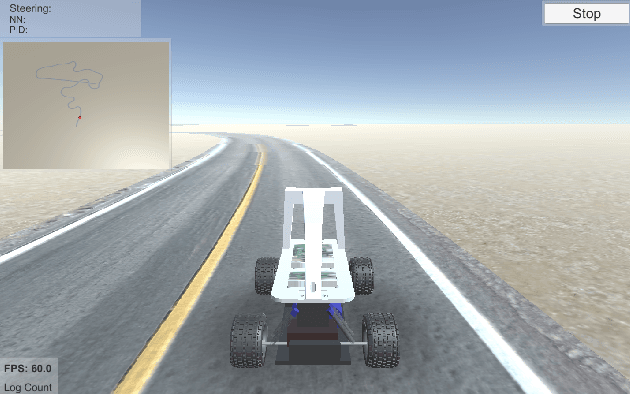

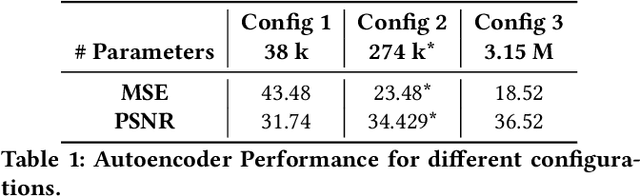

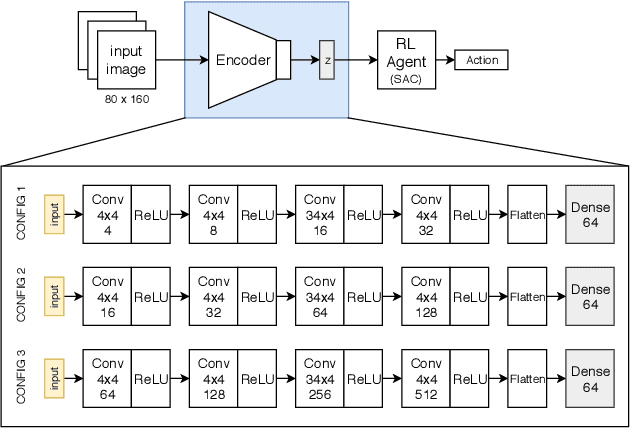

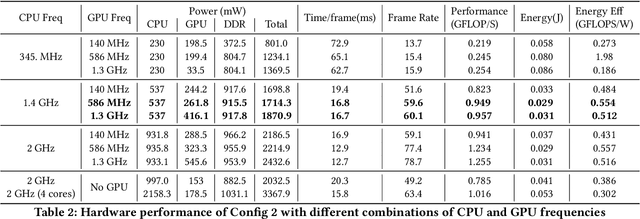

In autonomous embedded systems, it is often vital to reduce the amount of actions taken in the real world and energy required to learn a policy. Training reinforcement learning agents from high dimensional image representations can be very expensive and time consuming. Autoencoders are deep neural network used to compress high dimensional data such as pixelated images into small latent representations. This compression model is vital to efficiently learn policies, especially when learning on embedded systems. We have implemented this model on the NVIDIA Jetson TX2 embedded GPU, and evaluated the power consumption, throughput, and energy consumption of the autoencoders for various CPU/GPU core combinations, frequencies, and model parameters. Additionally, we have shown the reconstructions generated by the autoencoder to analyze the quality of the generated compressed representation and also the performance of the reinforcement learning agent. Finally, we have presented an assessment of the viability of training these models on embedded systems and their usefulness in developing autonomous policies. Using autoencoders, we were able to achieve 4-5 $\times$ improved performance compared to a baseline RL agent with a convolutional feature extractor, while using less than 2W of power.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge