On the Equity of Nuclear Norm Maximization in Unsupervised Domain Adaptation

Apr 12, 2022

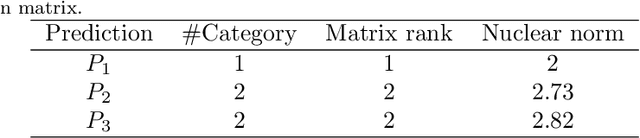

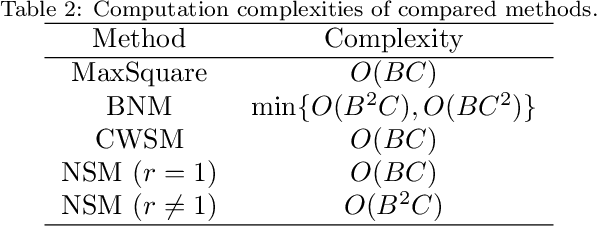

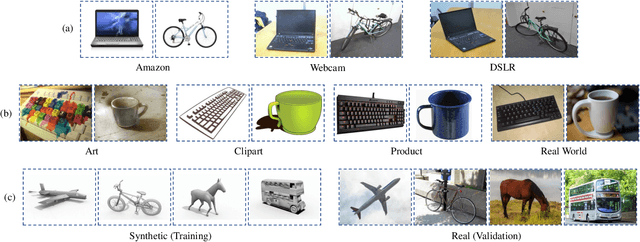

Nuclear norm maximization has been shown empirically to enhance the transferability of unsupervised domain adaptation (UDA) models. In this paper, we identify a new property termed equity, which indicates how balanced the predicted classes are, to explain theoretically why nuclear norm maximization is effective for UDA. Building on this, we offer a new discriminability-and-equity maximization paradigm built on the squares loss, such that predictions are equalized explicitly. To verify its feasibility and flexibility, we propose two new losses, Class Weighted Squares Maximization (CWSM) and Normalized Squares Maximization (NSM), which maximize both predictive discriminability and equity at the class level and the sample level, respectively. Importantly, we theoretically relate both losses to equity maximization under mild conditions, and we empirically suggest the importance of predictive equity in UDA. Moreover, the equity constraints in both losses are very efficient to realize. Experiments on cross-domain image classification with three popular benchmark datasets show that both CWSM and NSM outperform their corresponding counterparts.
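As a rough illustration of why the nuclear norm is sensitive to class balance while a plain squares measure is not, the sketch below compares two confident prediction matrices: one where every sample is assigned to the same class, and one where samples are spread evenly over the classes. This is not the paper's CWSM or NSM loss, whose definitions are not given in the abstract. The function names are hypothetical, and the entropy of the mean prediction is used only as an assumed stand-in for "equity".

```python
import numpy as np

def nuclear_norm(P):
    # Sum of singular values of the (batch x classes) prediction matrix.
    return float(np.linalg.svd(P, compute_uv=False).sum())

def squares(P):
    # Sum of squared entries (squared Frobenius norm); rewards confident rows.
    return float((P ** 2).sum())

def class_balance_entropy(P):
    # Entropy of the batch-averaged prediction: an assumed proxy for equity,
    # not the paper's definition.
    q = P.mean(axis=0)
    q = q[q > 0]
    return float(-(q * np.log(q)).sum())

B, C = 12, 3  # hypothetical batch size and number of classes

# Every sample confidently predicted as class 0 (collapsed predictions).
collapsed = np.zeros((B, C))
collapsed[:, 0] = 1.0

# Equally confident predictions, spread evenly over the C classes.
balanced = np.eye(C)[np.arange(B) % C]

for name, P in [("collapsed", collapsed), ("balanced", balanced)]:
    print(name, squares(P), nuclear_norm(P), class_balance_entropy(P))
```

Both matrices are equally confident, so the squares measure is identical (12 in each case). The nuclear norm, however, is sqrt(12) for the collapsed matrix and sqrt(12 * 3) = 6 for the balanced one, so maximizing it also favors balanced class predictions. A plain squares measure carries no such preference on its own.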
