On Jointly Optimizing Partial Offloading and SFC Mapping: A Cooperative Dual-agent Deep Reinforcement Learning Approach


May 20, 2022

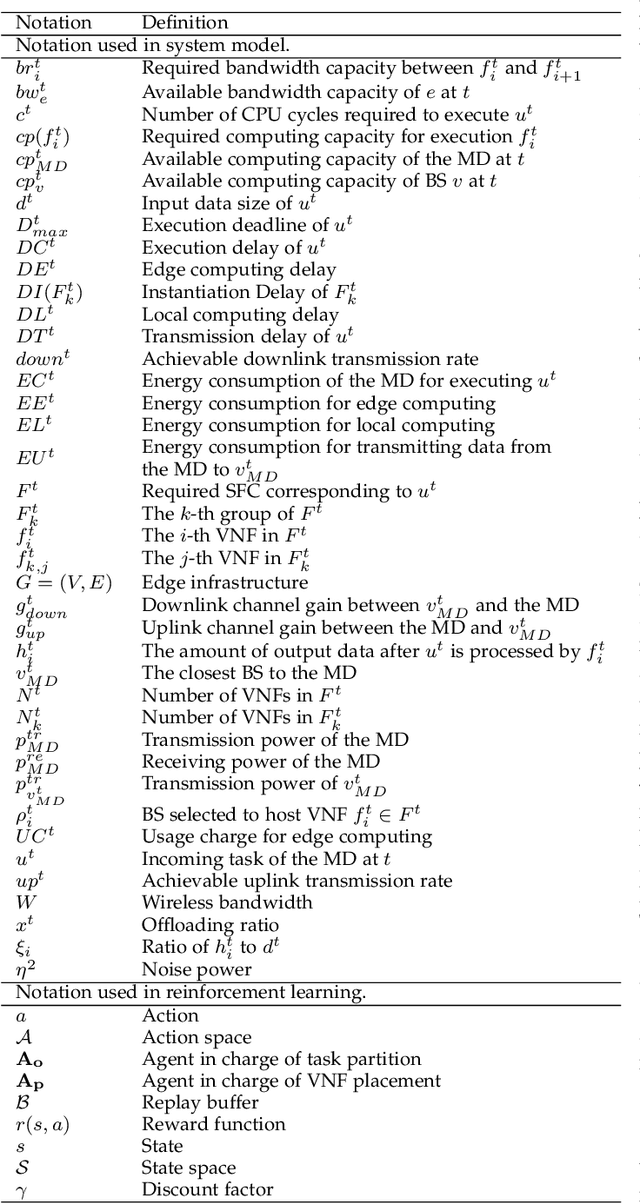

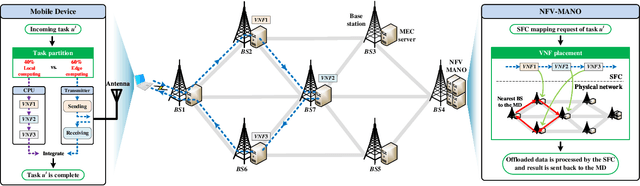

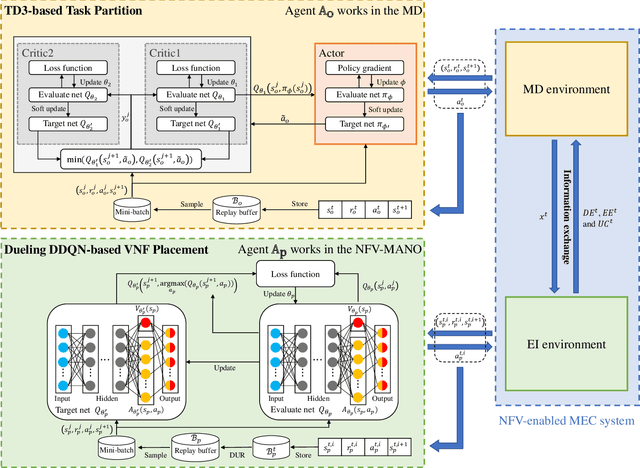

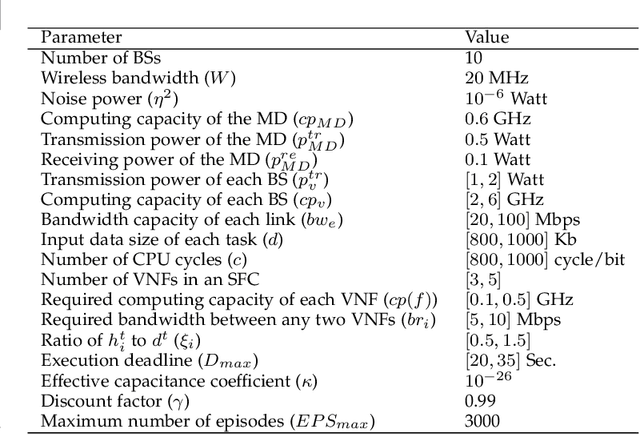

Multi-access edge computing (MEC) and network function virtualization (NFV) are promising technologies for supporting emerging IoT applications, especially computation-intensive ones. In an NFV-enabled MEC environment, a service function chain (SFC), i.e., an ordered set of virtual network functions (VNFs), can be mapped onto MEC servers. Mobile devices (MDs) can offload computation-intensive applications, which can be represented as SFCs, fully or partially to MEC servers for remote execution. This paper studies the partial offloading and SFC mapping joint optimization (POSMJO) problem in an NFV-enabled MEC system, where an incoming task can be partitioned into two parts, one for local execution and the other for remote execution. The objective is to minimize the long-term average cost, which combines execution delay, the MD's energy consumption, and the usage charge for edge computing. The problem consists of two closely coupled decision-making steps, namely task partition and VNF placement, which makes it highly complex and challenging. To address it, we propose a cooperative dual-agent deep reinforcement learning (CDADRL) algorithm, for which we design a framework that enables interaction between the two agents. Simulation results show that the proposed algorithm outperforms three combinations of deep reinforcement learning algorithms in terms of cumulative and average episodic rewards, and that it outperforms a number of baseline algorithms with respect to execution delay, energy consumption, and usage charge.
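To make the cost objective concrete, the following is a minimal sketch of how a per-task cost combining delay, energy, and usage charge might be computed for a given offloading ratio. It is not the paper's model: the abstract does not give the formulas, so the weighted-sum form, the assumption that the local and remote parts run in parallel, the dynamic-energy model `kappa * f^2 * cycles`, the per-cycle pricing, and every name (`Task`, `partial_offloading_cost`, `w_delay`, and so on) are illustrative assumptions. VNF placement is not modeled here; the edge side is reduced to a single CPU frequency.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical task description (not taken from the paper)."""
    cycles: float     # total CPU cycles the task needs
    data_bits: float  # input data size in bits


def partial_offloading_cost(task, ratio, *, f_local, f_edge, rate,
                            kappa, p_tx, price,
                            w_delay=1.0, w_energy=1.0, w_charge=1.0):
    """Weighted cost of offloading a fraction `ratio` of the task.

    Assumptions (all illustrative): the two parts execute in parallel,
    local energy follows kappa * f^2 * cycles, and the edge charges a
    fixed price per executed cycle.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")

    local_cycles = (1.0 - ratio) * task.cycles
    remote_cycles = ratio * task.cycles

    # Delay: local execution vs. upload plus remote execution.
    t_local = local_cycles / f_local
    t_tx = ratio * task.data_bits / rate
    t_remote = t_tx + remote_cycles / f_edge
    delay = max(t_local, t_remote)

    # Energy spent by the mobile device: computing plus transmitting.
    energy = kappa * f_local ** 2 * local_cycles + p_tx * t_tx

    # Usage charge paid for edge computing.
    charge = price * remote_cycles

    return w_delay * delay + w_energy * energy + w_charge * charge


if __name__ == "__main__":
    task = Task(cycles=100.0, data_bits=50.0)
    params = dict(f_local=10.0, f_edge=100.0, rate=50.0,
                  kappa=0.01, p_tx=2.0, price=0.1)
    # Sweep a few offloading ratios to see how the cost changes.
    for ratio in (0.0, 0.5, 1.0):
        print(ratio, partial_offloading_cost(task, ratio, **params))
```

In a learning setup of the kind the abstract describes, the negative of such a cost could serve as the reward signal, with one agent choosing `ratio` and another choosing where the remote VNFs are placed; how the paper actually defines the reward is not stated in the abstract.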
