On Exploring Pose Estimation as an Auxiliary Learning Task for Visible-Infrared Person Re-identification

Jan 11, 2022

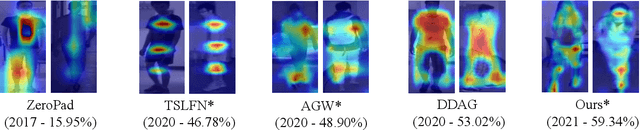

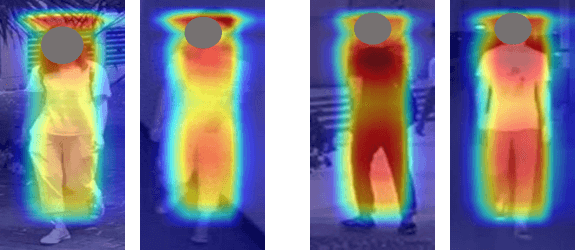

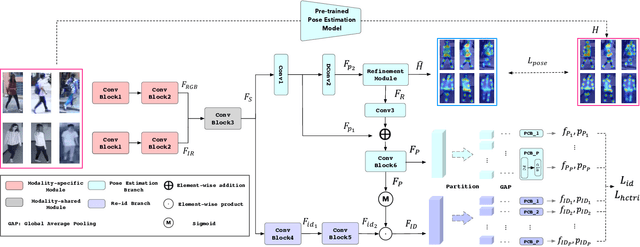

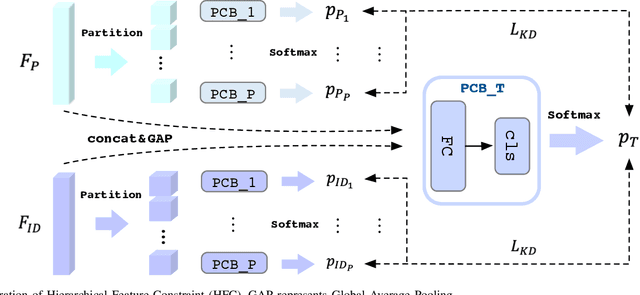

Visible-infrared person re-identification (VI-ReID) is challenging because of the large discrepancies between the visible and infrared modalities. Most pioneering approaches reduce intra-class variations and inter-modality discrepancies by learning modality-shared and ID-related features. However, an explicit modality-shared cue, i.e., body keypoints, has not been fully exploited in VI-ReID. Additionally, existing feature learning paradigms impose constraints on either global features or partitioned feature stripes, and thus neglect the prediction consistency between global and part features. To address these problems, we exploit Pose Estimation as an auxiliary learning task to assist the VI-ReID task in an end-to-end framework. By jointly training the two tasks in a mutually beneficial manner, our model learns higher-quality modality-shared and ID-related features. On top of this, the learning of global and local features is synchronized by a Hierarchical Feature Constraint (HFC), in which the former supervises the latter through a knowledge distillation strategy. Experimental results on two benchmark VI-ReID datasets show that the proposed method consistently outperforms state-of-the-art methods by significant margins. Specifically, our method improves mAP by nearly 20% over the state-of-the-art method on the RegDB dataset. These findings highlight the value of auxiliary task learning in VI-ReID.
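To make the idea of global features supervising part features more concrete, the following is a minimal sketch of a distillation-style consistency term, not the paper's actual HFC formulation, which the abstract does not specify. It assumes that identity logits are available for the global feature and for each part feature, and uses a KL divergence between their softmax distributions as the consistency measure. The names `softmax`, `kl_divergence`, `hierarchical_consistency_loss`, and the `temperature` parameter are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def hierarchical_consistency_loss(global_logits, part_logits_list, temperature=1.0):
    """Average KL divergence from the global prediction (teacher)
    to each part prediction (student).

    Assumption: in a real training framework the teacher distribution
    would be treated as a constant (no gradient flows through it).
    """
    teacher = softmax(global_logits, temperature)
    losses = [
        kl_divergence(teacher, softmax(part_logits, temperature))
        for part_logits in part_logits_list
    ]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Toy identity logits for one image: one global head, two part heads.
    global_logits = [2.0, 0.5, -1.0]
    part_logits = [[1.5, 0.7, -0.8], [0.2, 1.9, -0.5]]
    print(hierarchical_consistency_loss(global_logits, part_logits))
```

In a jointly trained model, a term like this would presumably be added to the re-identification and pose estimation losses with some weighting. The weighting and the exact form of each loss are not given in the abstract.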
