OMNet: Learning Overlapping Mask for Partial-to-Partial Point Cloud Registration

Paper and Code

Mar 03, 2021

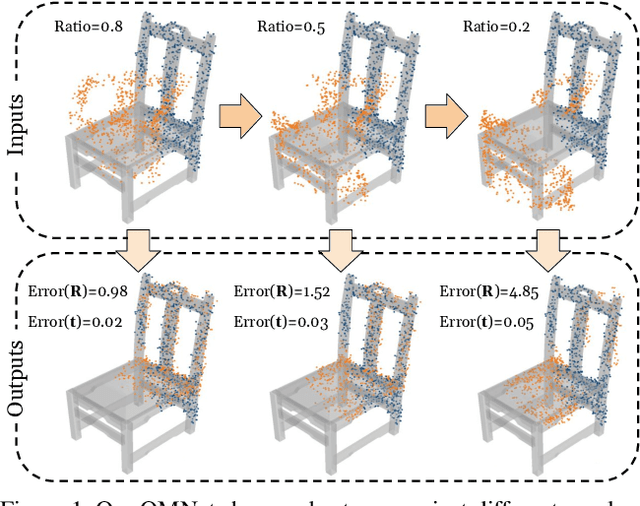

Point cloud registration is a key task in many computational fields. Previous correspondence-matching methods require the point clouds to have distinctive geometric structures in order to fit a 3D rigid transformation from sparse point-wise feature matches. However, the accuracy of the transformation relies heavily on the quality of the extracted features, which are prone to errors under partiality and noise in the inputs. In addition, these methods cannot utilize the geometric knowledge of all regions. On the other hand, previous global-feature-based deep learning approaches can utilize the entire point cloud for registration; however, they ignore the negative effect of non-overlapping points when aggregating a global feature from point-wise features. In this paper, we present OMNet, a global-feature-based iterative network for partial-to-partial point cloud registration. We learn masks in a coarse-to-fine manner to reject non-overlapping regions, which converts partial-to-partial registration into the registration of the same shapes. Moreover, the data used in previous works are sampled only once from the CAD model of each object, resulting in the same point cloud for the source and the reference. We propose a more practical manner of data generation, in which a CAD model is sampled twice, once for the source and once for the reference point cloud, avoiding the over-fitting issues common in previous works. Experimental results show that our approach achieves state-of-the-art performance compared to traditional and deep learning methods.
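The idea of rejecting non-overlapping points before aggregating a global feature can be illustrated with a minimal NumPy sketch. This is not the OMNet architecture: the function name `masked_global_feature`, the use of max-pooling as the aggregation step, and the toy feature values are all assumptions made for illustration, and the mask here is given by hand rather than learned.

```python
import numpy as np

def masked_global_feature(point_features, overlap_mask):
    """Max-pool point-wise features over points flagged as overlapping.

    point_features: (N, C) array of per-point features.
    overlap_mask:   (N,) boolean array, True for points assumed to overlap.
    Hypothetical helper for illustration; not taken from the paper's code.
    """
    point_features = np.asarray(point_features, dtype=float)
    overlap_mask = np.asarray(overlap_mask, dtype=bool)
    if not overlap_mask.any():
        # Assumed fallback: if the mask rejects everything, keep all points.
        overlap_mask = np.ones_like(overlap_mask)
    # Set rejected rows to -inf so they can never win the per-channel max.
    masked = np.where(overlap_mask[:, None], point_features, -np.inf)
    return masked.max(axis=0)

# Toy example: the third point lies outside the overlap and has large features.
features = np.array([[0.1, 0.9],
                     [0.8, 0.2],
                     [5.0, 5.0]])
mask = np.array([True, True, False])

pooled_masked = masked_global_feature(features, mask)
pooled_plain = features.max(axis=0)
```

In this toy case the unmasked pooling is dominated by the non-overlapping point, while the masked pooling depends only on the two overlapping points, which is the effect the abstract attributes to ignoring non-overlapping regions.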
