Object Finding in Cluttered Scenes Using Interactive Perception

Nov 18, 2019

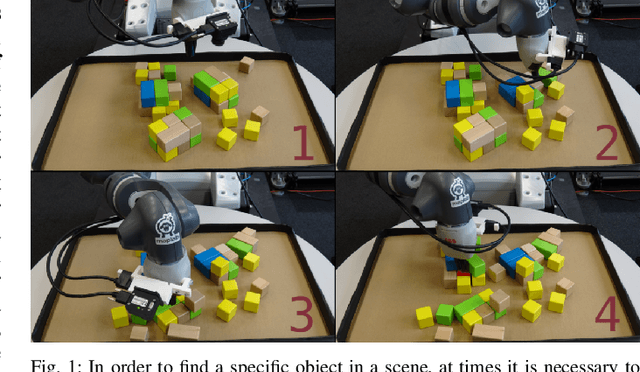

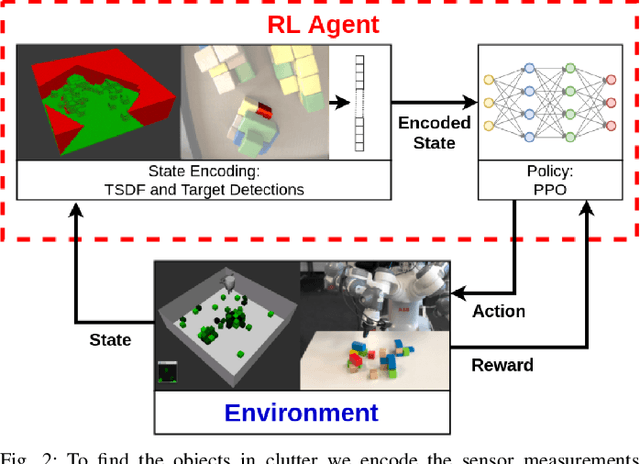

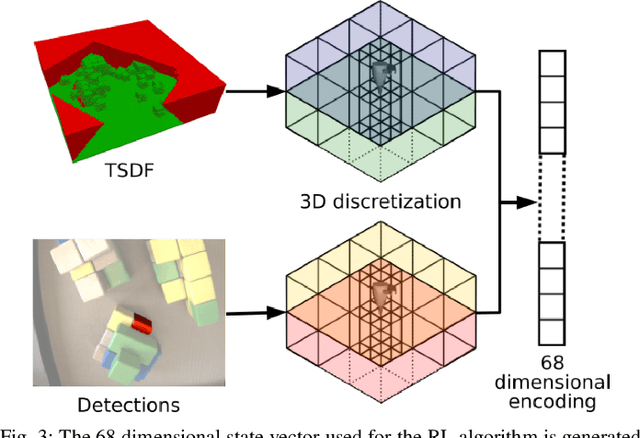

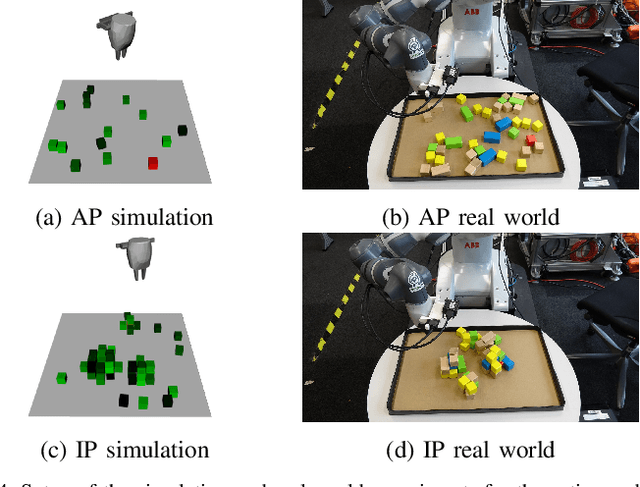

Finding objects in clutter is a skill that requires perception of the environment and, in many cases, physical interaction. In robotics, interactive perception refers to a set of algorithms that leverage actions to improve perception of the environment and, conversely, use perception to guide the next action. Scene interactions are difficult to model, so most current systems rely on predefined heuristics, which limits their ability to search efficiently for a target object in a complex environment. To remove these heuristics and the need for explicit models of the interactions, we propose a reinforcement-learning-based active and interactive perception system for scene exploration and object search. We evaluate our work in both simulated and real-world experiments using a robotic manipulator equipped with an RGB camera and a depth camera, and compare our system to two baselines. The results indicate that our approach, trained in simulation only, transfers smoothly to reality and solves the object-finding task efficiently, with a success rate of more than 90%.
