Not All Neural Embeddings are Born Equal

Nov 13, 2014

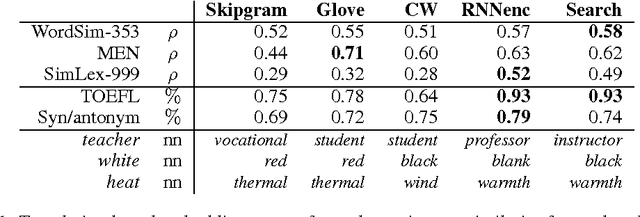

Neural language models learn word representations that capture rich linguistic and conceptual information. Here we investigate the embeddings learned by neural machine translation models. We show that translation-based embeddings outperform those learned by cutting-edge monolingual models at single-language tasks requiring knowledge of conceptual similarity and/or syntactic role. The findings suggest that, while monolingual models learn information about how concepts are related, neural-translation models better capture their true ontological status.

* 4 pages plus 1 page of references
