Non-Autoregressive Neural Dialogue Generation

Paper and Code

Feb 13, 2020

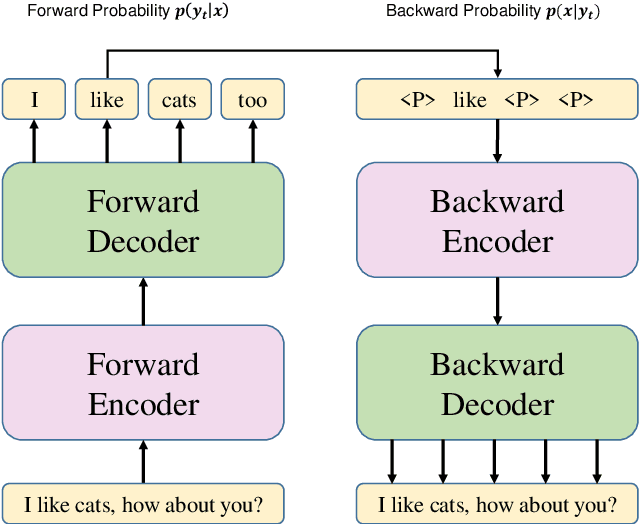

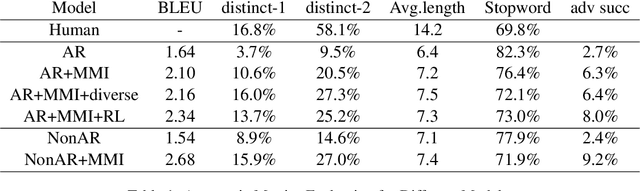

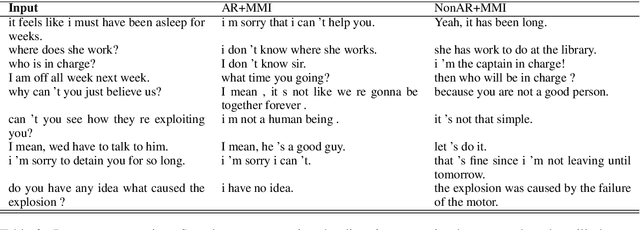

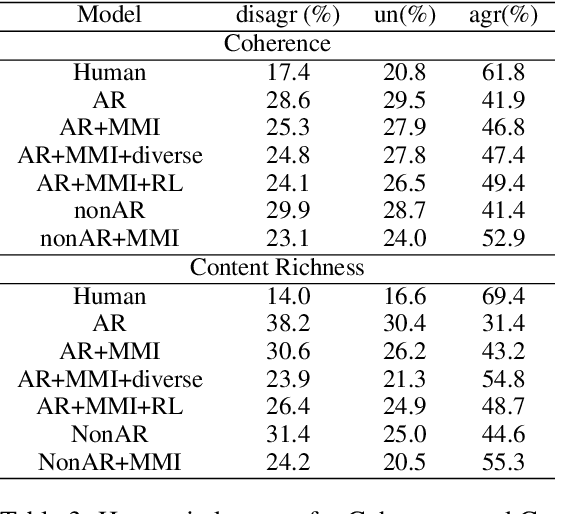

Maximum Mutual information (MMI), which models the bidirectional dependency between responses ($y$) and contexts ($x$), i.e., the forward probability $\log p(y|x)$ and the backward probability $\log p(x|y)$, has been widely used as the objective in the \sts model to address the dull-response issue in open-domain dialog generation. Unfortunately, under the framework of the \sts model, direct decoding from $\log p(y|x) + \log p(x|y)$ is infeasible since the second part (i.e., $p(x|y)$) requires the completion of target generation before it can be computed, and the search space for $y$ is enormous. Empirically, an N-best list is first generated given $p(y|x)$, and $p(x|y)$ is then used to rerank the N-best list, which inevitably results in non-globally-optimal solutions. In this paper, we propose to use non-autoregressive (non-AR) generation model to address this non-global optimality issue. Since target tokens are generated independently in non-AR generation, $p(x|y)$ for each target word can be computed as soon as it's generated, and does not have to wait for the completion of the whole sequence. This naturally resolves the non-global optimal issue in decoding. Experimental results demonstrate that the proposed non-AR strategy produces more diverse, coherent, and appropriate responses, yielding substantive gains in BLEU scores and in human evaluations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge