NG+ : A Multi-Step Matrix-Product Natural Gradient Method for Deep Learning


Jun 14, 2021

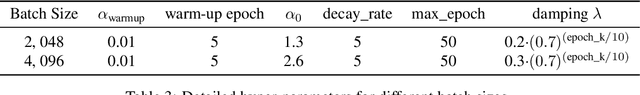

In this paper, a novel second-order method called NG+ is proposed. By following the rule "the shape of the gradient equals the shape of the parameter", we define a generalized Fisher information matrix (GFIM) using products of gradients in matrix form rather than the traditional vectorization. Our generalized natural gradient direction is then simply the inverse of the GFIM multiplied by the gradient in matrix form. Moreover, the GFIM and its inverse are kept unchanged for multiple steps, so that the computational cost is controlled and comparable to that of first-order methods. Global convergence is established under some mild conditions, and a regret bound is given for the online learning setting. Numerical results on image classification with ResNet50, quantum chemistry modeling with SchNet, neural machine translation with Transformer, and recommendation systems with DLRM illustrate that NG+ is competitive with state-of-the-art methods.
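The update described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not give the exact GFIM formula, so the sketch assumes the GFIM is the batch average of `G_i @ G_i.T` over per-sample gradient matrices `G_i`, and it adds a damping term before inversion. The function names, the `refresh_every` schedule, the damping value, and the toy least-squares problem are all hypothetical choices made for illustration.

```python
import numpy as np


def gfim_inverse(per_sample_grads, damping=1.0):
    """Damped inverse of an ASSUMED GFIM: mean_i(G_i @ G_i.T).

    per_sample_grads has shape (batch, m, n), one m x n gradient matrix
    per sample. The exact definition in the paper may differ.
    """
    batch, m, _ = per_sample_grads.shape
    # Sum of G_i @ G_i.T over the batch gives an m x m matrix.
    gfim = np.einsum("bij,bkj->ik", per_sample_grads, per_sample_grads) / batch
    return np.linalg.inv(gfim + damping * np.eye(m))


def ng_plus_sketch(weight, grad_fn, steps=20, lr=0.1, refresh_every=5, damping=1.0):
    """Matrix-form preconditioned descent with a multi-step inverse.

    The inverse is recomputed only every `refresh_every` steps
    (a hypothetical schedule) and reused in between.
    """
    gfim_inv = None
    for step in range(steps):
        per_sample_grads = grad_fn(weight)
        if step % refresh_every == 0:
            gfim_inv = gfim_inverse(per_sample_grads, damping)
        mean_grad = per_sample_grads.mean(axis=0)
        # The direction is (m x m) @ (m x n), so it keeps the parameter's shape.
        weight = weight - lr * gfim_inv @ mean_grad
    return weight


# Toy least-squares problem (illustrative only): fit y = W x.
rng = np.random.default_rng(0)
inputs = rng.standard_normal((64, 3))
true_weight = rng.standard_normal((4, 3))
targets = inputs @ true_weight.T


def toy_grads(weight):
    """Per-sample gradients of 0.5 * ||W x_i - y_i||^2, shape (64, 4, 3)."""
    residuals = inputs @ weight.T - targets
    return residuals[:, :, None] * inputs[:, None, :]


def toy_loss(weight):
    residuals = inputs @ weight.T - targets
    return 0.5 * np.mean(np.sum(residuals ** 2, axis=1))


initial_weight = np.zeros((4, 3))
final_weight = ng_plus_sketch(initial_weight, toy_grads)
```

For an `m x n` parameter, the matrix inverted here is only `m x m`, whereas a Fisher matrix built from vectorized gradients would be `mn x mn`. Reusing the inverse across steps means most iterations cost little more than a gradient step plus one matrix product.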
