Neural Contextual Anomaly Detection for Time Series

Paper and Code

Jul 16, 2021

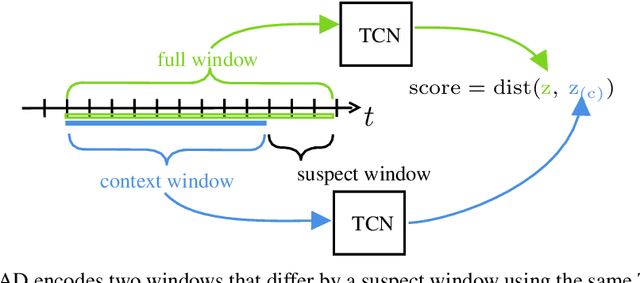

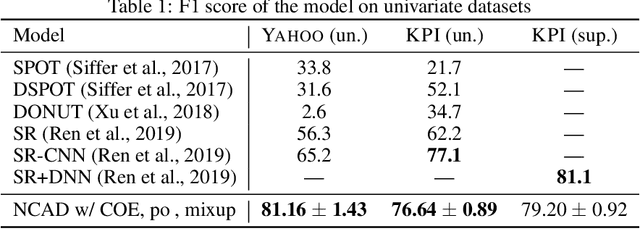

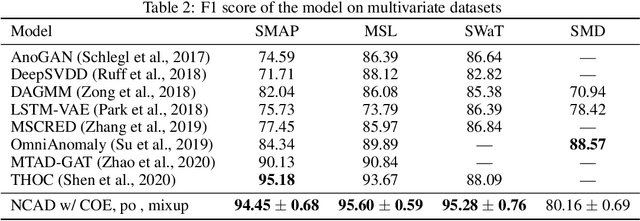

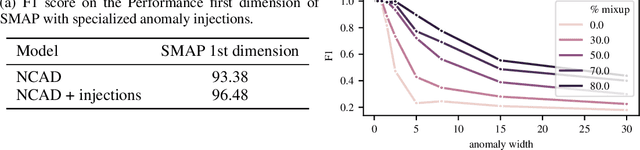

We introduce Neural Contextual Anomaly Detection (NCAD), a framework for anomaly detection on time series that scales seamlessly from the unsupervised to supervised setting, and is applicable to both univariate and multivariate time series. This is achieved by effectively combining recent developments in representation learning for multivariate time series, with techniques for deep anomaly detection originally developed for computer vision that we tailor to the time series setting. Our window-based approach facilitates learning the boundary between normal and anomalous classes by injecting generic synthetic anomalies into the available data. Moreover, our method can effectively take advantage of all the available information, be it as domain knowledge, or as training labels in the semi-supervised setting. We demonstrate empirically on standard benchmark datasets that our approach obtains a state-of-the-art performance in these settings.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge