Multiplicative Updates for Non-Negative Kernel SVM

Paper and Code

Feb 24, 2009

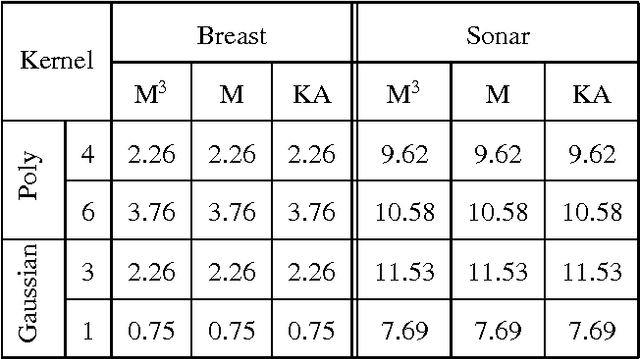

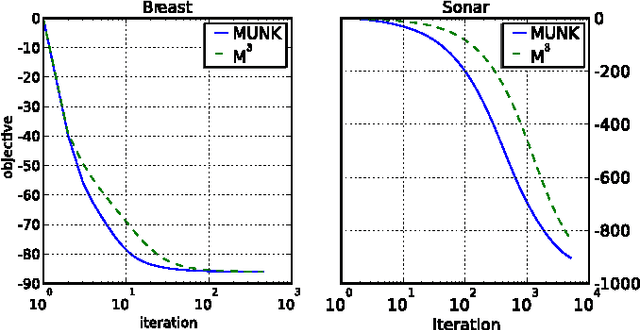

We present multiplicative updates for solving hard- and soft-margin support vector machines (SVMs) with non-negative kernels. They follow as a natural extension of the updates for non-negative matrix factorization. No additional parameter setting, such as choosing a learning rate, is required. Experiments demonstrate rapid convergence to good classifiers. We analyze the asymptotic convergence rates of the updates and establish tight bounds. We test the performance on several datasets using various non-negative kernels and report generalization errors equivalent to those of a standard SVM.

* 4 pages, 1 figure, 1 table
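
The abstract does not spell out the update rule, so the following is only a minimal sketch of what a learning-rate-free multiplicative update for a kernel SVM can look like. It assumes the bias-free hard-margin dual, a Gaussian kernel (which is non-negative), and the well-known multiplicative update of Sha, Saul, and Lee for non-negative quadratic programming. That rule is swapped in for illustration and is not necessarily the update derived in the paper. All function names and the toy data are hypothetical.

```python
import math

def gaussian_kernel(x, z, gamma=1.0):
    """Gaussian (RBF) kernel; its values lie in (0, 1], so it is non-negative."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def dual_objective(A, alpha):
    """Bias-free hard-margin dual to minimize: 0.5 * alpha^T A alpha - sum(alpha)."""
    n = len(alpha)
    quad = sum(alpha[i] * A[i][j] * alpha[j] for i in range(n) for j in range(n))
    return 0.5 * quad - sum(alpha)

def train_multiplicative(X, y, kernel=gaussian_kernel, iterations=200):
    """Assumed illustration: Sha-Saul-Lee multiplicative updates on the SVM dual."""
    n = len(X)
    # A[i][j] = y_i * y_j * K(x_i, x_j); split into non-negative parts A = A_pos - A_neg.
    A = [[y[i] * y[j] * kernel(X[i], X[j]) for j in range(n)] for i in range(n)]
    A_pos = [[max(v, 0.0) for v in row] for row in A]
    A_neg = [[max(-v, 0.0) for v in row] for row in A]
    alpha = [1.0] * n  # any strictly positive start keeps every iterate positive
    history = [dual_objective(A, alpha)]
    for _ in range(iterations):
        a = [sum(A_pos[i][j] * alpha[j] for j in range(n)) for i in range(n)]
        c = [sum(A_neg[i][j] * alpha[j] for j in range(n)) for i in range(n)]
        # Each alpha_i is rescaled by a positive factor; no step size is involved.
        alpha = [
            alpha[i] * (1.0 + math.sqrt(1.0 + 4.0 * a[i] * c[i])) / (2.0 * a[i])
            for i in range(n)
        ]
        history.append(dual_objective(A, alpha))
    return alpha, history

def predict(X, y, alpha, x_new, kernel=gaussian_kernel):
    """Sign of the bias-free decision function sum_i alpha_i * y_i * K(x_i, x_new)."""
    score = sum(alpha[i] * y[i] * kernel(X[i], x_new) for i in range(len(X)))
    return 1 if score >= 0 else -1

# Toy, linearly separable data (hypothetical, for illustration only).
X_toy = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (3.0, 3.0), (3.0, 4.0), (4.0, 3.0)]
y_toy = [1, 1, 1, -1, -1, -1]
alpha_toy, history_toy = train_multiplicative(X_toy, y_toy)
print("final dual objective:", history_toy[-1])
print("prediction at (0.5, 0.5):", predict(X_toy, y_toy, alpha_toy, (0.5, 0.5)))
```

In this sketch the dual objective never increases from one iteration to the next, and the only inputs besides the data are the kernel and the iteration count. A soft-margin variant would additionally need to keep each coefficient below the penalty bound, which is not shown here.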
