Multi-Task Deep Networks for Depth-Based 6D Object Pose and Joint Registration in Crowd Scenarios

Jun 11, 2018

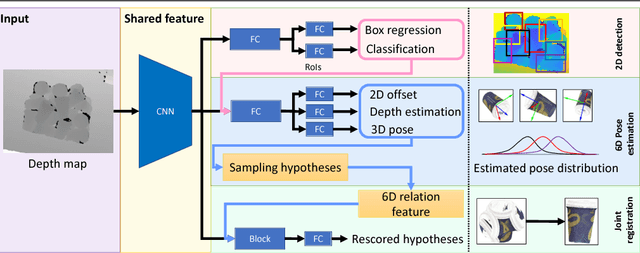

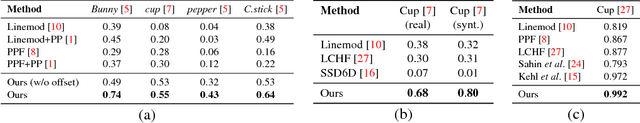

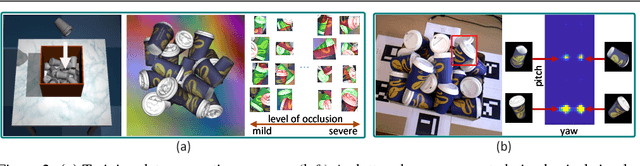

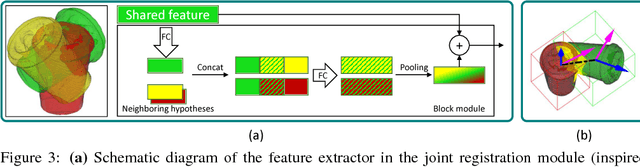

In bin-picking scenarios, multiple instances of an object of interest are randomly stacked in a pile, so the instances are inherently subject to severe occlusion, clutter, and similar-looking distractors. Most existing methods, however, target single isolated object instances, while some recent methods handle crowd scenarios with a post-refinement step that accounts for relations among multiple objects. In this paper, we address the recovery of 6D poses of multiple instances in bin-picking scenarios from depth images, using multi-task learning in deep neural networks. Our architecture jointly learns multiple sub-tasks: 2D detection, depth, and 3D pose estimation of individual objects; and joint registration of multiple objects. For training data generation, depth images of physically plausible object pose configurations are generated from a 3D object model in a physics simulation, which yields diverse occlusion patterns to learn from. We adopt a state-of-the-art object detector, and a network further estimates 2D offsets to refine misaligned 2D detections. The depth and 3D pose estimator is designed to generate multiple hypotheses per detection, which allows the joint registration network to learn occlusion patterns and remove physically implausible pose hypotheses. We apply our architecture to both synthetic data (our own and the Sileane dataset) and real data (a public Bin-Picking dataset), showing that it significantly outperforms state-of-the-art methods by 15-31% in average precision.
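To make the idea of pruning physically implausible pose hypotheses concrete, here is a minimal sketch. It is not the learned joint registration network described in the abstract. It swaps in a hand-written greedy filter that keeps, per detection, the highest-scoring hypothesis that does not interpenetrate hypotheses already kept. The `PoseHypothesis` class, the `select_plausible` function, and the bounding-sphere collision test are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class PoseHypothesis:
    """Hypothetical container; the paper's actual representation is not given here."""
    detection_id: int   # index of the 2D detection that produced this hypothesis
    center: tuple       # hypothesised 3D object centre (x, y, z) in metres
    score: float        # confidence assigned to this hypothesis


def select_plausible(hypotheses, radius):
    """Greedily keep at most one hypothesis per detection.

    Assumption: each object is approximated by a bounding sphere of the given
    radius, so two hypotheses are treated as interpenetrating when their
    centres are closer than 2 * radius.
    """
    kept = []
    resolved = set()  # detections that already have an accepted hypothesis
    for hyp in sorted(hypotheses, key=lambda h: h.score, reverse=True):
        if hyp.detection_id in resolved:
            continue
        if all(dist(hyp.center, other.center) >= 2 * radius for other in kept):
            kept.append(hyp)
            resolved.add(hyp.detection_id)
    return kept


hypotheses = [
    PoseHypothesis(0, (0.00, 0.0, 0.0), 0.9),
    PoseHypothesis(1, (0.01, 0.0, 0.0), 0.8),  # overlaps the object above
    PoseHypothesis(1, (0.20, 0.0, 0.0), 0.6),  # lower score, but collision-free
]
kept = select_plausible(hypotheses, radius=0.05)
```

In this toy input, the second detection's top-scoring hypothesis overlaps an already accepted object, so its lower-scoring alternative is selected instead. This is the benefit of carrying multiple hypotheses per detection into a joint reasoning stage.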
