Multi-layer VI-GNSS Global Positioning Framework with Numerical Solution aided MAP Initialization

Paper and Code

Jan 05, 2022

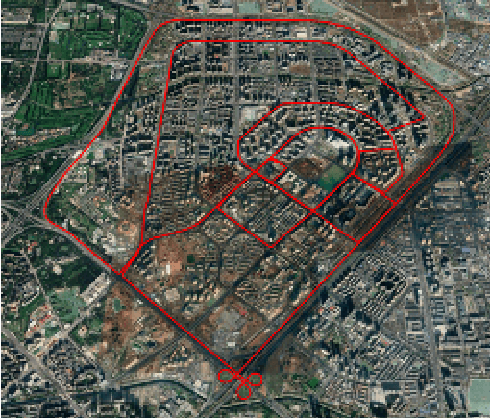

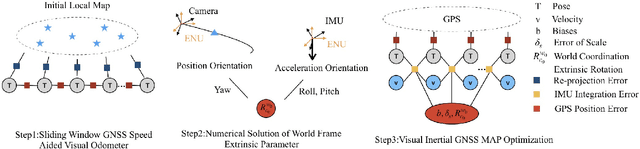

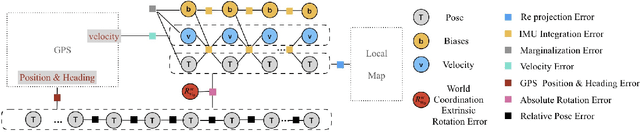

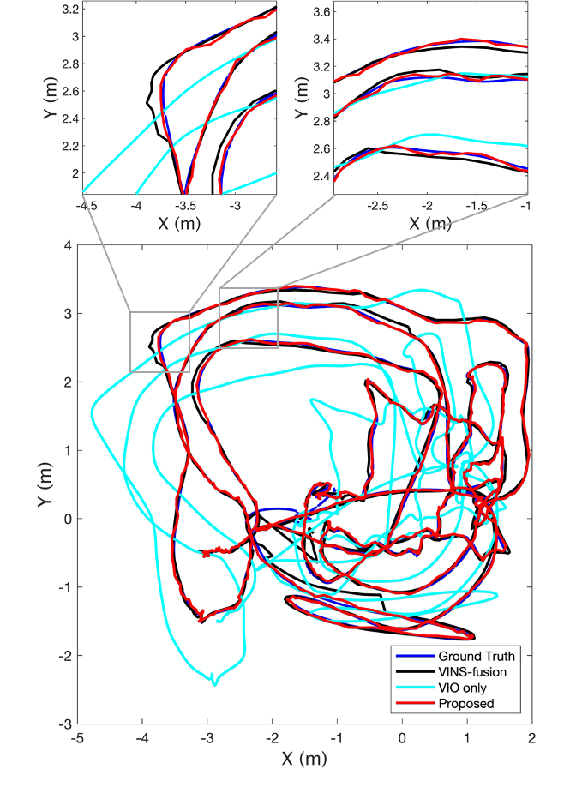

Motivated by the goal of long-term, drift-free camera pose estimation in complex scenarios, we propose a global positioning framework that fuses visual, inertial, and Global Navigation Satellite System (GNSS) measurements in multiple layers. Unlike previous loosely coupled and tightly coupled methods, the proposed multi-layer fusion corrects the drift of visual odometry and maintains reliable positioning when GNSS degrades. Specifically, the inner layer performs local motion estimation, addressing scale drift and inaccurate bias estimation in visual odometry by fusing GNSS velocity, Inertial Measurement Unit (IMU) pre-integration, and camera measurements in a tightly coupled way. The outer layer performs global localization, further fusing the local motion with GNSS position and course over a long period in a loosely coupled way. Furthermore, a dedicated initialization method is proposed to ensure fast and accurate estimation of all state variables and parameters. We test the proposed framework extensively on indoor and outdoor public datasets. The mean localization error is reduced by up to 63%, and initialization accuracy improves by 69% compared with state-of-the-art works. We have applied the algorithm to Augmented Reality (AR) navigation, crowdsourced high-precision map updating, and other large-scale applications.
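To make the idea of an outer, loosely coupled layer more concrete, the following is a minimal sketch and not the method from the paper. It assumes the simplest possible stand-in: a closed-form least-squares 2D rigid alignment (yaw plus translation) that maps positions from a local odometry frame onto matching GNSS positions. The function names `align_local_to_global` and `to_global` are hypothetical, and the planar, noise-free, already time-synchronized setup is an assumption made purely for illustration.

```python
import math


def align_local_to_global(local_xy, gnss_xy):
    """Least-squares 2D rigid alignment (yaw + translation).

    Illustrative stand-in for a loosely coupled fusion step; this is an
    assumption for demonstration, not the paper's outer-layer optimizer.
    """
    n = len(local_xy)
    if n != len(gnss_xy) or n < 2:
        raise ValueError("need at least two matched position pairs")

    # Centroids of both point sets.
    lcx = sum(p[0] for p in local_xy) / n
    lcy = sum(p[1] for p in local_xy) / n
    gcx = sum(q[0] for q in gnss_xy) / n
    gcy = sum(q[1] for q in gnss_xy) / n

    # Accumulate dot and cross products of the centered points.
    s_dot = 0.0
    s_cross = 0.0
    for (lx, ly), (gx, gy) in zip(local_xy, gnss_xy):
        ax, ay = lx - lcx, ly - lcy
        bx, by = gx - gcx, gy - gcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # Optimal rotation angle, then the translation that matches centroids.
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    tx = gcx - (c * lcx - s * lcy)
    ty = gcy - (s * lcx + c * lcy)
    return yaw, (tx, ty)


def to_global(point, yaw, translation):
    """Map a local-frame point into the global frame."""
    c, s = math.cos(yaw), math.sin(yaw)
    x, y = point
    return (c * x - s * y + translation[0], s * x + c * y + translation[1])


# Hypothetical data: a straight local track observed by GNSS after a
# 90-degree rotation and a (10, 5) offset.
local_track = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
gnss_track = [(10.0, 5.0), (10.0, 6.0), (10.0, 7.0)]
yaw, translation = align_local_to_global(local_track, gnss_track)
```

In this sketch the odometry is treated as a finished product and only its frame is corrected, which is what distinguishes a loosely coupled step from a tightly coupled one that would feed raw GNSS, IMU, and camera measurements into a single estimator.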
