Motion Estimation in Occupancy Grid Maps in Stationary Settings Using Recurrent Neural Networks


Sep 25, 2019

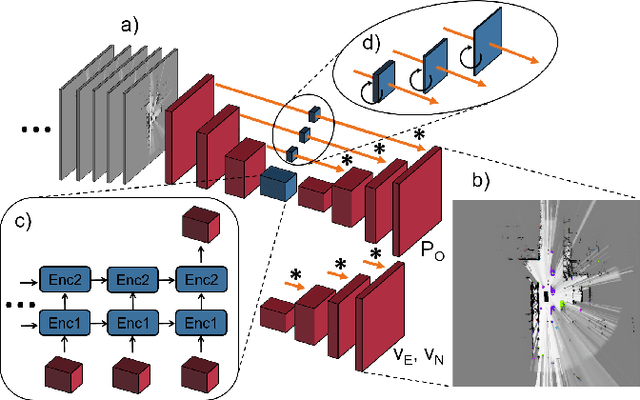

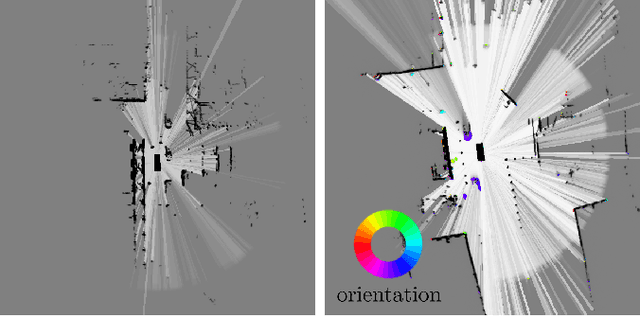

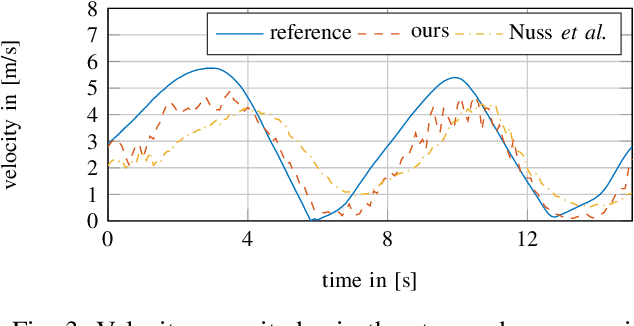

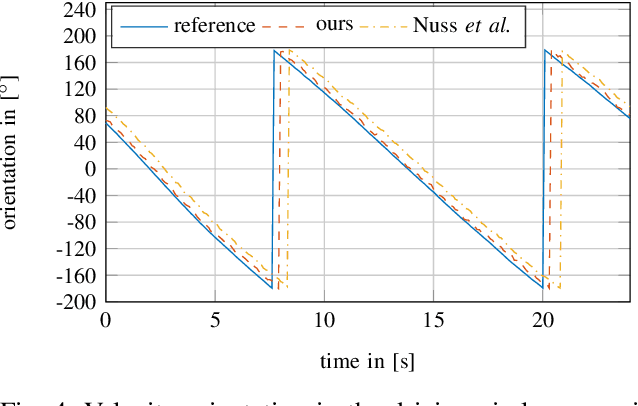

In this work, we tackle the problem of modeling the vehicle environment as a dynamic occupancy grid map in complex urban scenarios using recurrent neural networks. Dynamic occupancy grid maps represent the scene in a bird's-eye view, where each grid cell contains an occupancy probability and a two-dimensional velocity. As input data, our approach relies on measurement grid maps, which contain occupancy probabilities generated from lidar measurements. Given this configuration, we propose a recurrent neural network architecture that predicts a dynamic occupancy grid map, i.e. the filtered occupancy and velocity of each cell, from a sequence of measurement grid maps. Our network architecture contains convolutional long short-term memory (ConvLSTM) layers in order to process the input sequentially, make use of spatial context, and capture motion. In the evaluation, we quantify improvements in estimating the velocity of braking and turning vehicles compared to the state of the art. Additionally, we demonstrate that our approach provides more consistent velocity estimates for dynamic objects, as well as fewer erroneous velocity estimates in static areas.
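To make the data flow described in the abstract concrete, the following is a minimal sketch in pure Python, not the authors' architecture. It assumes a single peephole-free ConvLSTM cell with 3x3 kernels, followed by a 3x3 convolutional head that outputs three channels per cell: an occupancy probability and the two velocity components. All names (`ConvLSTMCell`, `predict_dogm`, `random_weights`), the layer sizes, and the random, untrained weights are hypothetical and chosen only for illustration, so the numeric outputs carry no meaning.

```python
import math
import random

def zeros(channels, rows, cols):
    """Tensor of shape channels x rows x cols, stored as nested lists."""
    return [[[0.0] * cols for _ in range(rows)] for _ in range(channels)]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def conv3x3(x, w, b):
    """Zero-padded 'same' 3x3 convolution; w[o][i][dy][dx], b[o]."""
    rows, cols = len(x[0]), len(x[0][0])
    out = []
    for o in range(len(w)):
        plane = [[b[o]] * cols for _ in range(rows)]
        for i in range(len(x)):
            for r in range(rows):
                for c in range(cols):
                    acc = 0.0
                    for dy in range(3):
                        for dx in range(3):
                            rr, cc = r + dy - 1, c + dx - 1
                            if 0 <= rr < rows and 0 <= cc < cols:
                                acc += w[o][i][dy][dx] * x[i][rr][cc]
                    plane[r][c] += acc
        out.append(plane)
    return out

def random_weights(rng, n_out, n_in, scale=0.1):
    """Hypothetical initializer: small uniform weights, zero biases."""
    w = [[[[rng.uniform(-scale, scale) for _ in range(3)] for _ in range(3)]
          for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out

class ConvLSTMCell:
    """Peephole-free ConvLSTM cell (an assumption; the paper's variant may differ)."""

    def __init__(self, in_ch, hid_ch, seed=0):
        self.hid_ch = hid_ch
        # One convolution over [x, h] yields all four gates: i, f, o, g.
        self.w, self.b = random_weights(random.Random(seed), 4 * hid_ch, in_ch + hid_ch)

    def step(self, x, h, c):
        gates = conv3x3(x + h, self.w, self.b)  # list concat == channel concat
        n = self.hid_ch
        rows, cols = len(h[0]), len(h[0][0])
        h_new, c_new = zeros(n, rows, cols), zeros(n, rows, cols)
        for k in range(n):
            for r in range(rows):
                for q in range(cols):
                    i = sigmoid(gates[k][r][q])              # input gate
                    f = sigmoid(gates[n + k][r][q])          # forget gate
                    o = sigmoid(gates[2 * n + k][r][q])      # output gate
                    g = math.tanh(gates[3 * n + k][r][q])    # candidate state
                    c_new[k][r][q] = f * c[k][r][q] + i * g
                    h_new[k][r][q] = o * math.tanh(c_new[k][r][q])
        return h_new, c_new

def predict_dogm(sequence, cell, head_w, head_b):
    """Run a sequence of measurement grids through the cell, then map the
    final hidden state to (occupancy, vx, vy) grids."""
    rows, cols = len(sequence[0]), len(sequence[0][0])
    h = zeros(cell.hid_ch, rows, cols)
    c = zeros(cell.hid_ch, rows, cols)
    for grid in sequence:
        h, c = cell.step([grid], h, c)  # each grid is a 1-channel input
    out = conv3x3(h, head_w, head_b)
    occupancy = [[sigmoid(v) for v in row] for row in out[0]]
    return occupancy, out[1], out[2]

# Toy input: one occupied cell moving one column per frame on a 4x5 grid.
sequence = []
for t in range(3):
    grid = [[0.0] * 5 for _ in range(4)]
    grid[1][t + 1] = 1.0
    sequence.append(grid)

cell = ConvLSTMCell(in_ch=1, hid_ch=2, seed=0)
head_w, head_b = random_weights(random.Random(1), 3, 2)
occupancy, vx, vy = predict_dogm(sequence, cell, head_w, head_b)
```

The recurrent state is what lets the network relate a cell's occupancy across frames, while the 3x3 kernels give each cell access to its spatial neighborhood. In a real system the weights would be learned from data and the network would typically be deeper and implemented in a tensor library rather than nested lists.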
