MeliusNet: Can Binary Neural Networks Achieve MobileNet-level Accuracy?

Paper and Code

Jan 16, 2020

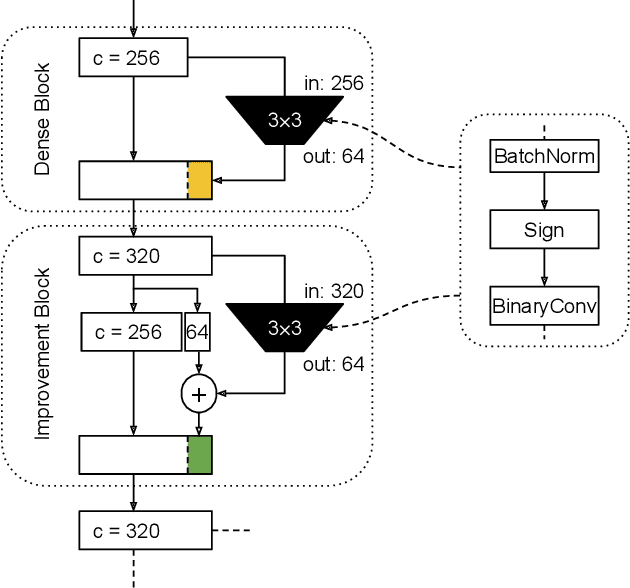

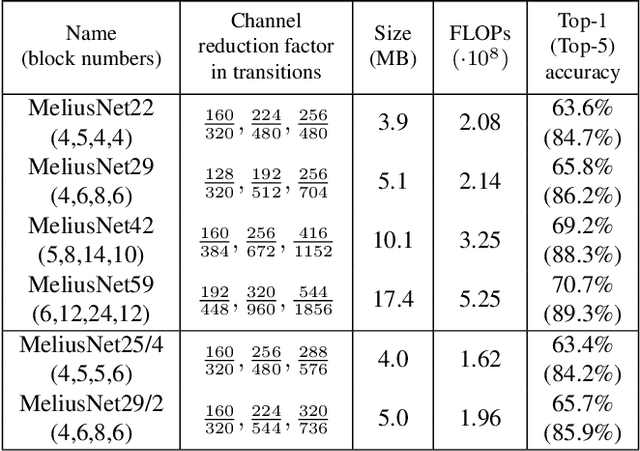

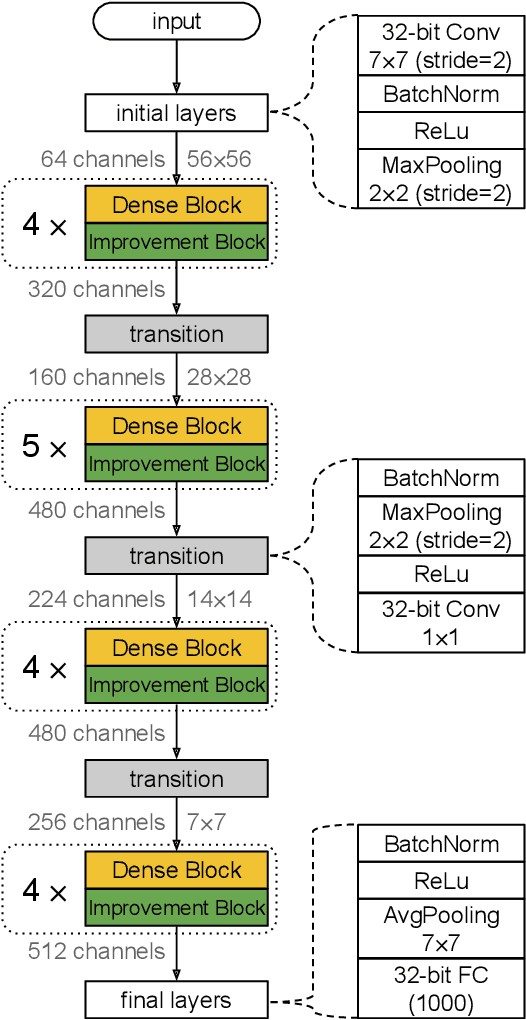

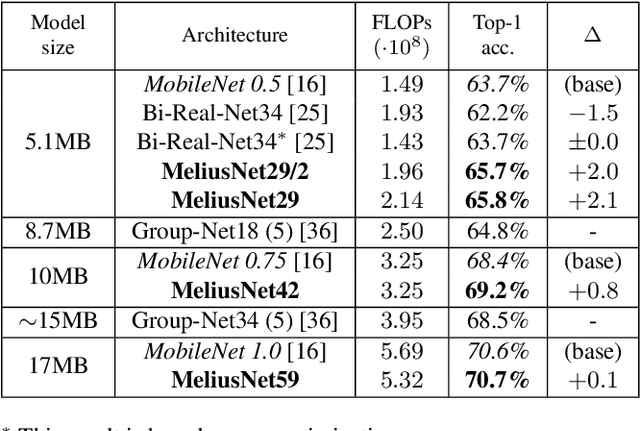

Binary Neural Networks (BNNs) are neural networks that use binary weights and activations instead of the typical 32-bit floating point values. They have reduced model sizes and allow for efficient inference on mobile or embedded devices with limited power and computational resources. However, the binarization of weights and activations leads to feature maps of lower quality and lower capacity, and thus to a drop in accuracy compared to traditional networks. Previous work has increased the number of channels or used multiple binary bases to alleviate these problems. In this paper, we instead present MeliusNet, which alternates between two block designs: the first increases the number of features, and the second improves the quality of these features. In addition, we propose a redesign of those layers that use 32-bit values in previous approaches, to reduce the required number of operations. Experiments on the ImageNet dataset demonstrate the superior performance of MeliusNet over a variety of popular binary architectures with regard to both computation savings and accuracy. Furthermore, with our method we trained BNN models that, for the first time, can match the popular compact network MobileNet in terms of both model size and accuracy. Our code is published online: https://github.com/hpi-xnor/BMXNet-v2
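
To illustrate why binary weights and activations make inference cheap, the following is a minimal sketch in plain Python. It is not taken from the BMXNet-v2 code base. The helper names (`binarize`, `pack_bits`, `binary_dot`) and the example values are hypothetical, and the choice of mapping zero to +1 is an assumption. The sketch relies only on the general identity that the dot product of two {-1, +1} vectors of length n equals n minus twice the number of positions where they differ, which can be counted with XOR and popcount.

```python
def binarize(values):
    """Map each real value to +1 or -1 with the sign function.

    Assumption for this sketch: zero is mapped to +1.
    """
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a list of +1/-1 values into one integer, one bit per value.

    +1 is stored as bit 1 and -1 as bit 0, so each value needs 1 bit
    instead of the 32 bits of a float.
    """
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n.

    Positions where the bits differ contribute -1, all others +1,
    so the result is n - 2 * popcount(a XOR b).
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return n - 2 * mismatches


# Hypothetical example values, chosen only for illustration.
weights = [0.7, -1.2, 0.1, -0.3, 2.0, -0.5, 0.0, 0.9]
activations = [-0.4, -0.8, 1.5, 0.2, 0.6, -2.1, -0.1, 0.3]

w_sign = binarize(weights)
a_sign = binarize(activations)

# Reference result using ordinary multiply-accumulate on the +1/-1 values.
reference = sum(w * a for w, a in zip(w_sign, a_sign))

# Same result using only XOR and popcount on the packed bits.
fast = binary_dot(pack_bits(w_sign), pack_bits(a_sign), len(weights))

print(reference, fast)
```

Both computations give the same value, but the second needs no multiplications. The sketch also shows the cost named in the abstract: after `binarize`, the magnitudes of the original values are lost, which is the reduced feature quality that the two block designs are meant to compensate for.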
