Maximal Cliques on Multi-Frame Proposal Graph for Unsupervised Video Object Segmentation

Paper and Code

Jan 29, 2023

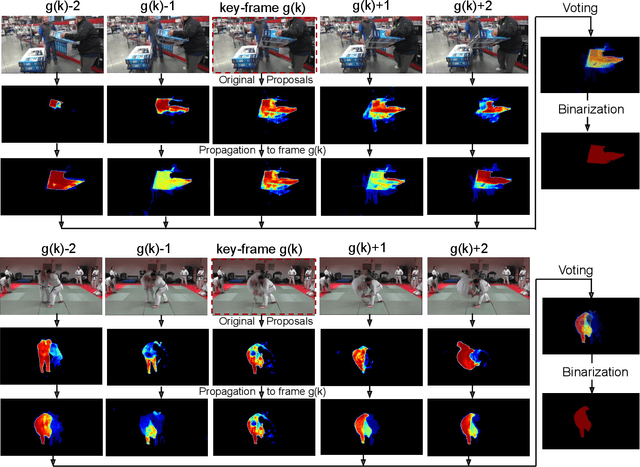

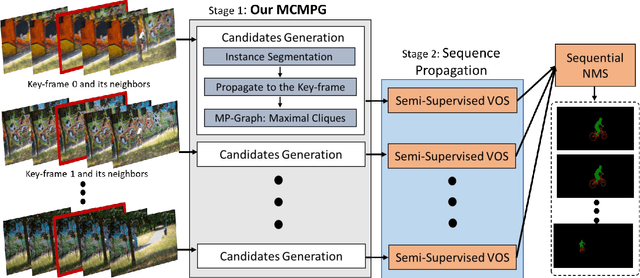

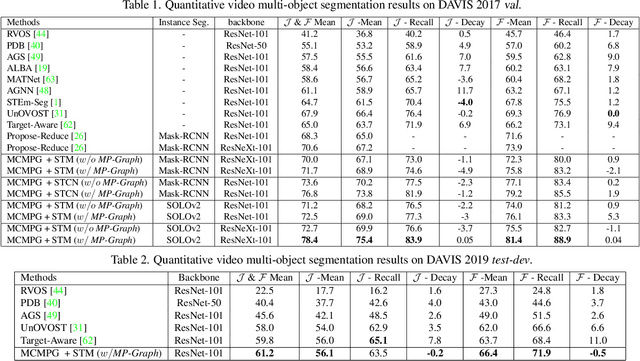

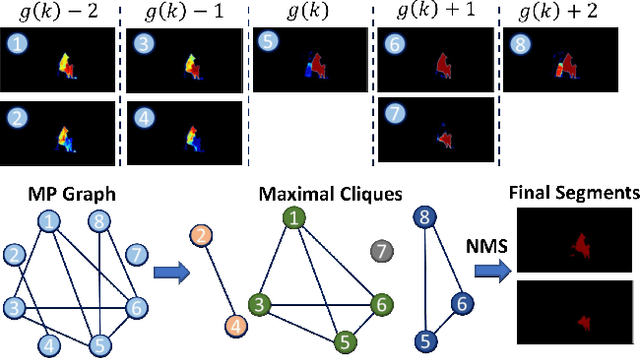

Unsupervised Video Object Segmentation (UVOS) aims to discover objects and track them through videos. For accurate UVOS, we observe that if one can locate precise segment proposals on key frames, the subsequent processes are much simpler. Hence, we propose to reason about key frame proposals using a graph built from object probability masks that are initially generated on multiple frames around the key frame and then propagated to the key frame. On this graph, we compute maximal cliques, with each clique representing one candidate object. By having the multiple proposals in each clique vote for the key frame proposal, we obtain refined key frame proposals that can be better than any of the single-frame proposals. A semi-supervised VOS algorithm subsequently tracks these key frame proposals through the entire video. Our algorithm is modular and hence can be used with any instance segmentation algorithm and any semi-supervised VOS algorithm. We achieve state-of-the-art performance on the DAVIS-2017 validation and test-dev sets. On the related problem of video instance segmentation, our method is competitive with the previous best algorithm, which requires joint training with the VOS algorithm.
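The clique-and-vote idea described above can be illustrated with a small sketch. This is not the paper's implementation: it assumes binary masks stored as sets of pixel indices (the abstract describes probability masks), links two proposals when their IoU exceeds a hypothetical threshold, and uses a simple majority vote. The function names `iou`, `build_graph`, `maximal_cliques`, and `vote` are invented for this illustration.

```python
from collections import Counter
from itertools import combinations


def iou(a, b):
    """Intersection over union of two masks given as sets of pixel indices."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def build_graph(masks, threshold=0.5):
    """Adjacency sets: proposals i and j are linked when IoU >= threshold.

    The IoU criterion and the threshold value are assumptions of this sketch.
    """
    adj = {i: set() for i in range(len(masks))}
    for i, j in combinations(range(len(masks)), 2):
        if iou(masks[i], masks[j]) >= threshold:
            adj[i].add(j)
            adj[j].add(i)
    return adj


def maximal_cliques(adj):
    """Enumerate maximal cliques with Bron-Kerbosch (with pivoting)."""
    cliques = []

    def expand(r, p, x):
        if not p and not x:
            cliques.append(sorted(r))  # r cannot be extended: it is maximal
            return
        # Pivot on the vertex with the most neighbours among the candidates.
        pivot = max(p | x, key=lambda v: len(adj[v] & p))
        for v in list(p - adj[pivot]):
            expand(r | {v}, p & adj[v], x & adj[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(adj), set())
    return sorted(cliques)


def vote(masks, clique):
    """Keep pixels covered by a strict majority of the clique's proposals."""
    counts = Counter(px for i in clique for px in masks[i])
    return {px for px, c in counts.items() if 2 * c > len(clique)}


# Three overlapping proposals for one object, plus one unrelated proposal.
masks = [{0, 1, 2, 3}, {1, 2, 3, 4}, {1, 2, 3, 5}, {10, 11, 12}]
adj = build_graph(masks, threshold=0.5)
cliques = maximal_cliques(adj)
print(cliques)                   # [[0, 1, 2], [3]]
print(vote(masks, cliques[0]))   # {1, 2, 3}
```

In this toy example the voted mask `{1, 2, 3}` differs from all three proposals in its clique, which is the sense in which a refined key frame proposal can improve on every single-frame proposal.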