MarineGym: Accelerated Training for Underwater Vehicles with High-Fidelity RL Simulation

Paper and Code

Oct 18, 2024

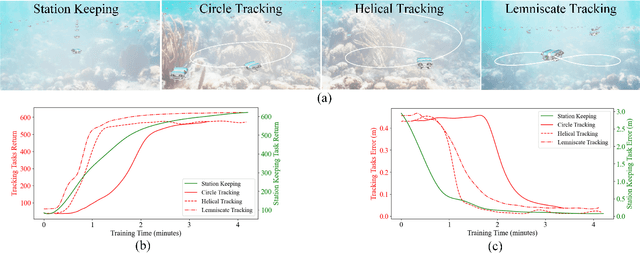

Reinforcement Learning (RL) is a promising solution, allowing Unmanned Underwater Vehicles (UUVs) to learn optimal behaviors through trial and error. However, existing simulators lack efficient integration with RL methods, limiting training scalability and performance. This paper introduces MarineGym, a novel simulation framework designed to enhance RL training efficiency for UUVs by utilizing GPU acceleration. MarineGym offers a 10,000-fold performance improvement over real-time simulation on a single GPU, enabling rapid training of RL algorithms across multiple underwater tasks. Key features include realistic dynamic modeling of UUVs, parallel environment execution, and compatibility with popular RL frameworks like PyTorch and TorchRL. The framework is validated through four distinct tasks: station-keeping, circle tracking, helical tracking, and lemniscate tracking. This framework sets the stage for advancing RL in underwater robotics and facilitating efficient training in complex, dynamic environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge