Lung Nodule Segmentation and Low-Confidence Region Prediction with Uncertainty-Aware Attention Mechanism


Mar 19, 2023

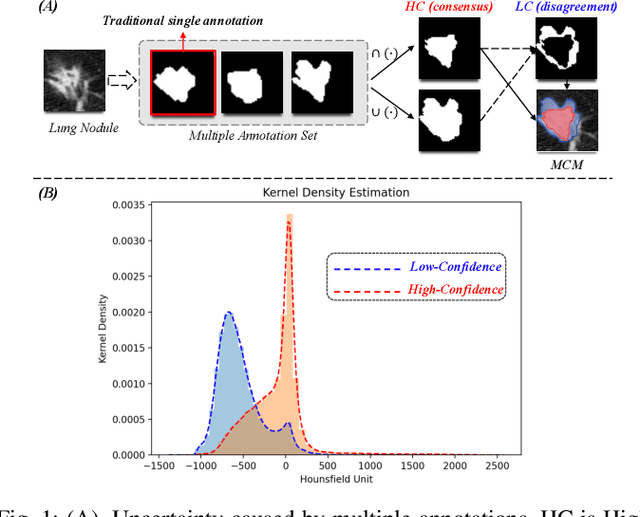

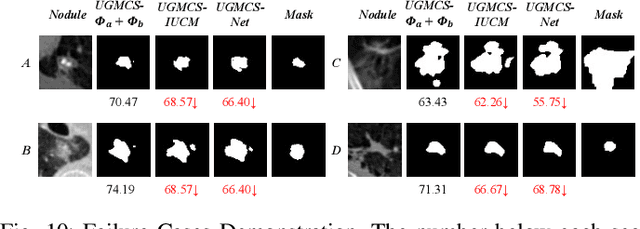

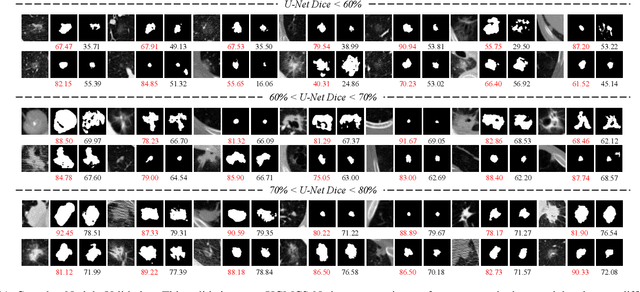

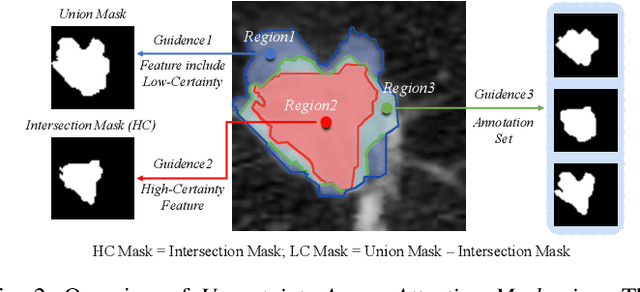

Radiologists differ in training and clinical experience, so they may annotate the same lung nodule differently, which introduces segmentation uncertainty across multiple annotations. Conventional methods usually choose a single annotation as the learning target or try to learn a latent space of the various annotations. In doing so, they waste the valuable information about consensus and disagreement contained in the multiple annotations. This paper proposes an Uncertainty-Aware Attention Mechanism (UAAM), which uses the consensus and disagreement among annotations to produce a better segmentation. In UAAM, we propose a Multi-Confidence Mask (MCM), which combines a Low-Confidence (LC) Mask and a High-Confidence (HC) Mask. The LC mask indicates regions with low segmentation confidence, where radiologists may choose different segmentations. Following UAAM, we further design an Uncertainty-Guide Segmentation Network (UGS-Net), which contains three modules: the Feature Extracting Module captures a general feature of a lung nodule; the Uncertainty-Aware Module produces three features, for the annotations' union, intersection, and annotation set; and the Intersection-Union Constraining Module uses the distances between the three features to balance the predictions of the final segmentation, LC mask, and HC mask. To demonstrate the performance of our method, we propose a Complex Nodule Challenge on LIDC-IDRI, which tests UGS-Net's segmentation performance on lung nodules that are difficult for U-Net to segment. Experimental results demonstrate that our method can significantly improve segmentation performance on nodules that U-Net segments poorly.
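To make the union, intersection, and confidence masks concrete, the following is a minimal sketch in Python with NumPy. It rests on assumptions the abstract does not spell out: the HC mask is taken to be the intersection of all annotations, and the LC mask is taken to be the union minus the intersection. The function name `multi_confidence_masks` and the toy arrays are hypothetical and are not taken from the authors' code.

```python
import numpy as np


def multi_confidence_masks(annotations):
    """Derive union, intersection, LC, and HC masks from binary annotations.

    Assumption (not stated in the abstract): HC = intersection of all
    annotations, LC = union minus intersection.
    """
    # Stack the per-radiologist masks into shape (n_annotators, H, W).
    stack = np.stack([np.asarray(a, dtype=bool) for a in annotations])
    union = stack.any(axis=0)          # marked by at least one radiologist
    intersection = stack.all(axis=0)   # marked by every radiologist
    low_conf = union & ~intersection   # region where radiologists disagree
    high_conf = intersection           # region where radiologists agree
    return union, intersection, low_conf, high_conf


# Toy 2x4 annotations from three hypothetical radiologists.
a = np.array([[0, 1, 1, 0], [0, 1, 1, 0]])
b = np.array([[0, 1, 1, 1], [0, 0, 1, 0]])
c = np.array([[1, 1, 1, 0], [0, 1, 1, 0]])

union, intersection, low_conf, high_conf = multi_confidence_masks([a, b, c])
print(low_conf.astype(int))
```

Under these assumptions, the LC and HC masks are disjoint and together cover the union, so every annotated pixel is either a point of consensus or a point of disagreement.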
