Low-Rank Tensors for Multi-Dimensional Markov Models

Paper and Code

Nov 04, 2024

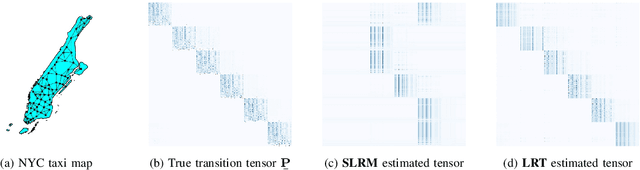

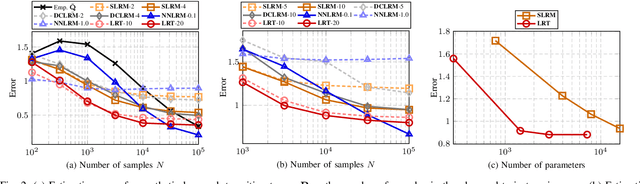

This work presents a low-rank tensor model for multi-dimensional Markov chains. A common approach to simplify the dynamical behavior of a Markov chain is to impose low-rankness on the transition probability matrix. Inspired by the success of these matrix techniques, we present low-rank tensors for representing transition probabilities on multi-dimensional state spaces. Through tensor decomposition, we provide a connection between our method and classical probabilistic models. Moreover, our proposed model yields a parsimonious representation with fewer parameters than matrix-based approaches. Unlike these methods, which impose low-rankness uniformly across all states, our tensor method accounts for the multi-dimensionality of the state space. We also propose an optimization-based approach to estimate a Markov model as a low-rank tensor. Our optimization problem can be solved by the alternating direction method of multipliers (ADMM), which enjoys convergence to a stationary solution. We empirically demonstrate that our tensor model estimates Markov chains more efficiently than conventional techniques, requiring both fewer samples and parameters. We perform numerical simulations for both a synthetic low-rank Markov chain and a real-world example with New York City taxi data, showcasing the advantages of multi-dimensionality for modeling state spaces.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge