Long-run User Value Optimization in Recommender Systems through Content Creation Modeling


Apr 25, 2022

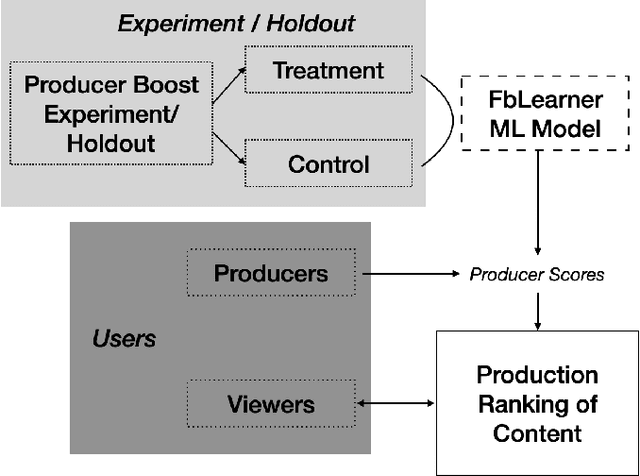

Content recommender systems are generally adept at maximizing immediate user satisfaction, but optimizing for *long-run* user value requires more statistically sophisticated solutions than simple off-the-shelf recommender algorithms. In this paper we lay out such a solution: we optimize *long-run* user value through discounted utility maximization, using a machine learning method we have developed for estimating it. Our method estimates which content producers are most likely to create the highest long-run user value if their content is shown more to users who enjoy it in the present. We perform this estimation with the help of an A/B test and a heterogeneous-effects machine learning model. We have used such models in Facebook's feed ranking system, and the same approach can be used in other recommender systems.
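To make the two ingredients named in the abstract concrete, here is a minimal sketch and not the paper's actual method. It assumes that a user's long-run value is a discounted sum of per-period utilities, and it stands in for the heterogeneous-effects model with the crudest possible version: a per-segment difference in mean outcome between the treatment and control arms of an A/B test. The function names, the `discount` parameter, and the `(segment, treated, outcome)` row layout are all hypothetical.

```python
from collections import defaultdict


def discounted_utility(utilities, discount=0.9):
    """Sum of per-period utilities, with period t weighted by discount**t.

    `discount` is an assumed parameter; the abstract does not give a value.
    """
    return sum(u * discount ** t for t, u in enumerate(utilities))


def effect_by_segment(rows):
    """Estimate the treatment effect separately for each segment.

    rows: iterable of (segment, treated, outcome) tuples, where `segment`
    could be a content-producer group, `treated` marks the A/B test arm,
    and `outcome` could be a user's discounted utility.

    Returns {segment: mean(treated outcomes) - mean(control outcomes)}.
    Segments missing either arm are skipped, since no difference exists.
    """
    # Per segment: [treated sum, treated count, control sum, control count]
    stats = defaultdict(lambda: [0.0, 0, 0.0, 0])
    for segment, treated, outcome in rows:
        s = stats[segment]
        if treated:
            s[0] += outcome
            s[1] += 1
        else:
            s[2] += outcome
            s[3] += 1
    return {
        seg: s[0] / s[1] - s[2] / s[3]
        for seg, s in stats.items()
        if s[1] and s[3]
    }
```

Ranking segments by the estimated effect would then suggest which producers' content to show more often. A real system would replace the per-segment means with a learned model over many features, which is what a heterogeneous-effects model provides.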
