LogicNet: A Logical Consistency Embedded Face Attribute Learning Network

Paper and Code

Nov 19, 2023

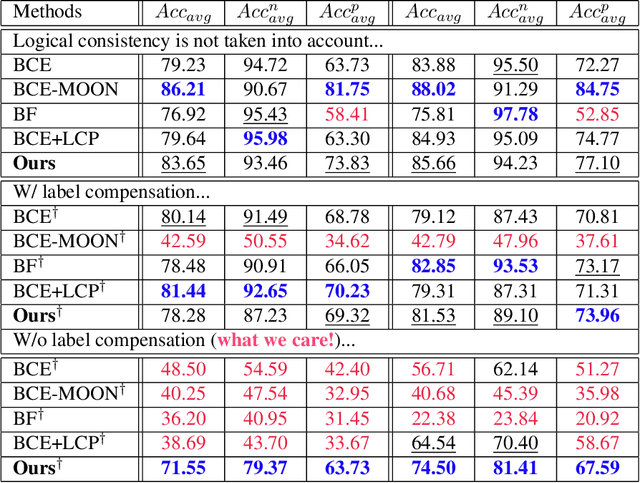

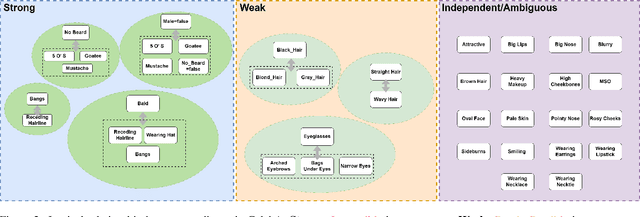

Ensuring logical consistency in predictions is a crucial yet overlooked aspect of multi-attribute classification. We explore the potential reasons for this oversight and introduce two pressing challenges to the field: 1) How can we ensure that a model trained on data checked for logical consistency yields logically consistent predictions? 2) How can we achieve the same with data that has not undergone logical consistency checks? Minimizing manual effort is also essential for enhancing automation. To address these challenges, we introduce two datasets, FH41K and CelebA-logic, and propose LogicNet, an adversarial training framework that learns the logical relationships between attributes. LogicNet's accuracy exceeds that of the next-best approach by 23.05%, 9.96%, and 1.71% on FH37K, FH41K, and CelebA-logic, respectively. In a real-world case analysis, our approach reduces the average number of failed cases by more than 50% compared to other methods.
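To make the notion of a logically inconsistent prediction concrete, the following is a minimal sketch of a rule-based consistency check over binary attribute predictions. It is not LogicNet and not the paper's evaluation code. The attribute names, the two rule types (mutual exclusion and implication), and the helper names `is_consistent` and `failed_case_rate` are all assumptions chosen for illustration; the abstract does not specify the actual rules.

```python
from typing import Dict, List, Tuple

# Hypothetical rules, for illustration only (not taken from the paper).
# Pairs of attributes that should never both be predicted as present.
MUTUALLY_EXCLUSIVE: List[Tuple[str, str]] = [
    ("bald", "wavy_hair"),
    ("no_beard", "goatee"),
]
# (premise, consequence): if the premise is predicted, the consequence must be too.
IMPLIES: List[Tuple[str, str]] = [
    ("goatee", "facial_hair"),
]


def is_consistent(pred: Dict[str, bool]) -> bool:
    """Return True if one prediction violates none of the rules above.

    Attributes missing from `pred` are treated as predicted absent.
    """
    for a, b in MUTUALLY_EXCLUSIVE:
        if pred.get(a, False) and pred.get(b, False):
            return False
    for premise, consequence in IMPLIES:
        if pred.get(premise, False) and not pred.get(consequence, False):
            return False
    return True


def failed_case_rate(preds: List[Dict[str, bool]]) -> float:
    """Fraction of predictions that break at least one rule."""
    if not preds:
        return 0.0
    failed = sum(1 for p in preds if not is_consistent(p))
    return failed / len(preds)


if __name__ == "__main__":
    example_preds = [
        {"bald": True, "wavy_hair": True},       # violates mutual exclusion
        {"goatee": True, "facial_hair": True},   # consistent
        {"goatee": True, "facial_hair": False},  # violates implication
        {},                                      # consistent (nothing predicted)
    ]
    print(failed_case_rate(example_preds))
```

A check of this kind only detects inconsistencies after the fact. The abstract describes a different goal: training the model so that such violations become rare in the first place.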
