Lexicon Enhanced Chinese Sequence Labeling Using BERT Adapter

Paper and Code

May 20, 2021

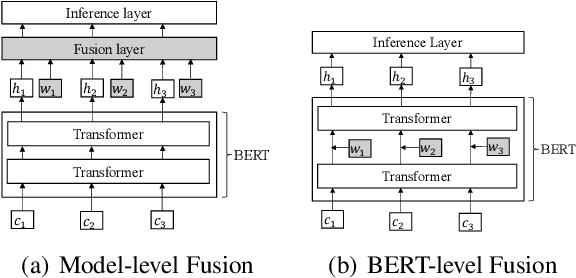

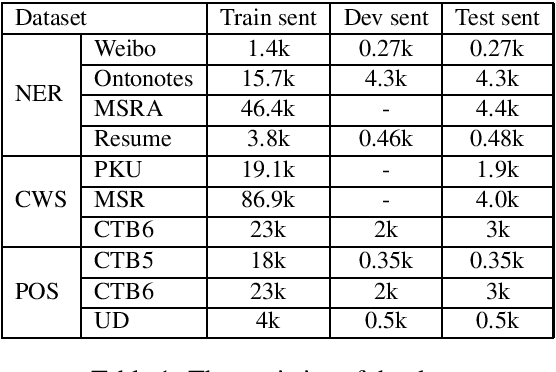

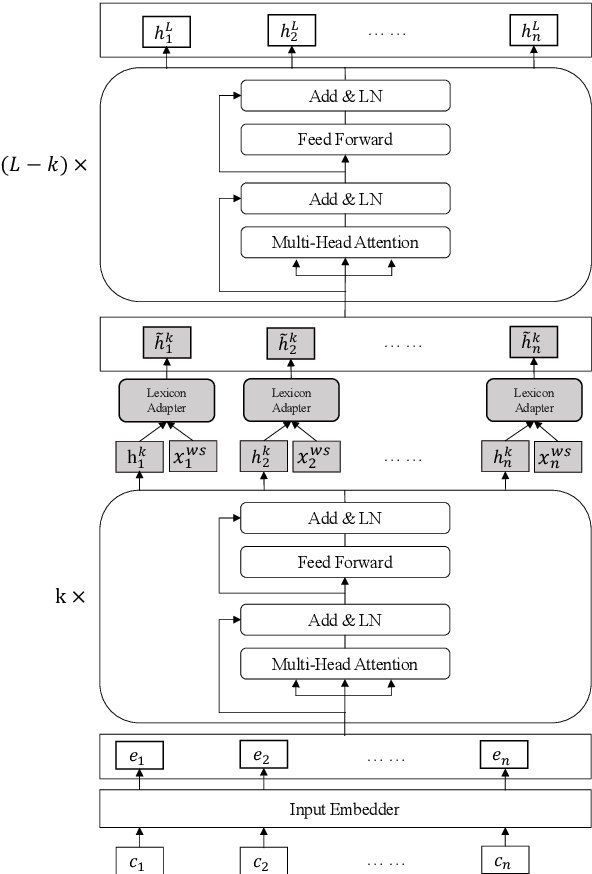

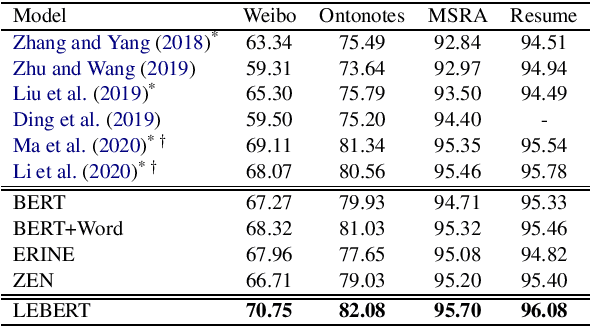

Lexicon information and pre-trained models, such as BERT, have been combined to explore Chinese sequence labelling tasks due to their respective strengths. However, existing methods solely fuse lexicon features via a shallow and random initialized sequence layer and do not integrate them into the bottom layers of BERT. In this paper, we propose Lexicon Enhanced BERT (LEBERT) for Chinese sequence labelling, which integrates external lexicon knowledge into BERT layers directly by a Lexicon Adapter layer. Compared with the existing methods, our model facilitates deep lexicon knowledge fusion at the lower layers of BERT. Experiments on ten Chinese datasets of three tasks including Named Entity Recognition, Word Segmentation, and Part-of-Speech tagging, show that LEBERT achieves the state-of-the-art results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge