Leveraging Subword Embeddings for Multinational Address Parsing


Jun 29, 2020

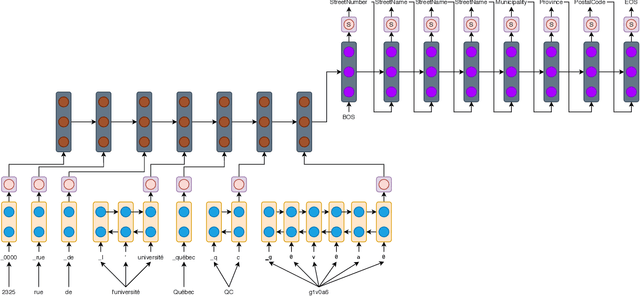

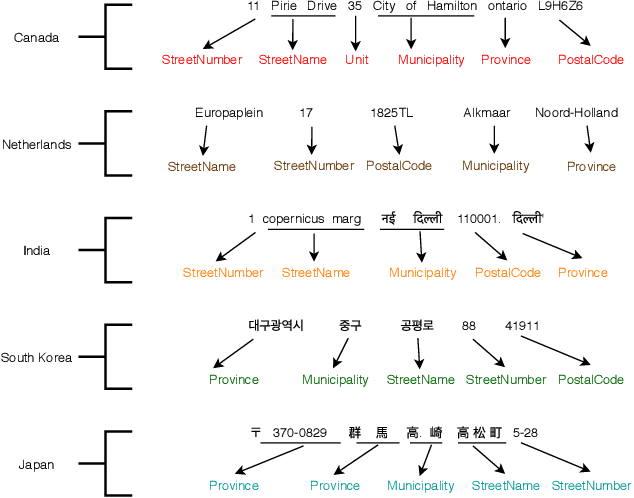

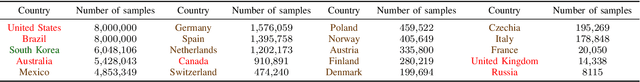

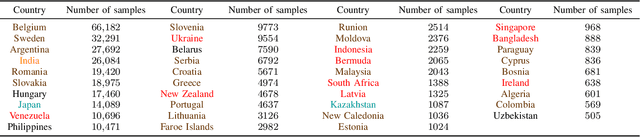

Address parsing consists of identifying the segments that make up an address, such as a street name or a postal code. Because of its importance for tasks like record linkage, address parsing has been approached with many techniques. Neural network methods have set a new state of the art for address parsing. While this approach has yielded notable results, previous work has only applied neural networks to parse addresses from a single source country. We propose an approach that combines subword embeddings with a Recurrent Neural Network architecture to build a single model capable of learning to parse addresses from multiple countries at the same time, while taking into account the differences in languages and address formatting systems. We achieve accuracies of around 99 % on the countries used for training, with no pre-processing or post-processing needed. In addition, we explore the possibility of transferring the address parsing knowledge acquired by training on some countries' addresses to other countries with no further training, a setting also called zero-shot transfer learning. We achieve good results for more than 80 % of the countries evaluated in this setting (34 out of 41), and near state-of-the-art performance for almost 50 % of them (19 out of 41).
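To make the idea of subword embeddings concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: it assumes a fastText-style scheme in which each token is split into character n-grams, each n-gram is hashed into a bucket, and the token vector is the average of its bucket vectors. The names `char_ngrams`, `bucket_vector`, `embed_token`, and `embed_address`, the n-gram range, the embedding size, and the bucket count are all hypothetical choices made for illustration. The recurrent network that would consume these vectors and predict one tag per token is omitted.

```python
import hashlib
import random

DIM = 8          # toy embedding size (assumption, real models use far more)
BUCKETS = 1000   # number of hashed n-gram buckets (assumption)


def char_ngrams(token, n_min=3, n_max=4):
    """Return the character n-grams of a token padded with boundary markers."""
    padded = f"<{token.lower()}>"
    return [
        padded[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(padded) - n + 1)
    ]


def bucket_vector(ngram):
    """Map an n-gram to a fixed pseudo-random vector through a stable hash.

    A trained model would learn these vectors; here they are only seeded
    deterministically so the example is reproducible.
    """
    digest = hashlib.md5(ngram.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % BUCKETS
    rng = random.Random(bucket)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def embed_token(token):
    """Average the bucket vectors of all n-grams in the token."""
    vectors = [bucket_vector(gram) for gram in char_ngrams(token)]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def embed_address(address):
    """Embed a whitespace-tokenized address, one vector per token."""
    return [embed_token(token) for token in address.split()]


if __name__ == "__main__":
    # Illustrative address; any token gets a vector, even if never seen before.
    for token, vector in zip("350 rue des Lilas Ouest".split(),
                             embed_address("350 rue des Lilas Ouest")):
        print(token, [round(x, 2) for x in vector[:3]])
```

Because a vector is assembled from character n-grams rather than looked up per word, tokens from languages or countries absent from training still receive an embedding, which is the property a multinational or zero-shot setting relies on.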
