Learning to Segment Human Body Parts with Synthetically Trained Deep Convolutional Networks

Paper and Code

Feb 02, 2021

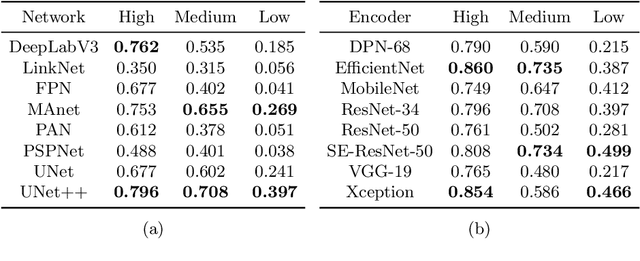

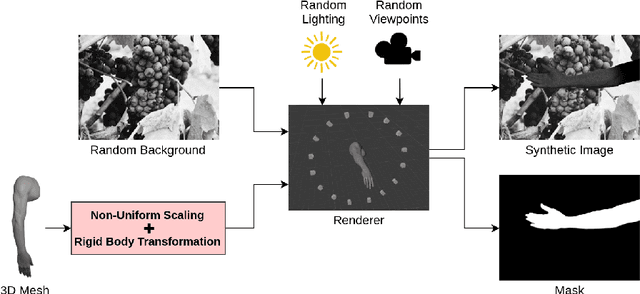

This paper presents a new framework for human body part segmentation based on Deep Convolutional Neural Networks trained using only synthetic data. The proposed approach achieves cutting-edge results without the need of training the models with real annotated data of human body parts. Our contributions include a data generation pipeline, that exploits a game engine for the creation of the synthetic data used for training the network, and a novel pre-processing module, that combines edge response map and adaptive histogram equalization to guide the network to learn the shape of the human body parts ensuring robustness to changes in the illumination conditions. For selecting the best candidate architecture, we performed exhaustive tests on manually-annotated images of real human body limbs. We further present an ablation study to validate our pre-processing module. The results show that our method outperforms several state-of-the-art semantic segmentation networks by a large margin. We release an implementation of the proposed approach along with the acquired datasets with this paper.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge