Learning to Disentangle GAN Fingerprint for Fake Image Attribution

Paper and Code

Jun 16, 2021

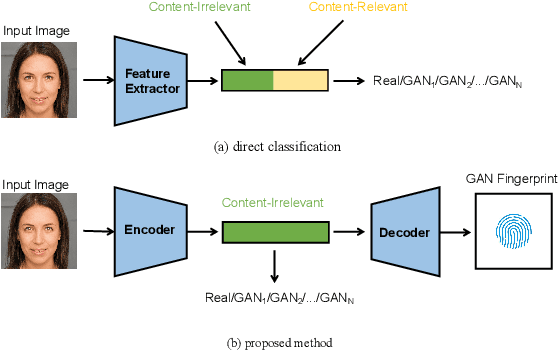

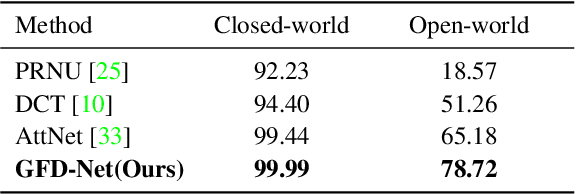

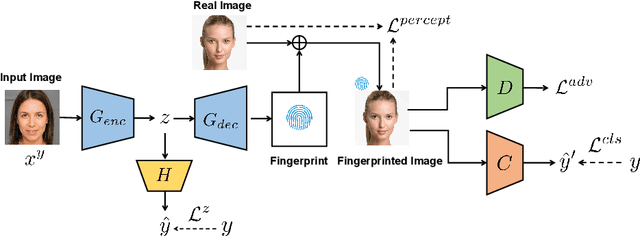

The rapid progress of generative models has brought new threats to visual forensics, such as malicious impersonation and digital copyright infringement, which has motivated work on fake image attribution. Existing approaches mainly rely on a direct classification framework. Without additional supervision, the extracted features can include many content-relevant components and generalize poorly. Meanwhile, how to obtain an interpretable GAN fingerprint that explains the attribution decision remains an open question. Adopting a multi-task framework, we propose a GAN Fingerprint Disentangling Network (GFD-Net) that simultaneously disentangles the fingerprint from GAN-generated images and produces a content-irrelevant representation for fake image attribution. A series of constraints is imposed to guarantee the stability and discriminability of the fingerprint, which in turn helps content-irrelevant feature extraction. Further, we perform a comprehensive analysis of the GAN fingerprint, providing some clues about its properties and about which factors in the GAN architecture dominate it. Experiments show that GFD-Net achieves superior fake image attribution performance in both closed-world and open-world testing. We also apply our method to binary fake image detection, where it exhibits significant generalization ability on unseen generators.
