Learning Theory for Inferring Interaction Kernels in Second-Order Interacting Agent Systems

Paper and Code

Oct 08, 2020

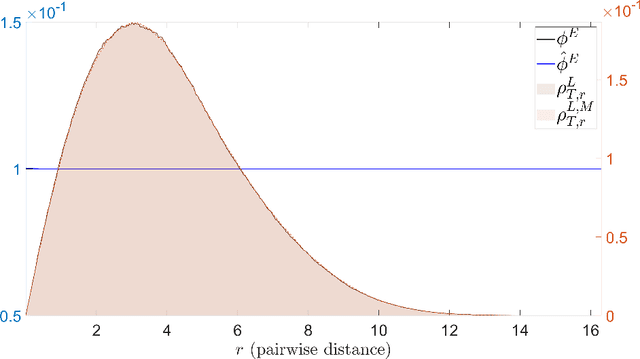

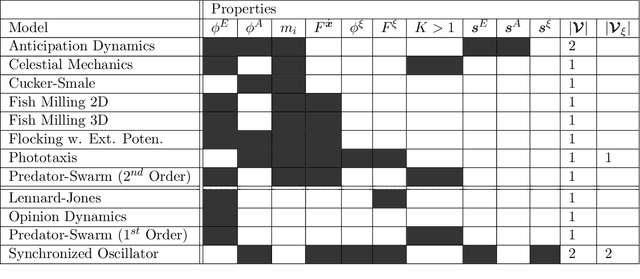

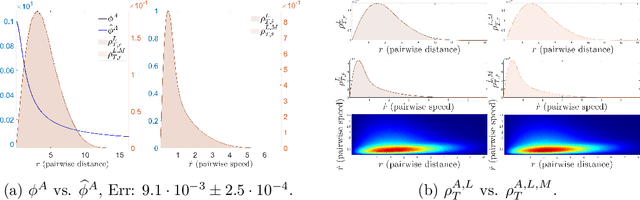

Modeling the complex interactions of systems of particles or agents is a fundamental scientific and mathematical problem that is studied in diverse fields, ranging from physics and biology to economics and machine learning. In this work, we describe a very general second-order, heterogeneous, multivariable, interacting agent model, with an environment, that encompasses a wide variety of known systems. We describe an inference framework that uses techniques based on nonparametric regression and approximation theory to efficiently derive estimators of the interaction kernels that drive these dynamical systems. We develop a complete learning theory that establishes strong consistency and optimal nonparametric minimax rates of convergence for the estimators, as well as provably accurate predicted trajectories. The estimators exploit the structure of the equations in order to overcome the curse of dimensionality. We also describe a fundamental coercivity condition on the inverse problem, which ensures that the kernels can be learned and relates to the minimal singular value of the learning matrix. The numerical algorithm presented to build the estimators is parallelizable, performs well on high-dimensional problems, and is demonstrated on complex dynamical systems.
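To make the idea of kernel inference concrete, the following is a minimal sketch under strong simplifying assumptions, not the paper's algorithm or model. It assumes a homogeneous system with unit masses, no environment and no velocity-dependent forces, where agent i has acceleration (1/N) Σ_j φ(|x_j − x_i|)(x_j − x_i), and it assumes positions and accelerations are observed directly. The kernel φ is approximated by a piecewise-constant function on distance bins and fitted by least squares. All names (`build_design`, `fit_kernel`, `simulate_accelerations`, `edges`) are hypothetical and chosen for illustration.

```python
import numpy as np

def pairwise(x):
    # x has shape (N, d); diff[i, j] = x[j] - x[i], dist[i, j] = |x[j] - x[i]|
    diff = x[None, :, :] - x[:, None, :]
    dist = np.linalg.norm(diff, axis=-1)
    return diff, dist

def bin_index(dist, edges):
    # Map each pairwise distance to a bin; out-of-range values go to the end bins.
    n_bins = len(edges) - 1
    return np.clip(np.digitize(dist, edges) - 1, 0, n_bins - 1)

def build_design(x, edges):
    # Column k holds (1/N) * sum_j 1[r_ij in bin k] * (x_j - x_i),
    # flattened over agents and coordinates (assumed toy model, see lead-in).
    N, d = x.shape
    diff, dist = pairwise(x)
    bins = bin_index(dist, edges)
    n_bins = len(edges) - 1
    off_diag = ~np.eye(N, dtype=bool)
    A = np.zeros((N * d, n_bins))
    for k in range(n_bins):
        mask = (bins == k) & off_diag
        A[:, k] = (mask[:, :, None] * diff).sum(axis=1).ravel() / N
    return A

def fit_kernel(snapshots, accelerations, edges):
    # Stack all snapshots into one linear system and solve it by least squares.
    A = np.vstack([build_design(x, edges) for x in snapshots])
    b = np.concatenate([a.ravel() for a in accelerations])
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    # The smallest singular value indicates how well-posed the fit is.
    smin = np.linalg.svd(A, compute_uv=False).min()
    return coef, smin

def simulate_accelerations(x, edges, true_coef):
    # Synthetic "observations" from a known piecewise-constant kernel.
    diff, dist = pairwise(x)
    phi = true_coef[bin_index(dist, edges)]
    return (phi[:, :, None] * diff).sum(axis=1) / x.shape[0]

# Synthetic demo: 200 snapshots of 10 agents in the unit square.
rng = np.random.default_rng(0)
edges = np.linspace(0.0, 1.5, 6)                 # 5 distance bins
true_coef = np.array([2.0, 1.0, 0.5, -0.5, -1.0])
snapshots = [rng.uniform(0.0, 1.0, size=(10, 2)) for _ in range(200)]
accelerations = [simulate_accelerations(x, edges, true_coef) for x in snapshots]

coef, smin = fit_kernel(snapshots, accelerations, edges)
print("estimated kernel values:", np.round(coef, 4))
print("smallest singular value of the design matrix:", smin)
```

Because the synthetic data are noise-free and generated from a kernel in the same piecewise-constant family, the fit here is close to exact; with noisy observations or a kernel outside the hypothesis space, the error would depend on the bin width and the amount of data. The smallest singular value of the stacked design matrix is included only as a rough, illustrative analogue of the role the abstract assigns to the minimal singular value of the learning matrix.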
