Learning Multimodal Fixed-Point Weights using Gradient Descent

Jul 16, 2019

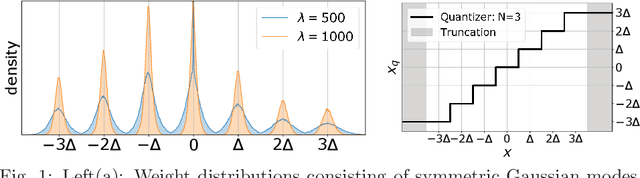

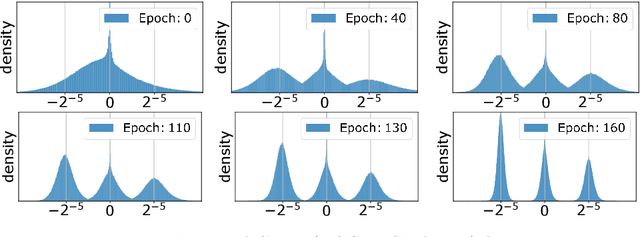

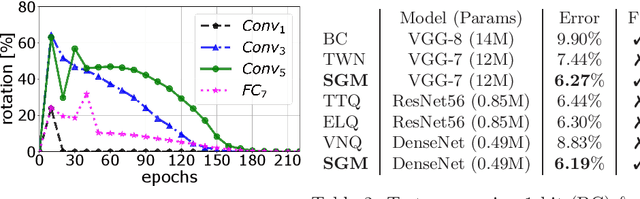

Due to their high computational complexity, deep neural networks are still limited to powerful processing units. To reduce model complexity through low-bit fixed-point quantization, we propose a gradient-based optimization strategy that generates a symmetric mixture of Gaussian modes (SGM), where each mode corresponds to a particular quantization stage. We achieve state-of-the-art performance at 2-bit precision and illustrate the model's ability to adapt its weights in a self-dependent manner during training.
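The abstract states the idea only at a high level. The following is a minimal Python sketch of the general principle, not the authors' method: it assumes equal mixture weights, one shared standard deviation `sigma`, and a symmetric level grid `step * (k - (2**bits - 1) / 2)`. These choices and all function names (`mode_centers`, `sgm_penalty`, `quantize`, `train_penalty_only`) are hypothetical. The sketch uses the negative log-likelihood of the weights under such a symmetric Gaussian mixture as a penalty and runs plain gradient descent on it, so each weight drifts toward the mode (quantization stage) that claims it most strongly.

```python
import math


def mode_centers(bits, step):
    """Symmetric grid of 2**bits quantization levels mirrored around zero.

    Assumption: levels are step * (k - (2**bits - 1) / 2); the paper's
    exact level placement may differ.
    """
    n = 2 ** bits
    return [step * (k - (n - 1) / 2) for k in range(n)]


def sgm_penalty(weights, centers, sigma):
    """Mean negative log-likelihood of the weights under an equal-weight
    Gaussian mixture (shared sigma, constant normalizer dropped), plus its
    gradient with respect to each weight."""
    n = len(weights)
    total = 0.0
    grads = []
    for w in weights:
        logits = [-(w - mu) ** 2 / (2 * sigma ** 2) for mu in centers]
        m = max(logits)  # log-sum-exp shift for numerical stability
        exps = [math.exp(lg - m) for lg in logits]
        z = sum(exps)
        total -= m + math.log(z / len(centers))
        # Responsibilities: how strongly each mode "claims" this weight.
        resp = [e / z for e in exps]
        g = sum(r * (w - mu) for r, mu in zip(resp, centers)) / sigma ** 2
        grads.append(g / n)
    return total / n, grads


def quantize(weights, centers):
    """Snap each weight to its nearest quantization level."""
    return [min(centers, key=lambda mu: abs(w - mu)) for w in weights]


def train_penalty_only(weights, centers, sigma=0.25, lr=0.1, steps=200):
    """Plain gradient descent on the mixture penalty alone (no task loss)."""
    w = list(weights)
    for _ in range(steps):
        _, grads = sgm_penalty(w, centers, sigma)
        w = [wi - lr * gi for wi, gi in zip(w, grads)]
    return w


if __name__ == "__main__":
    centers = mode_centers(bits=2, step=1.0)  # [-1.5, -0.5, 0.5, 1.5]
    init = [0.9, -0.2, 0.3, -1.7]
    final = train_penalty_only(init, centers)
    print([round(x, 3) for x in final])
    print(quantize(final, centers))
```

In a real training run, a penalty of this kind would presumably be added to the task loss with some weighting factor rather than minimized alone; the sketch omits the task loss so that the pull of each weight toward its nearest mode is visible in isolation.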

* Presented at ESANN 2019 (European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning): https://www.elen.ucl.ac.be/esann/proceedings/papers.php?ann=2019