Learning Models for Following Natural Language Directions in Unknown Environments

Paper and Code

Mar 17, 2015

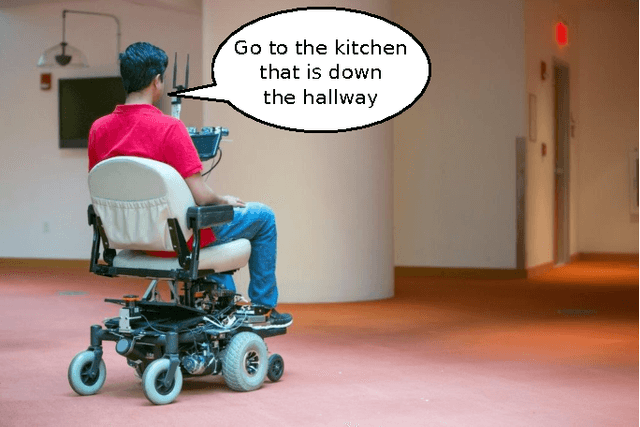

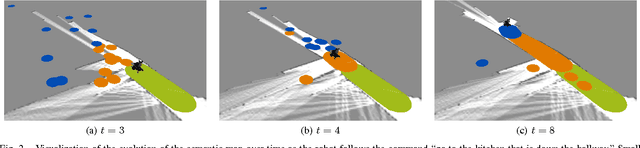

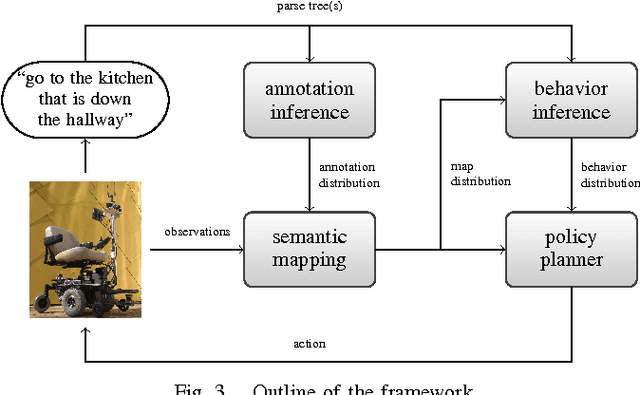

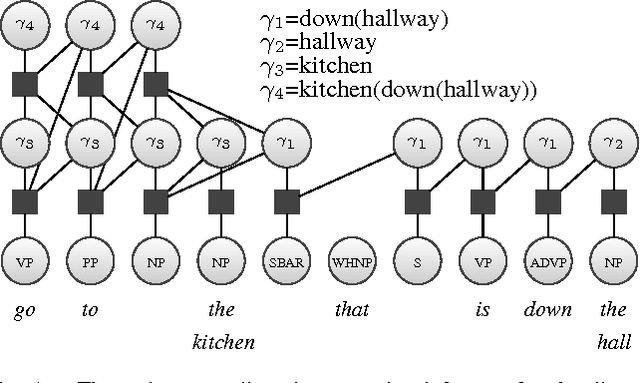

Natural language offers an intuitive and flexible means for humans to communicate with the robots that we will increasingly work alongside in our homes and workplaces. Recent advancements have given rise to robots that are able to interpret natural language manipulation and navigation commands, but these methods require a prior map of the robot's environment. In this paper, we propose a novel learning framework that enables robots to successfully follow natural language route directions without any previous knowledge of the environment. The algorithm utilizes spatial and semantic information that the human conveys through the command to learn a distribution over the metric and semantic properties of spatially extended environments. Our method uses this distribution in place of the latent world model and interprets the natural language instruction as a distribution over the intended behavior. A novel belief space planner reasons directly over the map and behavior distributions to solve for a policy using imitation learning. We evaluate our framework on a voice-commandable wheelchair. The results demonstrate that by learning and performing inference over a latent environment model, the algorithm is able to successfully follow natural language route directions within novel, extended environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge