Learning Landmark-Based Ensembles with Random Fourier Features and Gradient Boosting


Jun 14, 2019

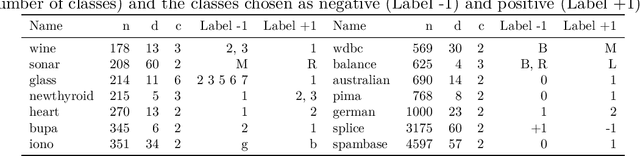

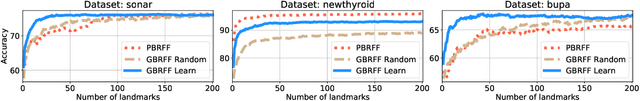

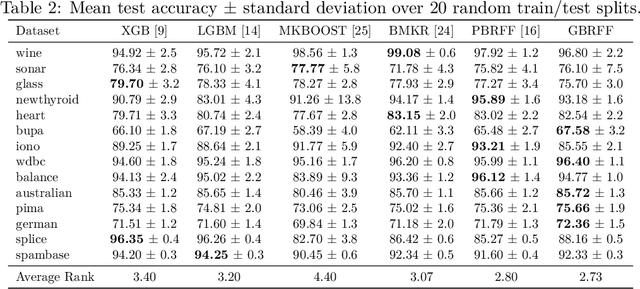

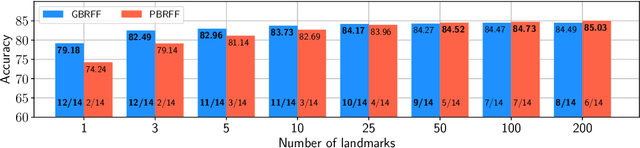

We propose a Gradient Boosting algorithm for learning an ensemble of kernel functions adapted to the task at hand. State-of-the-art Multiple Kernel Learning techniques select from a pre-computed dictionary of kernel functions. In contrast, at each iteration we fit a kernel by approximating it as a weighted sum of Random Fourier Features (RFF) and by optimizing their barycenter. The resulting method is more versatile, easier to set up, and likely to perform better. Our study builds on a recent result showing that one can learn a kernel from RFF by minimizing a PAC-Bayesian bound on the kernel alignment generalization loss, a minimum that is obtained efficiently from a closed-form solution. We conduct an experimental analysis to highlight the advantages of our method over state-of-the-art Boosting-based and kernel-learning methods.
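To make "a kernel approximated as a weighted sum of Random Fourier Features" concrete, here is a minimal NumPy sketch. It is not the paper's algorithm: it covers only the RFF approximation of a Gaussian kernel, with an optional weight vector over the sampled frequencies. The function names, the `gamma` parameterization, and the uniform default weights are assumptions made for illustration. The PAC-Bayesian closed-form weights, the barycenter optimization, and the boosting loop are not reproduced.

```python
import numpy as np


def sample_frequencies(dim, n_features, gamma, rng):
    """Draw frequencies from the Fourier transform of exp(-gamma * ||x - y||^2).

    For this Gaussian kernel the spectral distribution is N(0, 2 * gamma * I).
    (Illustrative helper; the name and signature are hypothetical.)
    """
    return rng.normal(0.0, np.sqrt(2.0 * gamma), size=(n_features, dim))


def weighted_rff_kernel(X, Y, omegas, weights=None):
    """Approximate a kernel as sum_j weights[j] * cos(omega_j . (x - y)).

    With uniform weights this is the standard RFF Monte Carlo estimate.
    Non-uniform weights give a reweighted kernel; how those weights would
    be learned is outside the scope of this sketch.
    """
    if weights is None:
        weights = np.full(len(omegas), 1.0 / len(omegas))
    proj_x = X @ omegas.T  # shape (n_x, n_features)
    proj_y = Y @ omegas.T  # shape (n_y, n_features)
    # cos(a - b) = cos(a) cos(b) + sin(a) sin(b), summed over features
    return (np.cos(proj_x) * weights) @ np.cos(proj_y).T + (
        np.sin(proj_x) * weights
    ) @ np.sin(proj_y).T


def gaussian_kernel(X, Y, gamma):
    """Exact Gaussian kernel, used only as a reference for comparison."""
    sq_dists = (
        (X ** 2).sum(axis=1)[:, None]
        + (Y ** 2).sum(axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))
```

With a few thousand sampled frequencies and uniform weights, `weighted_rff_kernel(X, X, omegas)` should closely track `gaussian_kernel(X, X, gamma)`. Passing a non-uniform `weights` vector that sums to one yields a different shift-invariant kernel built from the same features.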
