Learning Filter Banks Using Deep Learning For Acoustic Signals


Nov 29, 2016

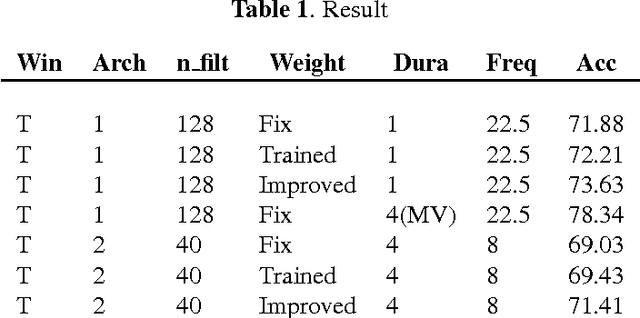

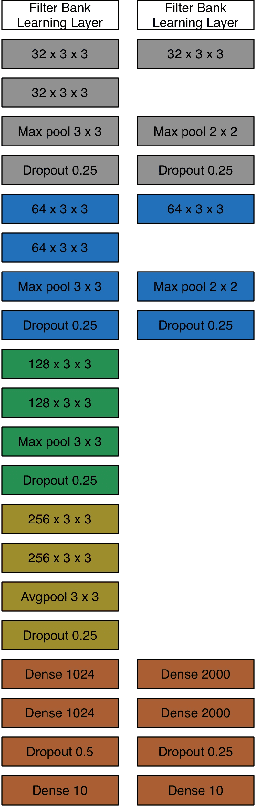

Designing appropriate features for acoustic event recognition tasks is an active field of research. Expressive features should both improve task performance and be interpretable. Currently, heuristically designed features based on domain knowledge require tremendous hand-crafting effort, while features extracted by deep networks are difficult for humans to interpret. In this work, we explore an experience-guided learning method for designing acoustic features. This is a novel hybrid approach that combines domain knowledge with purely data-driven feature design. Based on the procedure for computing log Mel-filter banks, we design a filter bank learning layer and concatenate it with a convolutional neural network (CNN) model. After training the network, the weights of the filter bank learning layer are extracted to facilitate the design of acoustic features. We smooth the trained weights and use them to re-initialize the filter bank learning layer, which then serves as the audio feature extractor. For the environmental sound recognition task based on the UrbanSound8K dataset, experience-guided learning leads to a 2% accuracy improvement over the fixed feature extractor (the log Mel-filter bank). The shapes of the new filter banks are visualized and explained to demonstrate the effectiveness of the feature design process.
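The pipeline described above can be illustrated with a minimal NumPy sketch. It covers only the forward pass of a filter bank layer initialized from triangular Mel filters, plus a smoothing step for the weights. It is not the authors' implementation: the function names, the parameter values (40 filters, 512-point FFT, 22050 Hz sample rate), and the moving-average smoother are all assumptions chosen for illustration, since the abstract does not specify them. In the paper's setup the weight matrix would be trained jointly with the CNN rather than left fixed.

```python
import numpy as np

def hz_to_mel(f):
    # Common HTK-style Mel formula (an assumption; other variants exist).
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filter_bank(n_filters=40, n_fft=512, sample_rate=22050):
    """Triangular Mel filters as a weight matrix of shape (n_fft // 2 + 1, n_filters)."""
    n_bins = n_fft // 2 + 1
    # n_filters + 2 edge points, equally spaced on the Mel scale.
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    hz_points = mel_to_hz(mel_points)
    bin_freqs = np.linspace(0.0, sample_rate / 2.0, n_bins)
    weights = np.zeros((n_bins, n_filters))
    for i in range(n_filters):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (bin_freqs - left) / (center - left)
        falling = (right - bin_freqs) / (right - center)
        weights[:, i] = np.maximum(0.0, np.minimum(rising, falling))
    return weights

def filter_bank_layer(power_spec, weights, eps=1e-6):
    """Map a power spectrogram (n_frames, n_bins) to log filter bank energies."""
    # In a learnable layer, `weights` would be the trainable parameter.
    return np.log(power_spec @ weights + eps)

def smooth_weights(weights, width=5):
    """Moving-average smoothing along the frequency axis (hypothetical smoother)."""
    kernel = np.ones(width) / width
    return np.apply_along_axis(
        lambda col: np.convolve(col, kernel, mode="same"), 0, weights
    )

# Usage: start from Mel weights, compute features, then smooth the weights
# (standing in here for weights extracted after training).
weights = mel_filter_bank()
rng = np.random.default_rng(0)
power_spec = np.abs(rng.normal(size=(10, 257))) ** 2  # synthetic stand-in data
features = filter_bank_layer(power_spec, weights)
smoothed = smooth_weights(weights)
```

Initializing the layer from the Mel filter bank is what makes the approach "experience guided": training starts from the hand-designed solution and only departs from it where the data supports a change, so the learned filters remain comparable to the originals.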
